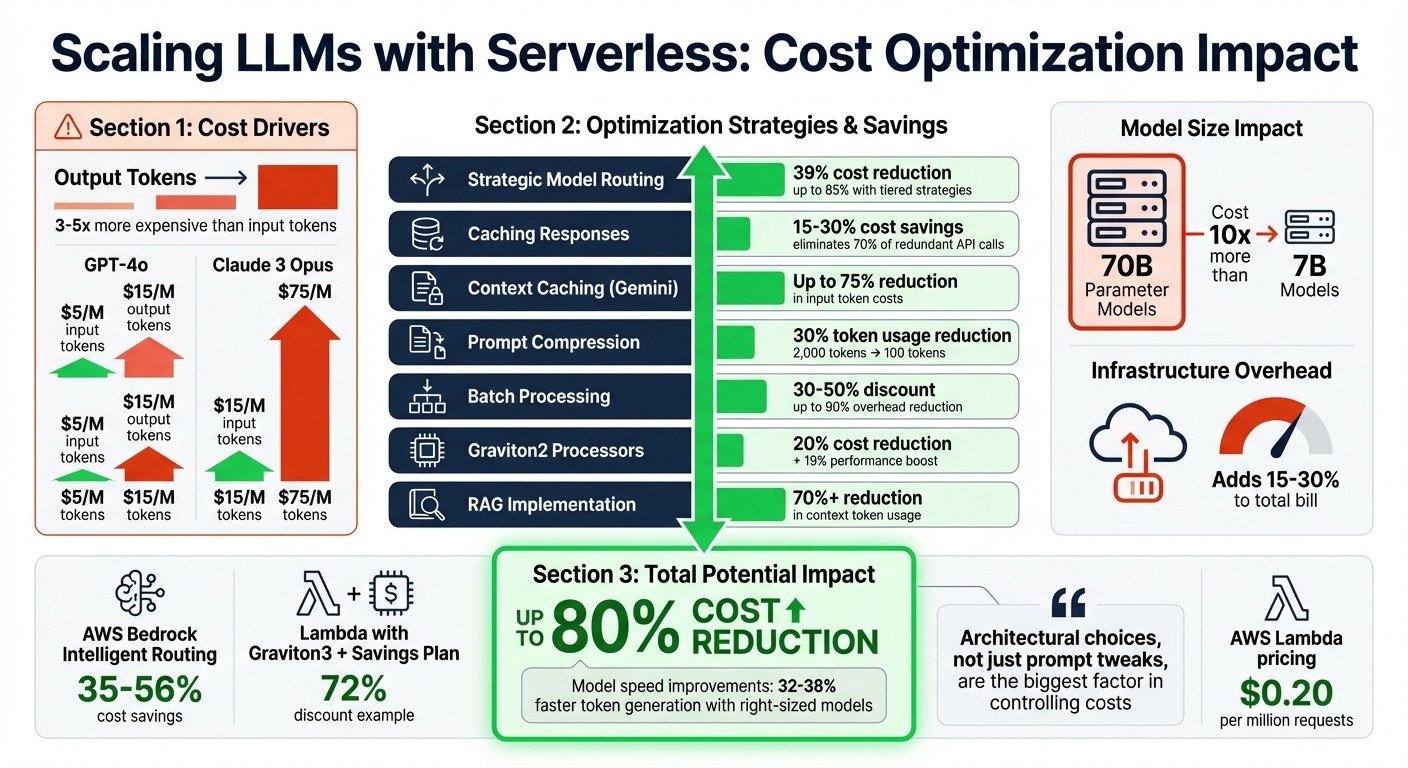

Practical strategies to cut serverless LLM costs: model right-sizing, prompt and context optimization, caching, batching, routing, and real-time observability.

César Miguelañez

Jan 10, 2026

Scaling large language models (LLMs) with serverless architecture can save costs but comes with challenges. Here's what you need to know upfront:

Serverless Benefits: Pay only for active usage - ideal for irregular traffic. Managed APIs (like OpenAI) charge per token, while serverless GPU platforms bill by compute time.

Cost Drivers: Output tokens cost 3–5x more than input tokens. Infrastructure fees, like memory allocation and failed requests, can add 15–30% to your bill.

Optimization Strategies:

Use smaller models for simpler tasks.

Optimize prompts to reduce token usage.

Cache responses to cut redundant processing.

Batch requests for lower overhead.

Set cost-based limits and monitor usage in real time.

Tools: Platforms like Latitude provide observability and feedback workflows to track and reduce costs while maintaining performance.

Key Insight: Architectural choices, not just prompt tweaks, are the biggest factor in controlling costs. Smart routing, caching, and monitoring can cut expenses by up to 80%. Start with small changes like prompt compression or context caching and scale optimizations as needed.

LLM Cost Optimization Strategies: Potential Savings by Method

What Drives Costs in Serverless LLM Workflows

If you're looking to manage costs in serverless LLM workflows, the first step is understanding where your money is going. The main drivers of these costs are token usage, the infrastructure powering the workflows, and the ability to monitor and analyze operations. Without proper tracking, these factors can quickly spiral out of control. Let’s break it down further.

Token Usage and Model Size

Token consumption is the biggest factor affecting costs in LLM workflows. Two key elements impact token costs: how tokens are used and the size of the model. It’s worth noting that output tokens are significantly more expensive than input tokens - 3 to 5 times more costly, to be exact. For instance, GPT-4o charges $5.00 per million input tokens but $15.00 per million output tokens. Similarly, Claude 3 Opus charges $15.00 per million input tokens and a hefty $75.00 per million output tokens.

The size of the model also plays a critical role. Larger models, like a 70-billion parameter model, can cost 10 times more to operate compared to smaller models, such as a 7-billion parameter one. For example, GPT-4 Turbo is nearly 50 times more expensive than GPT-3.5 Turbo. Multimodal models, which process images, audio, or video alongside text, require even more computational resources, driving costs higher than text-only models.

Prompting strategies can also influence costs. Few-shot prompting, while effective, increases token usage. On the other hand, fine-tuning a model - despite its upfront costs - can reduce token consumption by enabling shorter, more efficient prompts. However, fine-tuned models often incur ongoing hosting fees, even when they’re not actively being used.

Infrastructure Costs in Serverless Platforms

Beyond token usage, the infrastructure itself contributes significantly to costs. Serverless platforms like AWS Lambda charge based on factors such as the number of requests, execution time, and memory allocation. For example, in AWS Lambda, CPU power scales with memory allocation, meaning that assigning more memory also increases CPU costs - even if you don’t fully utilize those resources.

Cold starts can also add to expenses. To avoid latency, you can use Provisioned Concurrency to keep functions warm, but this comes with a fixed hourly fee, regardless of actual usage. For LLM workloads, additional ephemeral storage beyond 512 MB costs $0.0000000309 per GB-second, while data retention is priced at $0.15 per GB-month.

Switching to Arm-based Graviton2 processors can save money and improve performance. These processors offer a 19% performance boost and a 20% cost reduction compared to x86 alternatives. For companies handling millions of inferences monthly, these savings add up. For example, a logistics company processing 7.44 million telemetry messages per month with a 512 MB machine learning model in Lambda would spend $249.49 per month on compute and requests alone. Having accurate cost data is essential, and observability tools provide the insights needed to avoid overspending.

Why Observability Matters for Cost Control

Without proper monitoring, managing costs becomes guesswork. Observability tools allow you to track key metrics like request volume, token usage, and resource consumption in real time. Even small adjustments to parameters like temperature or top_p can significantly impact response complexity and, consequently, the cost per request. Without visibility, these effects are hard to predict or control.

"It's vital to keep track of how often LLMs are being used for usage and cost tracking." - Ishan Jain, Grafana

A lack of observability can lead to unnecessary expenses. For example, tools like AWS Compute Optimizer use machine learning to analyze past usage patterns and recommend optimal memory configurations, helping to reduce waste. Observability also helps catch costly errors, such as recursive loops where a Lambda function writes to an S3 bucket that triggers it, creating an infinite loop. Some providers even charge for failed requests - such as 400 errors caused by content policy violations - making error monitoring a critical part of cost control.

Design Patterns for Cost-Efficient Serverless LLMs

Cutting costs while maintaining quality in serverless large language model (LLM) operations requires strategic design. The focus should be on smarter architecture - deciding which model handles specific tasks, optimizing prompts, and managing repeated requests more effectively.

Model Right-Sizing and Request Routing

One of the most effective ways to save on costs is by avoiding the use of high-cost models for tasks that smaller, more efficient models can handle. Instead of defaulting to a premium model like GPT-4o for every query, identify the smallest model capable of confidently delivering accurate results. This approach, often called model right-sizing, can dramatically lower expenses.

A tiered model strategy is particularly useful. For instance, simpler queries can be routed to cost-effective models like GPT-4o Mini (approximately $0.15 per million input tokens), while reserving premium models such as GPT-4o (around $5.00 per million input tokens) for complex reasoning tasks. This cascading setup can slash costs by as much as 85% while improving accuracy by 1.5%. Dynamic request routing, which uses a lightweight router model to classify requests based on complexity, can further reduce LLM usage by 37–46% and cut AI processing costs by 39%.

Services like Amazon Bedrock's Intelligent Prompt Routing take this concept a step further by automatically directing requests within a model family. This can reduce costs by up to 30% without compromising accuracy. Beyond savings, smaller models also deliver faster results, with token generation speeds up to 32–38% quicker for simpler tasks. For example, GPT-3.5 can generate tokens up to four times faster than GPT-4.

Prompt Optimization and Context Management

Token usage is a major cost driver, so crafting efficient prompts can lead to substantial savings. Tools that compress prompts - reducing a 2,000-token instruction set to just 100 tokens - can cut token usage by up to 30% without losing clarity.

Context caching is another game-changer. By storing frequently used prefixes, such as system instructions or large document sets, you can avoid reprocessing the same tokens repeatedly. Gemini's context caching, for example, can lower input token processing costs by up to 75%. In chat-based applications, summarizing conversation history every 10–15 exchanges helps keep the context window under 1,000 tokens, preventing unnecessary expenses.

For knowledge-heavy tasks, Retrieval-Augmented Generation (RAG) is indispensable. Instead of sending entire documents to the model, a vector database retrieves and transmits only the most relevant 200–500 token chunks, reducing context-related token usage by over 70%. Since output tokens are often 3–5 times more expensive than input tokens, it's crucial to set hard limits using the max_tokens parameter and adjust temperature settings to generate concise responses.

"Most LLM cost and latency problems are architectural, not prompt-related." – Alex Buzunov, GenAI Specialist

In addition to optimizing prompts, advanced LLM tools for caching and batching can further minimize processing overhead.

Caching, Batching, and Streaming Responses

Semantic caching, which uses vector embeddings to identify similar queries, is a powerful cost-saving tool. For example, if one user asks, "How to reset password", and another asks, "Password recovery process", the system can recognize the similarity and deliver the same cached response. This method can achieve cost reductions of 15–30%. Utilizing Lambda's /tmp for warmed environments or external stores like DynamoDB to share responses can further cut redundant token processing.

Batching requests is another effective strategy. By consolidating multiple requests into a single API call, you can spread out fixed overhead costs. For non-urgent tasks, batching can reduce overhead expenses by up to 90%. For text generation, batching 10–50 requests at a time works well, while for classification tasks, 100–500 requests may be ideal.

Streaming responses is also worth considering. APIs like InvokeModelWithResponseStream process text in chunks as it is generated, reducing perceived latency and allowing partial responses if a timeout occurs. Early stopping logic - halting token generation once a satisfactory response is reached - can cut output token usage by 20–40%. When paired with AWS Step Functions to check for cached responses in DynamoDB before invoking an LLM, you can avoid unnecessary compute costs altogether.

Cost Management for Specific Serverless Platforms

Each serverless platform comes with its own billing structure and cost considerations. Building on the earlier discussion of cost drivers and design patterns, let’s dive into strategies tailored for specific platforms.

AWS Lambda and Amazon Bedrock

AWS Lambda charges $0.20 per million requests, plus a duration fee based on memory allocation - roughly $0.0000166667 per GB-second for x86 in the US East region. To optimize costs, it's crucial to right-size memory allocation. Since Lambda ties CPU power directly to memory (e.g., 1,769 MB equals one vCPU), increasing memory for CPU-intensive tasks can reduce execution time and, in turn, lower costs.

For workloads with high request volumes, Lambda Managed Instances offer a cost-effective alternative by using EC2 pricing models. For instance, in January 2026, a service processing 100 million requests per month (average duration: 200ms) utilized Lambda Managed Instances on m7g.xlarge (Graviton3). By applying a 3-year Compute Savings Plan (72% discount), monthly costs were reduced to $160.36. This included $20 in request charges, $91.40 for EC2 instances, and $48.96 in management fees.

Amazon Bedrock introduces Intelligent Prompt Routing, which optimizes costs and response times by directing requests within a model family. Internal AWS testing in January 2026 showed that routing within the Amazon Nova model family resulted in 35% cost savings and a 9.98% latency improvement. Similarly, routing within the Anthropic family yielded 56% cost savings and a 6.15% latency benefit. Additionally, the open-source AWS Lambda Power Tuning tool can help determine the ideal memory allocation to balance cost and execution time.

Serverless GPU Inference Platforms

Optimizing GPU-based platforms requires a different approach compared to traditional CPU-focused environments. The choice of inference type depends on traffic patterns:

Real-time inference: Ideal for low-latency, predictable traffic; costs are based on instance hours.

Serverless inference: Best for spiky traffic; charges are based on request duration.

Asynchronous inference: Suitable for large payloads (up to 1GB) and can scale to zero during idle periods.

To maximize efficiency, consider using multi-model endpoints and aim for high target utilization for steady workloads. These strategies improve hardware usage and reduce idle costs. For consistent workloads, configuring maximum concurrency (up to 64 per vCPU for managed instances) ensures a balance between resource use and throughput while avoiding throttling or scaling delays.

Setting Cost-Based Limits and Guardrails

As with any cost management strategy, maintaining continuous observability is key. Cost controls can prevent unexpected spikes in expenses. For example, using custom tags (like tenantId) and inference profiles allows tracking costs by user or tenant. AWS supports up to 1,000 inference profiles per region, enabling precise billing and budget caps. Circuit breakers that monitor CloudWatch metrics, such as InputTokenCount, can automatically disable access for users who exceed financial thresholds.

Amazon Bedrock Guardrails, which cost $0.75 per 1,000 text units for content filters and $0.10 per 1,000 text units for PII filters, help enforce cost controls. Input length constraints, using regex or static filters, can strip out Unicode tricks and enforce character limits, preventing exploits that inflate compute time. Activating Cost Allocation Tags in the billing console also enables tenant-specific data visibility in Cost Explorer. Setting automated budgets ensures you’re notified before spending exceeds predefined limits.

Using Observability and Feedback for Continuous Optimization

Tracking Cost and Quality Metrics

Observability tools transform unexpected monthly cost spikes into actionable, real-time insights. Instead of waiting for end-of-month reports, you can monitor expenses at a granular level - tracking costs by model, prompt, workspace, or even individual users. This level of detail helps pinpoint "cost leaks", such as uncompressed long contexts, aggressive retry patterns, unbounded recursive tool usage in agents, or silent model version upgrades.

By linking financial data to quality metrics - like cost per request, token efficiency (tokens used per accurate output), and cache hit rates - you can better understand your return on investment (ROI). For example, caching responses to frequently asked questions can slash costs by 30% to 90%. Platforms like Latitude offer built-in observability tools that let teams monitor large language model (LLM) behavior in real time, gather structured feedback from domain experts, and run evaluations to track both quality and cost over time. This approach ensures you’re not just cutting expenses but making smarter spending decisions. These insights pave the way for a more collaborative and informed financial strategy.

FinOps Principles for Serverless LLMs

Financial operations (FinOps) fosters shared accountability among IT, finance, and AI development teams. Instead of letting costs quietly accumulate, FinOps provides a framework where every dollar is tied to a specific project, team, or feature. This prevents unmonitored usage, such as when API keys are reused without clear ownership.

Set up tiered alerts to catch budget overruns early. For example, you can configure notifications in Slack at 60% of your budget (to notify owners), 85% (to escalate to the on-call team), and 100% (to enforce hard caps and disable non-essential workflows). Use cost allocation tags - like team, project, env, and model_tier - on every request to enable precise tracking in your billing dashboard. If your provider doesn’t support flexible tagging, consider using an LLM proxy between your application and the API to automatically add metadata for accurate cost attribution. With these financial controls in place, you can focus on refining workflows for sustained efficiency.

Iterative Workflow Refinement

Continuous optimization connects observability, financial controls, and actionable improvements into a seamless cycle. Start by implementing multi-level tracking: log request counts, monitor token usage, and link costs to specific sessions or experiments. Since every word translates into tokens, using concise phrasing can help reduce expenses.

Major cost and latency improvements come from architectural changes rather than endless prompt adjustments. Use observability data to refine task routing and model selection continuously. Optimize caching strategies and context management based on performance metrics. A/B test changes and scale them only if quality remains consistent during trials. This methodical approach ensures each optimization delivers measurable benefits while maintaining a seamless user experience.

Conclusion

Running large language models (LLMs) serverlessly doesn’t have to drain your budget - careful planning makes all the difference. As highlighted earlier, the key to managing costs and improving latency lies in smart architectural choices. The real savings come from strategies like efficient routing, appropriately sized resources, and constant monitoring - not just tweaking prompts endlessly.

For instance, strategic model routing can cut AI processing costs by 39%, while tiered strategies can reduce expenses by as much as 85%. Caching alone can lower costs by 15–30% and eliminate up to 70% of redundant API calls. Pair these with serverless optimizations like Graviton2 processors, which offer 20% lower costs, and batch processing discounts ranging from 30–50%, and the savings can add up fast. Altogether, these optimizations can slash LLM costs by up to 80% without compromising performance.

The real challenge isn’t discovering these techniques - it’s applying them consistently. Tools like Latitude simplify this process by offering built-in observability and collaborative prompt engineering features. These tools allow you to track expenses by model, prompt, or user, helping teams refine features before they hit production. This aligns with earlier insights on how serverless strategies can provide predictable cost control.

As usage grows, maintaining these savings requires ongoing vigilance. Inference costs rise linearly with token throughput, and even a well-optimized system can become costly without regular updates. To sustain efficiency, adopt continuous cost-monitoring practices. Use tiered alerts, apply cost allocation tags, and treat optimization as an ongoing process. Even with potential savings of up to 80%, keeping costs in check demands consistent measurement and fine-tuning as your application evolves.

Start small - implement a proven strategy like prompt routing or response caching. Measure the results, refine the approach, and scale up. By combining serverless flexibility, efficient architecture, and collaborative tools, you can build LLM deployments that are both scalable and cost-effective.

FAQs

What are the best ways to manage token costs when scaling large language models?

To keep token costs under control, start by refining your prompts. Aim for concise and well-structured prompts - for example, combine multiple fields into a single JSON request. Leverage retrieval-augmented generation to cut back on unnecessary token usage, and cache frequently used outputs to avoid repeating the same processes.

You can also explore parameter-efficient fine-tuning, which helps lower resource consumption while still delivering strong performance. By adopting modular prompt engineering and reusing outputs wherever possible, you can trim expenses without sacrificing functionality or scalability.

How can I manage costs when scaling LLMs with serverless infrastructure?

To keep costs under control while scaling LLMs with serverless infrastructure, start by optimizing your compute resources. Select the smallest memory allocation that still meets your performance needs, and explore ARM-based processors to save on costs and execution time. For workloads with unpredictable spikes, serverless inference is a better option than always-on endpoints, as it lets you pay only for the time each request takes. You can also cut down on cold-start delays by caching model artifacts and setting up workflows around event-driven triggers, so resources are activated only when necessary.

Keeping an eye on operations is just as important. Tools like Prometheus or CloudWatch can help you monitor latency, throughput, and memory usage while setting budget alerts to avoid surprises. Reduce unnecessary logging by sticking to essential logs and shortening retention periods to save on storage. For tasks like batch processing, using asynchronous patterns can help balance demand and prevent resource overload. If collaboration is part of your workflow, Latitude’s open-source platform can assist your team in refining prompt engineering and deployment settings, ensuring your LLM service runs efficiently without overspending.

Why is it important to monitor costs when scaling LLMs with serverless frameworks?

Keeping an eye on costs is a must when scaling LLMs with serverless frameworks. It gives real-time visibility into resource usage, helping teams pinpoint which models, prompts, users, or workflows are consuming the most tokens.

With this kind of detailed tracking, teams can spot spending trends and tackle inefficiencies head-on. This not only helps avoid surprise cost spikes but also ensures budgets stay under control without compromising performance.