Explore key qualitative metrics for evaluating AI prompts, focusing on clarity, relevance, and coherence to enhance user experience.

César Miguelañez

Feb 11, 2025

When evaluating prompts for AI models, qualitative metrics focus on clarity, relevance, and coherence, the key factors behind a better user experience and task alignment. Unlike quantitative methods, which measure technical aspects like speed or token usage, qualitative metrics assess how well prompts meet human expectations. Here's a quick overview:

Clarity: Clear instructions improve compliance by 87%.

Relevance: Ensures outputs match the task's intent using semantic similarity tools.

Coherence: Logical, consistent responses increase user satisfaction by 32%.

Quick Comparison

| Metric | What It Measures | Impact on Performance |
|---|---|---|
| Clarity | Clear, actionable tasks | Reduces follow-up questions by 38% |
| Relevance | Task alignment | Boosts semantic match accuracy |
| Coherence | Logical flow, consistency | Improves user satisfaction by 32% |

Combining human reviews with AI tools ensures better evaluations, reducing bias and improving prompt quality by up to 40%. Platforms like Latitude streamline this process, offering tools for version control, inline commenting, and automated checks. Want better prompts? Focus on these three metrics and use a hybrid evaluation approach.

Core Qualitative Metrics

Three key metrics are used to evaluate prompt quality: clarity, relevance, and coherence.

Measuring Prompt Clarity

A clear prompt gives direct, unambiguous instructions. Research highlights that prompts with numbered steps lead to 87% better compliance compared to vague instructions. The main elements of clarity are well-defined tasks, clear boundaries, and specific output formatting.

Here's a breakdown of how clarity impacts performance:

| Component | Evaluation Criteria | Impact on Performance |
|---|---|---|
| Task Definition | Clear and actionable tasks | Reduces follow-up questions by 38% |
| Scope Constraints | Defined time or topic limits | Boosts relevance by 42% |
| Format Requirements | Clear output expectations | Increases compliance by 87% |

Clear prompts create a strong foundation for staying on track with task goals.
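
As a rough illustration, the three clarity components can be approximated with a simple checklist. This is a minimal sketch, not a validated scoring method: the function name `clarity_checklist` and the keyword patterns are assumptions chosen for the example, and a real review would still need human judgment.

```python
import re


def clarity_checklist(prompt: str) -> dict[str, bool]:
    """Heuristic checks for the clarity components (illustrative patterns only)."""
    return {
        # Task definition: at least one line that starts with "1." or "1)".
        "numbered_steps": bool(
            re.search(r"^\s*\d+[.)]\s+\S", prompt, re.MULTILINE)
        ),
        # Format requirements: the prompt names an output format.
        "format_requirement": bool(
            re.search(r"\b(format|json|table|bullet|list|markdown)\b", prompt, re.IGNORECASE)
        ),
        # Scope constraints: the prompt sets an explicit limit.
        "scope_constraint": bool(
            re.search(r"\b(at most|no more than|only|limit(ed)? to|within)\b", prompt, re.IGNORECASE)
        ),
    }


structured = (
    "1. Summarize the report.\n"
    "2. Return the result as a JSON object.\n"
    "Use no more than 100 words."
)
vague = "Tell me about the report."

print(clarity_checklist(structured))
print(clarity_checklist(vague))
```

A checklist like this is only a first-pass filter: it can flag prompts that lack obvious structure before they reach a human reviewer.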

Testing Prompt Relevance

Relevance testing checks that the output aligns with the intended objective. Teams often use OpenAI's text-embedding-3-small model to embed the task description and the response, then compare the two vectors for semantic similarity. This gives a quantitative signal of how well the response matches the task while keeping qualitative oversight intact.
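
Semantic similarity between two embeddings is commonly computed as cosine similarity. The sketch below assumes the embedding vectors have already been obtained from whichever model the team uses; the toy three-dimensional vectors, the helper name `is_relevant`, and the 0.85 default (borrowed from the relevance target in the FAQ table) are illustrative assumptions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (range -1 to 1)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def is_relevant(task_vec: Sequence[float], response_vec: Sequence[float],
                threshold: float = 0.85) -> bool:
    """Flag a response as relevant when similarity meets the (assumed) threshold."""
    return cosine_similarity(task_vec, response_vec) >= threshold


# Toy vectors standing in for real embeddings, which have far more dimensions.
task = [0.2, 0.7, 0.1]
on_topic = [0.25, 0.65, 0.05]
off_topic = [0.9, 0.05, 0.4]

print(is_relevant(task, on_topic))
print(is_relevant(task, off_topic))
```

The threshold is a tuning decision: a score near the cutoff is a good candidate for human review rather than automatic acceptance or rejection.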

Analyzing Prompt Coherence

Coherence ensures the response flows logically and maintains consistency. Data shows coherence influences 32% of user satisfaction, compared to 21% for clarity.

For example, a healthcare provider used a detailed coherence evaluation system combining expert reviews and GPT-4 scoring. This resulted in a 54% drop in clinical guideline misinterpretations. Their framework included:

| Coherence Aspect | Measurement Method | Success Indicator |
|---|---|---|
| Logical Flow | Alignment between input and output | Improved cross-reference accuracy |
| Terminology | Consistent term usage | Higher precision in word choice |
| Detail Hierarchy | Structured information flow | Better information organization |

In fields where precision is critical, structured coherence checks are essential. Tools like DeepEval's open-source framework help teams maintain high-quality outputs while systematically evaluating prompt performance.

Evaluation Methods and Tools

Assessing prompts effectively today often involves combining human expertise with AI-driven tools for a balanced and thorough quality check.

Expert Review vs. AI-Assisted Testing

Both expert reviews and AI-assisted testing have their strengths when it comes to evaluating prompts. Stanford NLP studies reveal that while human reviewers are better at noticing subtle contextual details, their evaluations can vary by up to 40% between individuals.

| Evaluation Method | Strengths | Limitations | Best Use Cases |
|---|---|---|---|
| Expert Review | Deep understanding of context, cultural awareness | Prone to subjective bias, time-consuming | Complex prompts, critical applications |
| AI-Assisted Testing | Consistent results, fast processing, scalable | May overlook nuanced details | Large-scale evaluations, standardized checks |
| Hybrid Approach | 92% accuracy, cost-efficient | Requires initial setup | Production environments |

For instance, a financial services company paired expert panels with automated tools, achieving 45% faster development cycles and boosting coherence scores from 3.2 to 4.7/5. This demonstrates the effectiveness of a combined approach:

"The combination of human expertise and AI-powered evaluation tools has revolutionized our prompt development process. We've seen a 68% reduction in hallucination rates while maintaining high-quality outputs," shared a senior prompt engineer involved in the project.

This hybrid model is increasingly being implemented through specialized platforms, enabling teams to focus on core metrics such as clarity and coherence.

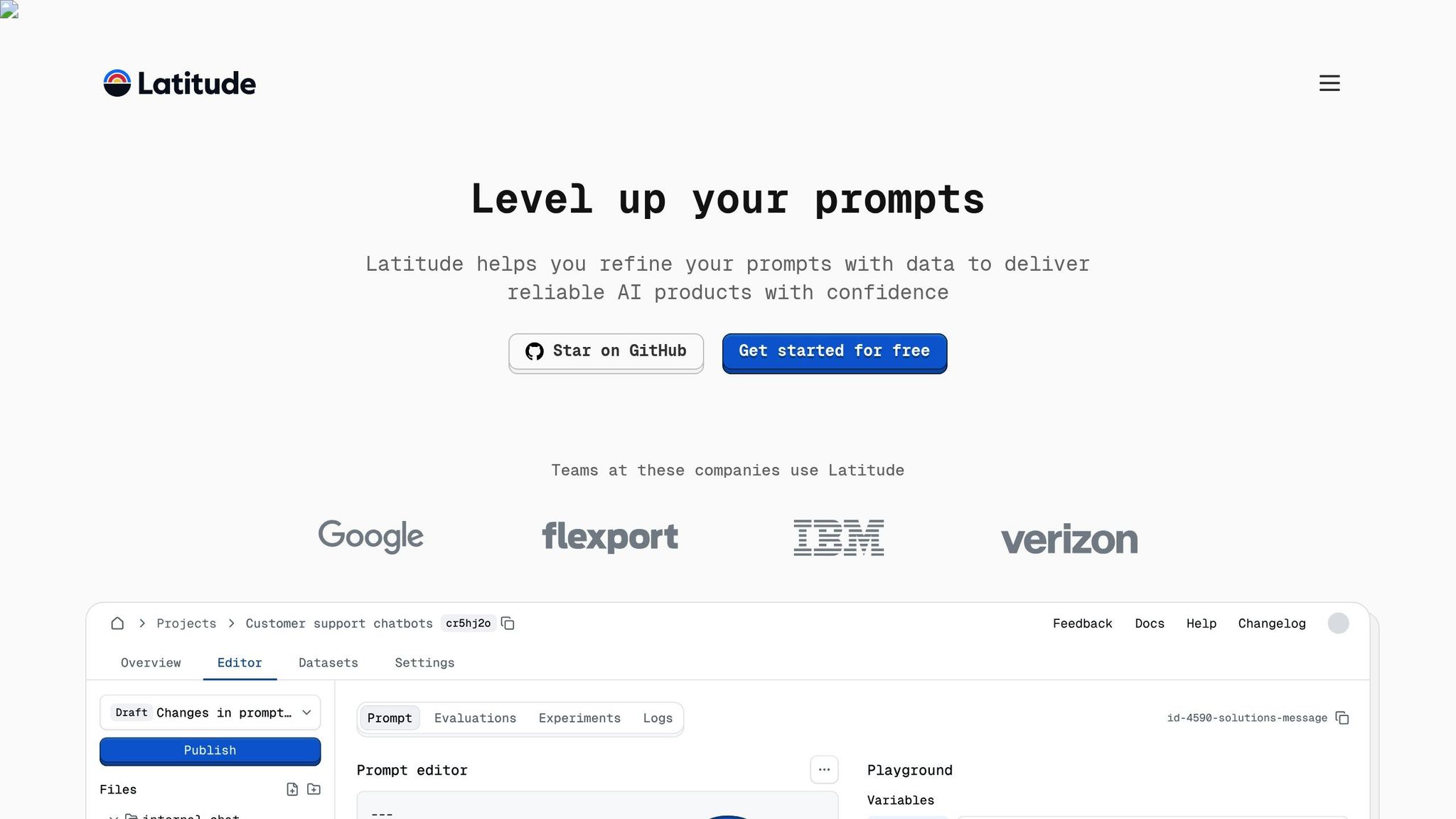

Latitude Platform Overview

Latitude's open-source platform is designed to streamline collaborative prompt engineering, addressing challenges with tools that prioritize three main metrics: clarity, relevance, and coherence.

The platform includes an evaluation dashboard that tracks performance across different prompt versions:

| Feature | Function | Impact |
|---|---|---|
| Version Control | Tracks iteration history | Ensures clear audit trails |
| Inline Commenting | Allows expert annotations | Enhances context retention |
| Shared Library | Includes 1500+ validated prompts | Speeds up development |
| Automated Bias Detection | Uses consensus modeling | Flags 83% of biased evaluations |

Organizations using Latitude report a 40% improvement in prompt quality. These results are supported by systematic methods such as:

Standardized 5-point rating rubrics

Blind review protocols

Statistical normalization

AI-driven anomaly detection
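
To make the statistical normalization step concrete, one common approach is to convert each reviewer's scores to z-scores so that a habitually strict reviewer and a habitually lenient one become comparable. This is a minimal sketch under that assumption; the source does not say which normalization is used, and the function name and sample data are hypothetical.

```python
from statistics import mean, pstdev


def normalize_scores(scores_by_reviewer: dict[str, list[float]]) -> dict[str, list[float]]:
    """Convert each reviewer's raw rubric scores to z-scores (assumed method)."""
    normalized = {}
    for reviewer, scores in scores_by_reviewer.items():
        mu = mean(scores)
        sigma = pstdev(scores)
        if sigma == 0:
            # A reviewer who gives every prompt the same score carries no ranking signal.
            normalized[reviewer] = [0.0 for _ in scores]
        else:
            normalized[reviewer] = [(s - mu) / sigma for s in scores]
    return normalized


# Two reviewers rank the same three prompts identically,
# but one scores a full point lower on the 5-point rubric.
raw = {
    "strict_reviewer": [2, 3, 4],
    "lenient_reviewer": [3, 4, 5],
}
normalized = normalize_scores(raw)
print(normalized)
```

After normalization both reviewers produce the same values, which shows that their disagreement was about scale rather than about which prompts are better.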

Common Issues and Solutions

Evaluation tools are great for assessing prompts, but they often come with their own set of challenges. Tackling these issues requires targeted strategies.

Reducing Subjective Bias

Subjective bias can disrupt assessments, especially when it comes to clarity and coherence - two metrics that rely heavily on human judgment. Research shows that individual reviewers can vary in their assessments by as much as 40%. To address this, Microsoft's Azure ML framework introduced a structured scoring system with clearly defined levels.

| Bias Type | Solution | Results |
|---|---|---|
| Domain Expertise Bias | Cross-functional review panels | 40% fewer bias-related flags |
| Writing Style Preference | Standardized rubrics with examples | 35% increase in reviewer agreement |
| Contextual Interpretation | AI-assisted pre-scoring | 60% faster review times |

By combining human expertise with AI tools, organizations have managed to achieve 98% accuracy while cutting down evaluation time significantly.

Standardizing Review Process

Consistency is key when applying metrics like clarity, relevance, and coherence. Standardization ensures every team evaluates prompts the same way, improving both fairness and efficiency.

Three critical components for standardization:

Calibration Protocols: Regular sessions using gold-standard examples to align reviewers.

Metric Definition Framework: Clearly defined metrics that reduce conflicts by 72%.

Performance Monitoring: Tools like Portkey's system track deviations, achieving 92% consistency.

| Process Component | Implementation Method | Success Metric |
|---|---|---|
| Evaluator Training | Weekly calibration workshops | 35% better reviewer agreement |
| Scoring Guidelines | Version-controlled documentation | 50% faster conflict resolution |
| Quality Control | AI-driven consistency checks | 92% alignment among evaluators |

Organizations that adopt these methods report noticeable gains in both the quality and speed of their evaluations.
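
Reviewer agreement can be tracked with a chance-corrected statistic such as Cohen's kappa. The sketch below is one possible way to measure it for two reviewers; the source does not specify which agreement measure the cited figures rely on, and the sample ratings are made up for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(ratings_a: Sequence[Hashable], ratings_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two reviewers rating the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both reviewers must rate the same non-empty set of items")
    n = len(ratings_a)
    # Observed agreement: share of items where both gave the same rating.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Expected agreement by chance, from each reviewer's rating frequencies.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used a single identical rating throughout
    return (observed - expected) / (1 - expected)


# Hypothetical 5-point rubric scores from two reviewers on six prompts.
reviewer_1 = [4, 4, 3, 5, 2, 4]
reviewer_2 = [4, 3, 3, 5, 2, 5]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))
```

Running this before and after a calibration workshop gives a simple way to see whether the session actually moved reviewers closer together.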

Summary and Next Steps

Main Points

Modern evaluation frameworks now focus on clarity, relevance, and coherence, leading to measurable improvements in performance. Qualitative prompt evaluation has moved from subjective reviews to structured methods, with studies reporting a 30% boost in task performance when prompts are optimized.

| Evaluation Component | Impact | Efficiency Gain |
|---|---|---|
| Standardized Frameworks | Greater consistency | 28% improvement in bias detection |

Future Developments

New advancements are enhancing hybrid evaluation methods, introducing tools and practices that improve collaboration and maintain high standards. Latitude's platform is a prime example, allowing domain experts and engineers to work together efficiently while upholding production-level quality.

Here are three key trends shaping the future of qualitative prompt evaluation:

AI Explanation Systems

These systems are tackling current challenges in bias detection by using advanced decision-mapping techniques. This has led to a 28% improvement in identifying biases. Organizations can now better understand the reasoning behind AI responses.

Integrated Evaluation Frameworks

Mixed-method approaches are emerging, combining qualitative insights with quantitative data. This integration keeps evaluations efficient while capturing a more complete picture.

Collaborative Assessment Platforms

New platforms are enabling real-time collaboration between experts and engineers. This teamwork strengthens evaluation processes, making them more reliable and effective.

Looking ahead, evaluation tools will aim to combine human expertise with technological precision, ensuring thorough and efficient assessments.

FAQs

Here are answers to common questions about tackling challenges related to the core metrics:

How can you measure how effective a prompt is?

Effectiveness can be gauged by combining automated tools with expert reviews. Start by defining clear objectives and use iterative testing to refine prompts. This approach ties in with the structured checks outlined in Evaluation Methods.

For example, a fintech company increased its FAQ accuracy from 72% to 89% by conducting weekly reviews and tracking performance data.

What are the key metrics for evaluating prompt effectiveness?

Evaluating prompts involves five key metrics, each paired with a measurable target, which together create a clear assessment framework:

| Metric | Purpose | Target |
|---|---|---|
| Relevance | Matches query intent | Semantic similarity > 85% |
| Coherence | Checks logical flow | 4+ on a 5-point scale |
| Task Alignment | Ensures instruction is met | > 90% format adherence |
| Bias Score | Detects demographic bias | < 5% bias presence |
| Consistency | Handles edge cases well | 85% consistency rate |
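
The targets in the table above can be encoded as a simple pass/fail check. This is a minimal sketch: the metric keys, the `failing_metrics` helper, and the choice to express percentages as fractions are assumptions, and the consistency target is read as "at least 85%" because the table does not state a comparison.

```python
import operator

# Targets from the table; percentages are expressed as fractions (0.85 = 85%).
TARGETS = {
    "relevance": (operator.gt, 0.85),       # semantic similarity > 85%
    "coherence": (operator.ge, 4.0),        # 4+ on a 5-point scale
    "task_alignment": (operator.gt, 0.90),  # > 90% format adherence
    "bias_score": (operator.lt, 0.05),      # < 5% bias presence
    "consistency": (operator.ge, 0.85),     # assumed to mean at least 85%
}


def failing_metrics(scores: dict[str, float]) -> list[str]:
    """Return the metrics that miss their target; a missing score counts as a miss."""
    failures = []
    for name, (compare, threshold) in TARGETS.items():
        if name not in scores or not compare(scores[name], threshold):
            failures.append(name)
    return failures


# Hypothetical evaluation results for one prompt version.
results = {
    "relevance": 0.91,
    "coherence": 3.5,
    "task_alignment": 0.95,
    "bias_score": 0.08,
    "consistency": 0.88,
}
print(failing_metrics(results))
```

Keeping the thresholds in one table-like structure makes it easy to adjust targets per project without touching the checking logic.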

How do you assess the quality of a prompt?

Evaluating quality involves combining automated tools with expert reviews. Studies show that using standardized rubrics can reduce scoring inconsistencies by 40%. To maintain quality over time, use version tracking and automated regression testing to spot and address issues.
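
One way to approach automated regression testing is to score a fixed set of test cases for each prompt version and flag any case whose score drops. The sketch below assumes per-case scores are already available; the function name, the test case IDs, and the 0.02 tolerance are hypothetical.

```python
from typing import Optional


def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.02,
) -> dict[str, tuple[float, Optional[float]]]:
    """Return test cases where the new prompt version scores worse than the baseline.

    A case missing from the candidate run is reported with a new score of None.
    """
    regressions = {}
    for case_id, old_score in baseline.items():
        new_score = candidate.get(case_id)
        # Small drops within the tolerance are treated as noise, not regressions.
        if new_score is None or new_score < old_score - tolerance:
            regressions[case_id] = (old_score, new_score)
    return regressions


# Hypothetical scores for two versions of the same prompt.
scores_v1 = {"refund_policy": 0.91, "reset_password": 0.88, "close_account": 0.80}
scores_v2 = {"refund_policy": 0.92, "reset_password": 0.79, "close_account": 0.79}

regressions = find_regressions(scores_v1, scores_v2)
print(regressions)
```

Paired with version tracking, a check like this can run on every prompt change so that a fix for one case does not silently degrade another.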