Guide to measuring LLM instruction-following: key metrics (DRFR, Utility Rate, Meeseeks), benchmarks (IFEval, AdvancedIF, WildIFEval), and evaluation workflows.

César Miguelañez

Jan 26, 2026

Large Language Models (LLMs) need to follow instructions accurately to perform tasks reliably. This involves understanding user intent, meeting specific content requirements, and adhering to formatting rules. However, many models fail to meet these expectations consistently, with some achieving less than 50% compliance on benchmarks.

Here’s what you need to know:

Key Metrics: Metrics like Utility Rate, DRFR, and Meeseeks Score evaluate how well LLMs follow instructions. Each has strengths for different tasks, from simple outputs to complex, multi-step prompts.

Benchmarks: Tools like IFEval, AdvancedIF, and WildIFEval test LLMs on various instructions, including multi-turn conversations and nuanced formatting.

Evaluation Process: Combine rule-based checks, human feedback, and iterative improvements to refine a model’s performance.

Why it matters: Instruction-following is critical for deploying LLMs in areas like customer support, content creation, and professional applications. By measuring and improving this capability, you can ensure dependable outputs and better user experiences.

Metrics for Evaluating Instruction-Following

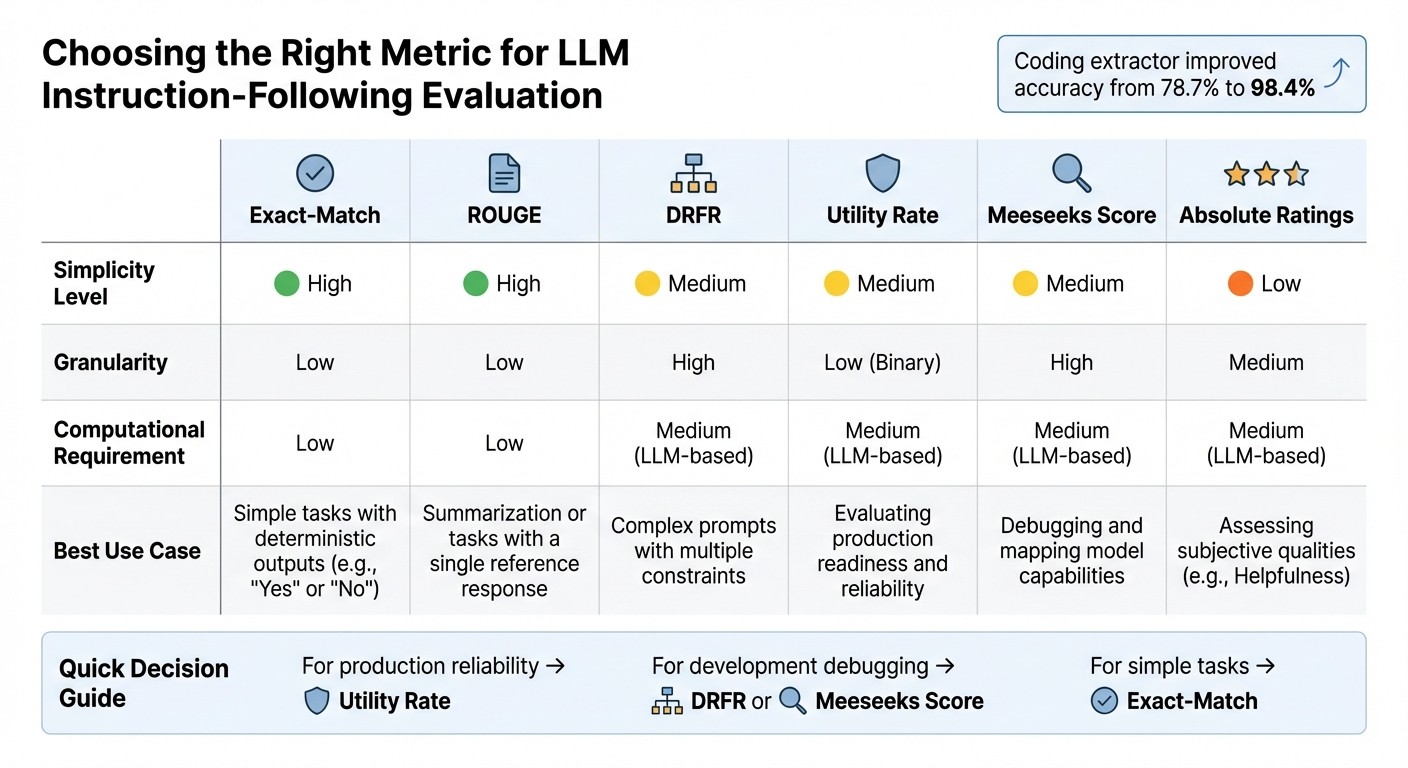

LLM Instruction-Following Metrics Comparison: Simplicity, Granularity, and Best Use Cases

Common Metrics Overview

Choosing the right metric is essential when evaluating how well large language models (LLMs) follow instructions. Traditional metrics like Exact-Match and ROUGE focus on matching strings or n-grams between generated and reference text. While these work for straightforward tasks, they struggle with open-ended scenarios where multiple valid responses exist.

Modern benchmarks have introduced more nuanced metrics. For instance, Decomposed Requirements Following Ratio (DRFR) breaks down multi-constraint instructions into simpler components and calculates the percentage of requirements the model meets successfully. Utility Rate takes a stricter approach - it measures the share of responses that meet all requirements in a prompt, making it ideal for assessing outputs intended for production. The Meeseeks Score, also known as Average Criteria Score, offers a balance by averaging scores across specific tags, capturing partial successes and highlighting areas for improvement.

For subjective evaluations, Absolute Ratings frameworks like HELM Instruct use a scoring system (typically 1–5) to assess dimensions such as Helpfulness, Completeness, Conciseness, and Understandability. Additionally, rule-verifiable metrics - used in benchmarks like IFEval - check for adherence to specific constraints, such as word count or the inclusion of required keywords.

"DRFR breaks down complex instructions into simpler criteria, facilitating a detailed analysis of LLMs' compliance with various aspects of tasks." - Yiwei Qin, InFoBench

Metric Comparison

Each metric serves a distinct purpose. While Exact-Match and ROUGE are straightforward and fast, they don’t capture semantic understanding. On the other hand, DRFR and Meeseeks Score provide detailed insights, making them ideal for diagnosing model weaknesses during development. Utility Rate focuses on whether a response is ready for real-world use.

Metric | Simplicity | Granularity | Computational Requirement | Best Use Case |

|---|---|---|---|---|

Exact-Match | High | Low | Low | Simple tasks with deterministic outputs (e.g., "Yes" or "No") |

ROUGE | High | Low | Low | Summarization or tasks with a single reference response |

DRFR | Medium | High | Medium (LLM-based) | Complex prompts with multiple constraints |

Utility Rate | Medium | Low (Binary) | Medium (LLM-based) | Evaluating production readiness and reliability |

Meeseeks Score | Medium | High | Medium (LLM-based) | Debugging and mapping model capabilities |

Absolute Ratings | Low | Medium | Medium (LLM-based) | Assessing subjective qualities (e.g., Helpfulness) |

This table underscores the strengths and trade-offs of each metric. Notably, using a "coding extractor" - where an LLM generates code to extract relevant parts of a response - has significantly improved evaluation accuracy. In fact, this method increased accuracy from 78.7% to 98.4% compared to standard extraction techniques.

How to Choose the Right Metric

Selecting the best metric depends on your goals. For tasks where reliability is paramount - such as deploying an LLM in a professional setting - Utility Rate is invaluable. It ensures you know the percentage of outputs that fully meet all requirements.

During development, DRFR and Meeseeks Score are excellent for identifying specific areas of improvement, such as formatting or adherence to word limits. For multi-turn interactions, iterative feedback can significantly improve Utility Rate - by as much as 50 percentage points.

For simpler, deterministic tasks, Exact-Match is sufficient. However, for more complex, multi-step instructions or open-ended creative tasks, rubric-based metrics aligned with expert judgment offer deeper insights. It’s worth noting that even the most advanced models, like GPT-5 and Grok-4, achieve only 74% to 77.9% on benchmarks like AdvancedIF. This highlights the ongoing need for improvement in instruction-following capabilities.

Benchmarks and Datasets for Instruction-Following

Common Benchmarks

The benchmarks you select depend on your testing objectives, such as those found on the ultimate LLM leaderboard. IFEval (Instruction-Following Eval) zeroes in on "verifiable instructions" - criteria that can be objectively measured, like word counts or specific keyword requirements. Google Research offers 25 types of verifiable instructions across roughly 500 prompts. This makes IFEval a solid choice when you need reproducible results without the risk of evaluator bias.

InFoBench takes a different approach by breaking instructions into 2,250 decomposed questions. This allows you to calculate the Decomposed Requirements Following Ratio (DRFR). It’s a great tool for pinpointing exactly where your model excels or struggles with multi-constraint instructions.

For a more advanced evaluation, AdvancedIF sets a high bar. Introduced in November 2025 by researchers from Meta Superintelligence Labs, Princeton University, and CMU, it features over 1,600 expert-crafted prompts. These prompts focus on three challenging areas: complex instructions (with six or more requirements per prompt), multi-turn conversations where context carries over, and system prompt steerability. Its complexity makes it invaluable for testing advanced model capabilities.

"AdvancedIF is the only [benchmark] whose prompts and rubrics are manually created by human experts and has dialogs with multi-turn conversation and system prompts." - Yun He et al., Meta Superintelligence Labs

LLMBar serves a unique role as a meta-evaluation benchmark. It tests whether a language model can reliably act as a judge. With 419 instances pairing instructions with one correct and one flawed output, it includes adversarial subsets to challenge the model's ability to discern quality. This is especially useful when using LLMs to evaluate other LLMs.

For iterative, multi-turn evaluations, Meeseeks simulates human-LLM interactions. Created by Jiaming Wang from Meituan in early 2025, it evaluates models using 38 capability tags across three dimensions: Intent Recognition, Granular Content Validation, and Output Structure Validation. For example, OpenAI's o3-mini (high) model improved its utility rate from 58.3% in the first round to 78.1% by the third round through self-correction.

These benchmarks provide a strong foundation for robust evaluation. Let’s dive into the datasets that complement them.

Evaluation Datasets

Evaluation datasets build on these benchmarks by addressing both measurable and nuanced constraints. The choice often comes down to verifiable versus soft constraints. IFEval focuses on objective rules, while WildIFEval captures real-world user interactions. As the largest dataset of its kind, WildIFEval includes 77,000 user instructions and 24,731 unique constraints, covering "soft" criteria like style, tone, and quality that rule-based systems struggle to evaluate.

"WildIFEval is uniquely representative of natural user interactions at scale; it stands out as the largest available dataset, consisting of real‐world user instructions given to LLMs." - Gili Lior, The Hebrew University of Jerusalem

Another key distinction is between single-turn and multi-turn evaluations. Traditional benchmarks like IFEval and InFoBench focus on one-shot responses, but real-world applications often involve ongoing conversations. AdvancedIF includes a multi-turn category where, for example, a model might be tasked with recommending 5–8 hikes, providing 1–3 brief paragraphs for each, and sorting them by completion time in a specific "Xhrs Xmins" format - all while maintaining context across turns.

WildIFEval organizes constraints into eight categories: Include/Avoid, Editing, Ensure Quality, Length, Format and Structure, Focus/Emphasis, Persona and Role, and Style and Tone. This classification helps identify where your model performs well and where it needs improvement. For niche areas like healthcare or creative writing, WildIFEval’s diverse dataset fills gaps that synthetic datasets often miss.

Your dataset choice should align with your deployment needs. Use IFEval for standardized and reproducible benchmarking. Opt for WildIFEval to test against the "long tail" of diverse user inputs. AdvancedIF is best for evaluating complex, high-difficulty scenarios with interdependent instructions. And if you’re testing how well your model self-corrects after feedback, Meeseeks offers a practical solution - potentially boosting utility rates by up to 50 percentage points across three rounds.

How to Evaluate Instruction-Following: Step-by-Step

Step 1: Select Benchmarks and Metrics

Start by identifying the right benchmarks and metrics for your evaluation. For tasks with clear, measurable outcomes - like word counts or formatting rules - use verifiable metrics to ensure consistency and avoid evaluator bias. On the other hand, for open-ended tasks, such as crafting marketing copy with specific tonal nuances, a rubric-based evaluation is more suitable. These often require either human reviewers or an LLM judge to assess the quality.

Your choice here is critical - it lays the groundwork for generating accurate responses and conducting meaningful analysis. For example, a customer service chatbot might need strict adherence to formatting rules, while creative tasks demand flexibility and nuanced judgment.

Step 2: Generate and Analyze Model Outputs

Once benchmarks are set, generate model outputs using standardized prompt templates. Before diving into the evaluation, ensure you isolate the response from any filler text. Using code-based extraction tools can significantly improve the accuracy of your assessment.

When dealing with complex prompts, break them down into smaller, verifiable criteria. For instance, if your prompt asks for "5–8 hiking recommendations with 1–3 paragraphs each, sorted by completion time", assess each requirement - like the number of recommendations, paragraph length, and sorting - separately. This granular approach helps pinpoint exactly where the model is falling short. It may excel in content creation but fail to adhere to sorting instructions, for example.

If you're using LLM judges for scoring, make sure they provide chain-of-thought reasoning. This helps clarify their decisions and ensures that errors are genuine rather than artifacts of evaluation bias. Be mindful of common biases, such as favoring longer responses (verbosity bias) or giving preference to the first option in a comparison (position bias). Regularly calibrate automated scores with expert annotations to keep evaluations reliable.

Step 3: Iterate with Feedback Loops

Use the insights from your analysis to improve performance through structured feedback. Evaluation should be an ongoing process. Set clear benchmarks, like achieving a coherence score of 4 out of 5, and track progress over multiple iterations. For tasks involving multi-turn interactions, measure the model's ability to self-correct. For example, improvements in Utility Rate from 58.3% to 78.1% have been observed through iterative feedback.

If you're managing a production system, implement real-time monitoring to catch performance dips early and address specific weaknesses. A great example comes from November 2025, when Meta Superintelligence Labs used the RIFL pipeline to enhance Llama 4 Maverick's performance on AdvancedIF by 6.7%. They achieved this by leveraging reward shaping based on rubric-based verifications. When analyzing failures, link scores to specific issues - such as unclear prompts, context length limits, or fundamental capability gaps - for targeted improvements.

Using Latitude for Instruction-Following Evaluations

Latitude provides a comprehensive workflow to evaluate and refine how well large language models (LLMs) follow instructions. By building on established evaluation methods, Latitude simplifies the process of assessing instruction-following. The platform records all interactions with prompts and models, storing them for detailed analysis. This centralized system helps identify patterns in failures and track improvements over time.

Observe and Debug LLM Behavior

The Interactive Playground allows you to experiment with prompts using a variety of inputs and get instant feedback. Before rolling out updates to production, you can test how effectively the model follows your instructions using sample inputs. Latitude's Advanced Output Parsing lets you focus on specific parts of a response, such as JSON fields or the final message in a conversation.

For more complex prompt evaluations, Latitude offers the "Faithfulness to Instructions" template, where an LLM acts as a judge to critique outputs based on your criteria. If evaluation scores are unsatisfactory, you can analyze logs to identify issues and refine prompts with AI-generated suggestions. The platform includes Live Mode for real-time monitoring of production logs and Batch Mode for testing prompts against golden datasets to spot regressions. These tools integrate seamlessly into Latitude’s evaluation suite, making debugging more efficient.

Set Up Evaluation Workflows

To begin, navigate to the Evaluations tab in Latitude and click "Add Evaluation." From there, you can choose the evaluation type that fits your task. Different approaches cater to specific needs: the LLM-as-Judge method is ideal for assessing adherence to complex instructions and tone, while Programmatic Rules work well for validating JSON schemas, regex patterns, and length constraints.

You can enable Live Evaluation for real-time tracking or use Batch Mode to test changes against golden datasets and identify regressions. Latitude also supports Composite Scores, which combine multiple metrics into a single performance summary. This structured reporting makes it easier to monitor overall quality and implement systematic improvements.

Collect Feedback for Continuous Improvement

Latitude’s Human-in-the-Loop (HITL) workflows allow for manual review and annotation of logs, capturing subtleties that automated metrics might miss. These annotated logs are crucial for creating golden datasets, which are then used to test future updates and catch edge cases.

The platform's Prompt Suggestions feature analyzes evaluation results, including human feedback and low scores, to recommend specific adjustments to your prompts. For traits you want to minimize, like instruction deviation, you can use the "Advanced configuration" to optimize for lower scores. This feedback loop transforms evaluation data into actionable improvements, helping you refine instruction-following performance over time.

Conclusion

Evaluating how well Large Language Models (LLMs) follow instructions isn't just a nice-to-have - it's a cornerstone of creating dependable AI systems. Many developers use viral LLM tools to accelerate this development cycle. Jiaming Wang from Meituan puts it perfectly:

"The ability to follow instructions accurately is fundamental for Large Language Models (LLMs) to serve as reliable agents in real‐world applications".

Without proper evaluation methods, AI features risk being deployed without any certainty of their performance.

Even the most advanced models, like GPT-4o and Claude-3.7-Sonnet, successfully meet requirements in less than half of complex tasks. But there’s a silver lining. By combining programmatic rules, LLM-as-Judge evaluations, and human feedback, you can create a more complete view of your model's performance.

Improvement hinges on ongoing monitoring. Batch evaluations with curated datasets can catch regressions before they reach production, while live monitoring identifies real-time issues as the model processes user traffic. This kind of proactive monitoring lays the groundwork for integrated evaluation systems to thrive.

Platforms like Latitude simplify this process by merging observation, evaluation, and feedback into one streamlined system. Features like composite scoring, prompt optimization based on evaluation insights, and human-in-the-loop workflows turn raw data into actionable improvements. To get the best results, start with clear, measurable instructions - specific word counts, required formatting, and key terminology - then incorporate subjective assessments for tone and creativity. Build your golden datasets from production logs and human annotations, and use them to refine prompts. Models will continue to evolve, but with a solid measurement framework, your instruction-following capabilities can stay a step ahead.

FAQs

What are the most important metrics for evaluating instruction-following in LLMs?

When evaluating how effectively large language models (LLMs) follow instructions, several key factors come into play. These include helpfulness, clarity, completeness, conciseness, and safety in their responses. Together, these elements provide a snapshot of how well a model adheres to the instructions it receives.

More sophisticated methods, often inspired by research frameworks, dive deeper with metrics like the directive-following rate and the model's ability to break down complex instructions into smaller, manageable steps. Assessments can involve human reviewers, automated scoring systems, or even evaluations conducted by other models to strike a balance between accuracy and cost-efficiency.

Ultimately, the most important aspects to assess are the model’s accuracy, consistency, relevance to the task, and its ability to self-correct while carrying out instructions. These benchmarks ensure the model delivers reliable and precise results.

What’s the difference between IFEval and AdvancedIF benchmarks for testing LLMs?

When it comes to evaluating how well large language models (LLMs) follow instructions, IFEval and AdvancedIF take different approaches, each tailored to specific aspects of performance.

IFEval focuses on straightforward, measurable tasks. It uses about 500 prompts to test basic instruction-following abilities, such as generating responses with specific word counts or including required keywords. This makes it a reliable choice for assessing simple, verifiable instructions where consistency and reproducibility are key.

In contrast, AdvancedIF dives into more complex territory. With over 1,600 prompts, it evaluates models on intricate, multi-turn interactions and system-prompted tasks. It uses a detailed rubric-based system and incorporates expert-designed criteria to assess advanced instruction-following capabilities. Additionally, AdvancedIF leverages reinforcement learning techniques to fine-tune model behavior over time, offering a broader and more nuanced evaluation.

In essence, IFEval is best for testing basic, clear-cut tasks, while AdvancedIF shines in analyzing more sophisticated and dynamic scenarios.

Why is it important to monitor LLMs to improve their ability to follow instructions?

Monitoring large language models (LLMs) is crucial to ensure they perform accurately and reliably over time. As these models are deployed in practical applications, their behavior can shift, making it essential to keep a close eye on their performance. Regular observation helps catch issues like errors, inconsistencies, or a drop in effectiveness, allowing developers to address problems quickly. This not only enhances the model's functionality but also strengthens user confidence.

Key metrics like response accuracy, latency, token usage, and error rates offer valuable insights into the model's performance across various scenarios. By analyzing this data, developers can fine-tune the model and make iterative improvements, helping it handle complex instructions more effectively. Ongoing evaluation ensures the model stays responsive to user needs while maintaining a high standard of performance.