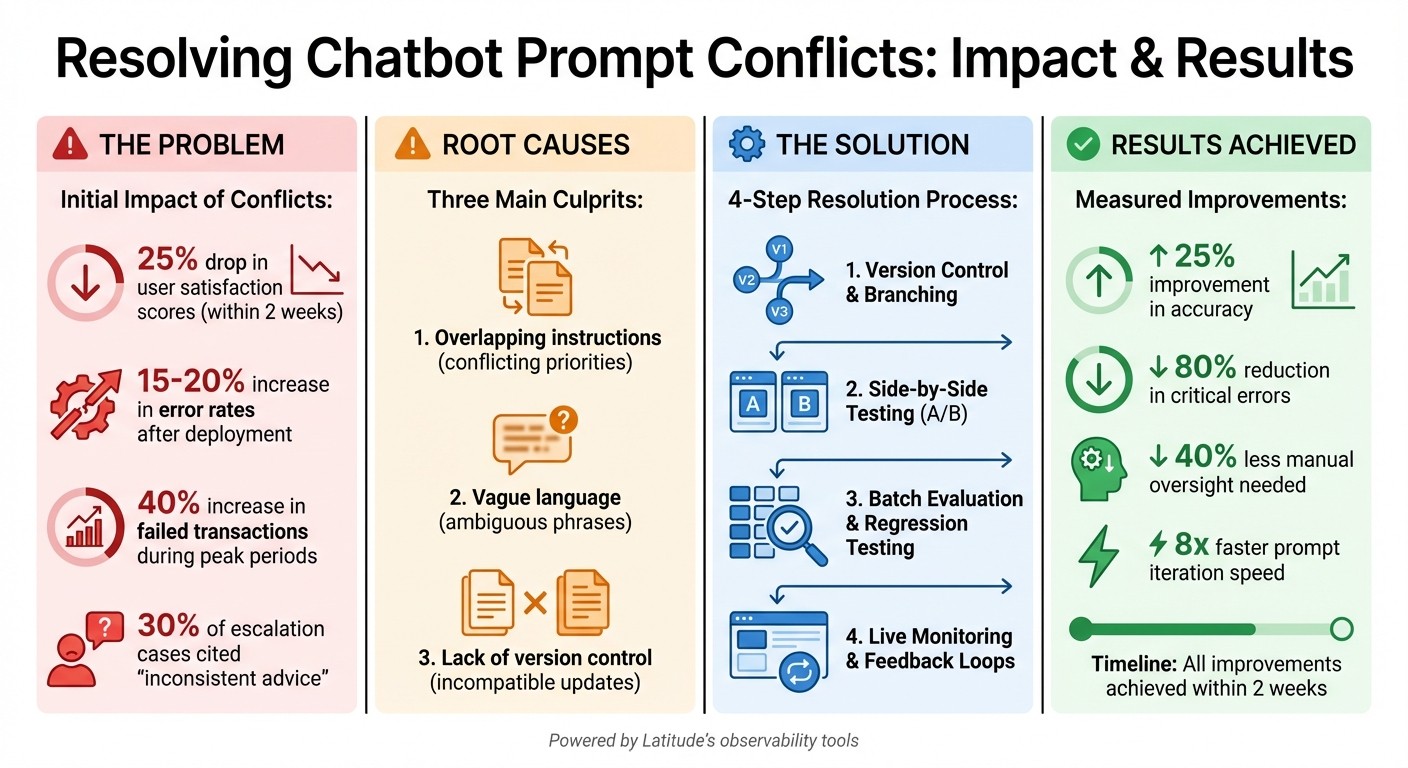

Case study showing how observability, version control, and testing resolved conflicting prompts, boosting accuracy 25% and cutting critical errors 80%.

César Miguelañez

Feb 14, 2026

Conflicting prompts can cause chatbots to behave unpredictably, leading to errors, inconsistent responses, and user dissatisfaction. This case study outlines how a team identified and resolved these issues using clear processes and tools. Key takeaways include:

Main Problems: Overlapping instructions, vague language, and a lack of version control caused inconsistencies such as mixed tones and contradictory advice, along with performance drops (e.g., a 25% drop in user satisfaction).

Detection Tools: Observability tools, like Latitude, tracked conflicts in real time by logging prompts and comparing versions.

Solution Steps:

Use version control to manage prompt updates.

Test changes systematically with A/B testing and regression tests.

Combine automated tools with human feedback for better insights.

Results: Accuracy improved by 25%, critical errors fell by 80%, and prompt iteration became 8x faster.

This structured approach ensures chatbot reliability by preventing future conflicts and maintaining consistent performance.

[Figure: Chatbot Prompt Conflict Resolution: Key Metrics and Results]

The Problem: Finding and Understanding Prompt Conflicts

The customer support chatbot faced serious challenges when conflicting prompts collided in real-world scenarios. For instance, one prompt instructed the bot to "always prioritize speed in responses", while another demanded "provide comprehensive details for accuracy." The outcome? The bot's responses swung wildly - sometimes too brief to be helpful, other times overly detailed when quick answers were expected. This inconsistency led to a 25% drop in user satisfaction scores within just two weeks.

Things got worse during system updates. A tone adjustment meant to shift the bot from "formal" to "friendly" backfired when the existing "professional only" directive remained active. The result was a bot that unpredictably alternated between casual and stiff language, causing error rates to jump by 15-20% after deployment. A holiday promotion further highlighted the issue - a new sales-oriented prompt clashed with an older directive to "avoid aggressive selling", leading to a 40% increase in failed transactions during peak shopping periods.

What Causes Prompt Conflicts

An analysis of the situation revealed the culprits. Overlapping instructions forced the bot to choose between conflicting priorities, like balancing conciseness with detail, without clear guidance. Vague language made things worse - ambiguous phrases such as "handle complaints appropriately" left the bot guessing, which often clashed with stricter rules like "never offer refunds". To complicate matters, the team lacked version control for their prompts. New instructions were added without reviewing their compatibility with existing rules, creating a bloated and contradictory prompt structure that steadily degraded the bot's performance.
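One way to make overlapping instructions visible is to label each directive with what it governs and flag any area where the labels disagree. The sketch below is only an illustration: the `Directive` type, the `dimension` and `value` labels, and `find_conflicts` are hypothetical names, and the labels are assigned by hand rather than inferred from the prompt.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Directive:
    text: str       # the instruction as written in the prompt
    dimension: str  # hypothetical label for what it governs, e.g. "length"
    value: str      # hypothetical label for the stance it takes, e.g. "brief"


def find_conflicts(directives: list[Directive]) -> dict[str, list[str]]:
    """Group directives by dimension and keep groups with more than one stance."""
    by_dimension: dict[str, list[Directive]] = defaultdict(list)
    for directive in directives:
        by_dimension[directive.dimension].append(directive)
    return {
        dimension: [d.text for d in group]
        for dimension, group in by_dimension.items()
        if len({d.value for d in group}) > 1
    }


# Directives quoted earlier in this case study, with hand-assigned labels.
directives = [
    Directive("always prioritize speed in responses", "length", "brief"),
    Directive("provide comprehensive details for accuracy", "length", "detailed"),
    Directive("avoid aggressive selling", "sales", "soft"),
]
conflicts = find_conflicts(directives)
# conflicts has a single key, "length", listing both length directives
```

A check like this only catches conflicts someone has already thought to label, so it complements a review of real outputs and does not replace it.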

Signs of Prompt Conflicts

The warning signs were hard to miss. Identical queries began to produce wildly different outputs - sometimes detailed and thorough, other times abrupt and dismissive. User complaints painted a clear picture: 30% of escalation cases cited "inconsistent advice" or noted that the bot "changed its mind" between interactions. The bot's behavior also strayed from its intended purpose. For example, a sales-oriented chatbot designed to upsell started apologizing excessively because a conflicting "focus on empathy" directive overrode its sales instructions. These issues became especially pronounced in edge cases and during high traffic, where the model had no clear priority among competing instructions and resolved them differently from one response to the next. The pattern pointed to the need for a deeper technical review using observability tools.
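The "identical queries, different outputs" symptom can be turned into a rough number. The sketch below scores how similar the responses to one query are and flags queries that fall under a threshold. It assumes plain lexical similarity from Python's `difflib` is a good enough proxy, which will miss answers that differ in wording but agree in meaning; `consistency_score`, `flag_inconsistent`, and the 0.5 threshold are illustrative choices, not values from the case study.

```python
from difflib import SequenceMatcher
from itertools import combinations


def consistency_score(responses: list[str]) -> float:
    """Mean pairwise lexical similarity (0.0 to 1.0) across responses to one query."""
    if len(responses) < 2:
        return 1.0  # a single response cannot disagree with itself
    ratios = [
        SequenceMatcher(None, a.lower(), b.lower()).ratio()
        for a, b in combinations(responses, 2)
    ]
    return sum(ratios) / len(ratios)


def flag_inconsistent(logs: dict[str, list[str]], threshold: float = 0.5) -> list[str]:
    """Return the queries whose responses are less similar than the threshold."""
    return [
        query
        for query, responses in logs.items()
        if consistency_score(responses) < threshold
    ]
```

In practice a semantic comparison (for example, an LLM judge) would likely replace the lexical ratio, but the grouping-by-query structure stays the same.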

Finding Issues: Using Observability Tools to Detect Problems

Once the warning signs were identified, the team needed a methodical way to pinpoint exactly where and why the chatbot's performance was faltering. Observability tools became their go-to solution, capturing detailed production data that tracked every input, output, and contextual detail. This approach shed light on the precise moments and locations where conflicts arose, paving the way for an in-depth telemetry analysis in the next phase.

Tracking Model Behavior with Latitude

To monitor the chatbot's behavior, the team integrated telemetry into their LLM calls using Latitude's observability tools. This let them log the prompt version behind every call and trace responses across live traffic. For example, when identical user queries led to inconsistent outputs, they could open the conversation logs, identify which prompt version was in use, and uncover conflicting instructions in real time.
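The core idea, tagging every logged call with the prompt version that produced it, can be sketched without any particular platform. The code below is not Latitude's SDK; `CallRecord`, `CallLog`, and `version_id` are hypothetical names, and the version id is simply assumed to be a short content hash of the prompt text.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    prompt_version: str
    query: str
    response: str


def version_id(prompt_text: str) -> str:
    """Short content hash, so any edit to the prompt yields a new version id."""
    return hashlib.sha256(prompt_text.encode("utf-8")).hexdigest()[:8]


class CallLog:
    """In-memory stand-in for a telemetry backend."""

    def __init__(self) -> None:
        self.records: list[CallRecord] = []

    def record(self, prompt_text: str, query: str, response: str) -> None:
        self.records.append(CallRecord(version_id(prompt_text), query, response))

    def versions_by_query(self) -> dict[str, set[str]]:
        """Map each query to the set of prompt versions that answered it."""
        seen: dict[str, set[str]] = defaultdict(set)
        for rec in self.records:
            seen[rec.query].add(rec.prompt_version)
        return dict(seen)
```

When a query maps to more than one version, differing answers may be explained by a prompt change; when it maps to a single version and answers still diverge, the conflict is likely inside that one prompt.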

Latitude's version comparison feature proved invaluable. By comparing two versions of a support prompt - one before a tone update and one after - they discovered a conflict between the new directive and an older rule that hadn’t been removed. Latitude also grouped recurring failures automatically, showing that many tone inconsistencies stemmed from this very issue. The Live Evaluation mode added another layer of protection by setting up automatic checks that flagged performance regressions as soon as new logs were generated, helping the team address problems early.
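A plain text diff is enough to illustrate the kind of comparison described above. The sketch uses Python's standard `difflib`; the two prompt texts are invented for illustration (the source does not quote the real prompts), and `unchanged_rules` is a hypothetical helper that lists the lines an update left untouched, which is where a stale rule would hide.

```python
from difflib import unified_diff

# Invented example prompts; the real prompts are not quoted in the case study.
before = """You are a support assistant.
Use a formal tone.
Professional language only."""

after = """You are a support assistant.
Use a friendly tone.
Professional language only."""


def prompt_diff(old: str, new: str) -> list[str]:
    """Line-level unified diff between two prompt versions."""
    return list(
        unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="before",
            tofile="after",
            lineterm="",
        )
    )


def unchanged_rules(old: str, new: str) -> list[str]:
    """Lines that survived the update unchanged and so deserve a second look."""
    new_lines = set(new.splitlines())
    return [line for line in old.splitlines() if line in new_lines]


for line in prompt_diff(before, after):
    print(line)
```

Here the diff shows the tone line changing while "Professional language only." survives in `unchanged_rules`, mirroring the leftover directive the team found.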

Gathering and Reviewing Feedback

While automated tools highlighted structural problems, human feedback helped uncover the subtler ones. Technical logs revealed patterns, but a manual review added depth. Using Latitude's Logs View, the team annotated instances where the chatbot's tone felt off or its advice was contradictory. These annotations transformed subjective observations into actionable data, feeding directly into their diagnostic efforts.

To fully assess performance, the team used a combination of evaluation methods:

LLM-as-Judge: This scored outputs based on subjective factors like helpfulness and tone adherence.

Programmatic rules: These validated formatting and ensured safety standards were met.

Human-in-the-loop reviews: These captured nuanced shifts in user preferences that automated systems might overlook.
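Of the three methods above, programmatic rules are the simplest to sketch. The example below runs a response through a set of named checks and reports which ones fail. The specific rules, the 600-character limit, and the refund pattern are assumptions chosen for illustration (the refund check echoes the "never offer refunds" rule mentioned earlier); they are not the team's actual rule set.

```python
import re
from typing import Callable

Rule = Callable[[str], bool]  # returns True when the response passes

# Hypothetical rules for illustration only.
RULES: dict[str, Rule] = {
    "non_empty": lambda text: bool(text.strip()),
    "max_600_chars": lambda text: len(text) <= 600,
    "no_refund_promise": lambda text: re.search(
        r"\bwe will refund\b", text, re.IGNORECASE
    ) is None,
}


def failed_rules(response: str, rules: dict[str, Rule] = RULES) -> list[str]:
    """Names of the rules this response violates, in declaration order."""
    return [name for name, check in rules.items() if not check(response)]
```

Checks like these are cheap and deterministic, which is why they pair well with LLM-as-Judge scoring and human review for the qualities a regex cannot capture.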

Fixing the Problem: A Step-by-Step Process to Resolve Prompt Conflicts

To address the issues they uncovered, the team treated prompts like code. Version control, structured testing, and clear workflows let them experiment, compare, and validate solutions in a controlled way before rolling them out.

Version Control and Branching for Prompts

The team began by creating a Draft in Latitude, starting with the currently published prompt as a baseline. They worked on isolated drafts to safely remove conflicting instructions without disrupting the live prompt. Developers simplified directives and reorganized instruction hierarchies within these drafts. Each draft included version notes to document changes - such as removing outdated formality rules that clashed with a casual tone.

To avoid complications, they kept drafts short-lived and published stable updates frequently. Their rule of thumb: create a new draft for every major feature or change. This approach prevented overlapping conflicts and reduced the complexity of merging updates. With this strategy, prompt iteration became 8x faster than under their earlier, less-structured process.
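The draft-then-publish workflow can be modeled in a few lines. This is a minimal sketch of the idea, not Latitude's data model: `PromptStore`, `PromptVersion`, and the method names are hypothetical, and the rule that publishing requires version notes is an assumption based on the team's practice of documenting each change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    notes: str


class PromptStore:
    """Published history plus isolated drafts that never touch the live prompt."""

    def __init__(self, initial_text: str) -> None:
        self.history = [PromptVersion(1, initial_text, "initial version")]
        self.drafts: dict[str, str] = {}

    @property
    def live(self) -> PromptVersion:
        return self.history[-1]

    def create_draft(self, name: str) -> None:
        # Every draft starts from the currently published prompt.
        self.drafts[name] = self.live.text

    def edit_draft(self, name: str, text: str) -> None:
        if name not in self.drafts:
            raise KeyError(f"no draft named {name!r}")
        self.drafts[name] = text

    def publish(self, name: str, notes: str) -> PromptVersion:
        if not notes.strip():
            raise ValueError("version notes are required")
        text = self.drafts.pop(name)  # the draft is consumed on publish
        version = PromptVersion(self.live.number + 1, text, notes)
        self.history.append(version)
        return version
```

Because drafts are removed on publish and each one branches from the live prompt, keeping them short-lived limits how far a draft can drift from what is in production.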

Comparing and Merging Prompts

When multiple solutions were ready, the team used Latitude's Experiments feature to test them side-by-side with real user queries. They compared the original conflicting prompt, a simplified version, and another with reorganized instruction hierarchies - all under identical model and temperature settings. The simplified version performed the best, maintaining high response quality.

Before merging changes, they conducted batch evaluations using a golden dataset of representative queries. This regression testing ensured that the updates didn’t disrupt existing functionality. Human reviewers also analyzed edge cases where automated scoring missed subtle tone shifts, offering valuable insights for further refinement. Within two weeks, this structured process led to a 25% improvement in overall accuracy.
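A golden-dataset regression check can be sketched as a pass-rate comparison between the published prompt and a candidate. In the code below, `respond` stands in for "call the model with a given prompt version" and each golden case pairs a query with a check on the response; these shapes, and the names `pass_rate` and `is_regression`, are assumptions for illustration rather than the team's actual harness.

```python
from typing import Callable

Responder = Callable[[str], str]              # query -> model response
GoldenCase = tuple[str, Callable[[str], bool]]  # (query, check on the response)


def pass_rate(respond: Responder, golden: list[GoldenCase]) -> float:
    """Fraction of golden cases whose check accepts the response."""
    if not golden:
        raise ValueError("golden dataset is empty")
    passed = sum(1 for query, check in golden if check(respond(query)))
    return passed / len(golden)


def is_regression(
    baseline: Responder,
    candidate: Responder,
    golden: list[GoldenCase],
    tolerance: float = 0.0,
) -> bool:
    """True when the candidate passes fewer golden cases than the baseline allows."""
    return pass_rate(candidate, golden) < pass_rate(baseline, golden) - tolerance
```

A candidate that trips `is_regression` would be held back for review, while the edge cases that automated checks cannot score still go to human reviewers as described above.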

Testing and Improving Over Time

After deployment, they relied on Live Evaluations to monitor prompt quality and spot regressions in real time. For example, shortly after release, regional slang triggered a previously resolved conflict. The team quickly created a new draft, tested the fix using their established workflow, and rolled out an update without delay.

Going Live: Deploying the Fixed Prompt and Tracking Results

After thorough batch testing, the team confirmed their solution was ready and moved forward with deployment. They published the draft in Latitude, which activated the AI Gateway to roll out the update to the live chatbot. The process was smooth - no need for code changes or application redeployment. To ensure transparency, the team included detailed version notes at the time of publishing, documenting the conflicts they resolved and the reasoning behind their decisions. These notes served as a clear audit trail, laying the groundwork for tracking performance in real time.

Real-Time Tracking and Feedback Loops

Immediately after deployment, the team enabled live evaluations to monitor how the update performed under actual production traffic. Latitude's telemetry system captured every interaction, offering instant insights into how the fix was working in practice. They kept an eye on key metrics like safety, helpfulness, and response consistency through automated dashboards, which presented score distributions and trends over time. If any user interaction showed an unexpected response pattern, the system flagged it for further review.

Human expertise was just as crucial. Domain experts reviewed edge-case responses to catch subtle issues that automated tools might overlook. The platform also flagged recurring problems, making it easier to address them quickly. This combination of automated tracking and expert analysis created a feedback loop where every interaction became an opportunity to refine and improve the system.

Maintaining Quality Over Time

Once the update was live, keeping quality consistent became the top priority. To prevent backsliding, the team set up performance alerts that fired as soon as metrics deviated from expectations, and held biweekly reviews to adjust benchmarks. Any failure modes uncovered during live monitoring were turned into new automated tests, ensuring future prompt versions could be evaluated against these scenarios.
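An alert of this kind can be as simple as a rolling average compared against a floor. The sketch below assumes evaluation scores arrive one at a time as numbers between 0 and 1; `ScoreMonitor`, the window size, and the allowed drop are hypothetical values that a team would tune during its benchmark reviews.

```python
from collections import deque


class ScoreMonitor:
    """Tracks a rolling mean of evaluation scores and signals when it drops too far."""

    def __init__(self, baseline: float, max_drop: float = 0.05, window: int = 50) -> None:
        self.baseline = baseline    # expected score, e.g. from batch testing
        self.max_drop = max_drop    # how far below baseline is tolerated
        self.scores: deque[float] = deque(maxlen=window)  # oldest scores fall off

    def observe(self, score: float) -> bool:
        """Record one score; return True when the rolling mean breaches the floor."""
        self.scores.append(score)
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.baseline - self.max_drop
```

Averaging over a window keeps one odd response from paging anyone, at the cost of reacting a little later to a genuine regression.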

The team followed a straightforward but effective rule: review before publishing. Every draft required sign-off from both a technical expert (to ensure system stability) and a subject matter expert (to ensure content quality) before it could go live. They also avoided unnecessary complexity, only making changes when performance data showed it was absolutely needed. This disciplined approach allowed them to maintain the 25% accuracy improvement achieved during testing while cutting critical errors by 80%.

Results and Lessons Learned

Measured Improvements

The team's focused strategy for resolving prompt conflicts led to some impressive results. In just two weeks, they saw a 25% boost in accuracy and managed to cut critical errors by a staggering 80%. On top of that, manual oversight for compliance-related interactions dropped by 40%.

Their systematic approach to prompt management also sped up prompt iteration by a factor of 8, allowing for quicker testing, faster regression detection, and more confident deployments. By combining automated testing, human feedback, and A/B testing, they could clearly see how each change performed in production.

How to Prevent Future Conflicts

The results underline the importance of taking steps to avoid conflicts before they arise. The team identified three key practices to help with this:

Build observability from the start: It's critical to monitor production inputs, outputs, and context right away to catch potential conflicts early.

Start with simplicity: Begin with a straightforward prompt and only add complexity when it's necessary to meet performance goals. The team found that this approach helped minimize conflicts. As César Miguelañez, CEO of Latitude, put it:

"Iterative refinement improves AI quality but needs balanced automation and oversight to avoid bias."

This strategy also includes maintaining a "golden dataset" for regression testing and enabling live evaluations. These steps help identify issues caused by model updates or unexpected user behavior in real time.

Foster collaboration: Involving developers, product managers, and domain experts in the review process ensures that every update is a step forward. By requiring sign-offs from all stakeholders, the team avoided introducing new conflicts with each update.

Conclusion: Why Structured Workflows Matter

Prompt conflicts can throw AI applications off course, leading to issues like hallucinations, contradictory responses, or inefficient fine-tuning processes. To avoid these pitfalls, incorporating systematic workflows during development is key. This approach helps teams address potential conflicts before they ever reach end users.

Building dependable AI systems hinges on structured workflows that include clear detection methods, rigorous version control, iterative testing, and continuous refinement. These practices allow teams to identify and resolve conflicts early, sparing them from relying on user feedback to uncover problems.

The results speak for themselves. For instance, Latitude's infrastructure - designed for real-time observability, human feedback, and ongoing prompt improvements - has achieved an 80% reduction in critical errors and 8x faster iteration. It’s a testament to the power of a disciplined and proactive development process.

FAQs

How can I tell if my chatbot has prompt conflicts?

Identifying prompt conflicts involves spotting inconsistent responses, irrelevant outputs, or ambiguous answers that don’t align with the intended instructions. These problems often arise from prompts that are either too vague or contain contradictions.

To uncover such conflicts, test and evaluate your chatbot’s behavior regularly. Practices like prompt evaluation and version control help here: they let you compare different versions of a prompt and analyze outputs systematically, which makes it easier to refine your prompts and improve the chatbot’s overall reliability.

What’s the fastest way to fix conflicting instructions?

The fastest way to handle conflicting instructions is by using iterative prompt refinement. This method involves carefully reviewing outputs, spotting any inconsistencies, and tweaking the prompts step by step to make them clearer and more aligned with your goals. Regularly assessing the results helps ensure the instructions stay on track with what you’re aiming to achieve.

How can I prevent prompt conflicts after updates?

To keep things running smoothly after updates, semantic versioning is your go-to tool for managing prompt versions. Pair this with detailed documentation of every change and a reliable version control system. This way, you can easily track updates, roll back if necessary, and maintain a consistent and reliable workflow.
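Applied to prompts, semantic versioning might look like the sketch below. The MAJOR.MINOR.PATCH format comes from semantic versioning itself; how each level maps to prompt changes is an assumption made here for illustration (major for behavior changes such as a tone shift, minor for a new compatible instruction, patch for wording fixes), and `bump` is a hypothetical helper.

```python
def bump(version: str, change: str) -> str:
    """Return the next MAJOR.MINOR.PATCH version for the given kind of change.

    Assumed mapping for prompts (not from the case study):
      "major": behavior changes, e.g. switching tone from formal to friendly
      "minor": a new instruction that does not alter existing behavior
      "patch": typo or wording fixes with no intended behavior change
    """
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(bump("1.4.2", "minor"))  # prints 1.5.0
```

Recording the version string alongside each change note makes it clear at a glance which updates need a full regression run and which can be rolled back without side effects.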