Compare manual, automated, and hybrid (HITL) labeling—trade-offs in accuracy, speed, cost, scalability, and when to use each approach.

César Miguelañez

Jan 23, 2026

When it comes to preparing data for AI, the choice between manual annotation and automated labeling is all about balancing accuracy, speed, and cost. Here's the key takeaway:

Manual annotation excels in accuracy and handling complex tasks but is slow and expensive.

Automated labeling is fast and cost-effective for large datasets but struggles with nuanced or edge-case scenarios.

Many teams now use a hybrid approach (Human-in-the-Loop), combining automation's speed with human oversight for quality assurance.

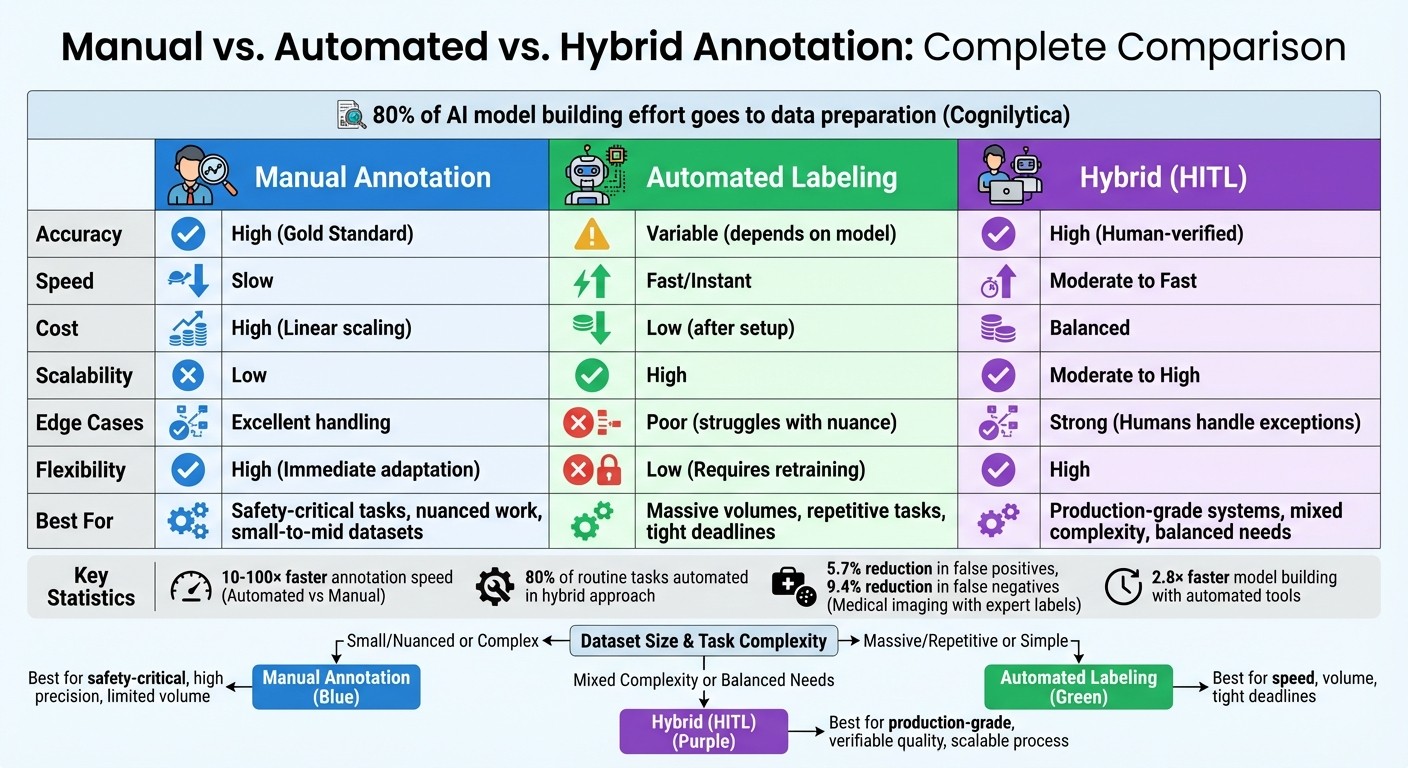

Quick Comparison

| Factor | Manual Annotation | Automated Labeling | Hybrid (HITL) |
|---|---|---|---|
| Accuracy | High | Variable | High (human-verified) |
| Speed | Slow | Fast | Moderate to fast |
| Cost | High | Low after setup | Balanced |
| Scalability | Low | High | Moderate to high |
| Edge Cases | Excellent handling | Poor | Strong |
| Flexibility | High | Low (requires retraining) | High |

For small datasets or nuanced tasks, manual annotation is the better option. For large-scale, repetitive tasks, automated labeling is more practical. Hybrid methods work well for balancing efficiency and accuracy, making them a popular choice for production-ready systems.

Manual vs Automated vs Hybrid Data Annotation Comparison

Manual Annotation: Strengths and Limitations

Manual annotation relies on human expertise to label data, offering exceptional accuracy for AI projects that demand precision. However, its effectiveness can be tempered by challenges in scalability and efficiency. Let’s break down its strengths and limitations.

Strengths of Manual Annotation

Human annotators bring a level of judgment and context that machines often lack. They can interpret sarcasm, subtle cues, and ambiguous scenarios that automated systems struggle to process. For instance, distinguishing between a lively crowd at a food truck and a potentially aggressive mob - two situations with similar motion patterns - requires human insight to evaluate multiple data signals.

"Manual labeling... is the gold standard for data quality." - Snorkel AI

In specialized fields, manual annotation is often irreplaceable. Tasks like analyzing medical images, reviewing legal documents, or ensuring financial compliance demand subject-matter experts who understand the high stakes involved. For example, a medical imaging study found that an AI system trained on expert-labeled mammograms reduced false positives by 5.7% and false negatives by 9.4% on US screening data, outperforming human radiologists on both metrics.

Manual annotation also shines when handling edge cases. Automated systems often falter with out-of-distribution data, such as recognizing new vehicle types like e-scooters in traffic footage. In safety-critical applications like autonomous driving or aerospace, human oversight becomes essential to ensure reliable decision-making.

Limitations of Manual Annotation

While manual annotation is precise, it comes with notable downsides. The cost of scaling manual efforts is a significant barrier, as the expense grows directly with the volume of data. For large datasets requiring millions of labels, this approach can quickly become financially unsustainable.

"Data preparation - collecting, cleaning, structuring, and data labeling for training data preparation soaks up the lion's share of effort for building AI Data Annotation models (Cognilytica research surveys show about 80%)." - Vikram Kedlaya, Taskmonk

Speed and scalability remain persistent challenges. Expanding manual pipelines means hiring, training, and managing additional staff, which can create bottlenecks during periods of high demand. Additionally, large annotation teams often face "annotation drift", where inconsistencies emerge over time due to varying judgments among annotators. Unlike automated systems that can adapt quickly, manual datasets often require complete relabeling when project goals evolve.
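One common way to put a number on the inconsistency described above is an inter-annotator agreement statistic such as Cohen's kappa, which discounts the agreement two annotators would reach by chance. The sketch below is a minimal pure-Python version for two annotators labeling the same items; the sentiment labels are made-up examples, and tracking kappa across successive batches as a drift signal is a suggested practice, not something the article prescribes.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same six items.
annotator_1 = ["pos", "neg", "neg", "pos", "neu", "pos"]
annotator_2 = ["pos", "neg", "pos", "pos", "neu", "neg"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.455
```

Raw agreement here is 4 of 6 items (about 67%), but kappa is only about 0.45 once chance agreement is removed, which is why agreement statistics are usually preferred over simple match rates when monitoring large teams.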

Privacy concerns add another layer of difficulty. Manual annotation may expose sensitive or personally identifiable information (PII) to human workers, requiring strict compliance measures to protect data. These frameworks can introduce additional costs and complexity.

Automated Labeling: Capabilities and Constraints

Automated labeling offers a cost-effective way to scale data preparation. Once a pipeline is established, it can handle millions of data points at minimal additional cost. Projects on the scale of ImageNet, which required "person-years" of manual effort, become far more achievable when automation compresses those timelines. However, this convenience comes with limitations, particularly in contextual understanding and error handling.

Benefits of Automated Labeling

The most apparent advantage of automated labeling is speed. These systems can process data points 10–100 times faster than manual methods, labeling millions of entries within seconds after the initial setup. In fact, subject matter experts using automated tools have reported building models 2.8 times faster and achieving a 45.5% improvement in performance compared to manual approaches.

Another key benefit is consistency. Automated systems apply the same logic uniformly across tasks, which is especially useful for high-agreement activities like barcode recognition, structured text analysis, and basic object detection. This uniformity eliminates the annotation drift often seen in large manual teams. Additionally, automated labeling allows for quick adjustments when project requirements or data distributions change. Instead of manually reviewing each data point, a few modifications to the labeling functions can regenerate the dataset at "computer speed".

Programmatic labeling also creates a transparent record for each label, linking it back to specific functions or heuristics. This traceability makes it easier to identify, audit, and correct biases compared to the often opaque decisions made by human annotators.
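The idea of labeling functions with per-label traceability can be sketched in plain Python. Each hypothetical function below encodes one keyword heuristic and either votes for a label or abstains; a simple majority vote combines the votes, and the names of the functions behind the winning label are kept as provenance. This is a deliberately simplified stand-in: frameworks such as Snorkel combine votes with a learned label model rather than a plain majority vote, and the keywords and label names here are assumptions for illustration.

```python
from collections import Counter
from typing import Callable, Optional

ABSTAIN = None
LabelingFunction = Callable[[str], Optional[str]]


def lf_contains_refund(text: str) -> Optional[str]:
    # Hypothetical heuristic: refund requests usually signal a complaint.
    return "complaint" if "refund" in text.lower() else ABSTAIN


def lf_contains_thanks(text: str) -> Optional[str]:
    # Hypothetical heuristic: thanks usually signals praise.
    return "praise" if "thank" in text.lower() else ABSTAIN


def lf_contains_broken(text: str) -> Optional[str]:
    # Hypothetical heuristic: "broken" usually signals a complaint.
    return "complaint" if "broken" in text.lower() else ABSTAIN


LABELING_FUNCTIONS: list[LabelingFunction] = [
    lf_contains_refund,
    lf_contains_thanks,
    lf_contains_broken,
]


def label(text: str, lfs: list[LabelingFunction]) -> tuple[Optional[str], list[str]]:
    """Majority vote over non-abstaining functions; returns (label, provenance)."""
    votes = {lf.__name__: lf(text) for lf in lfs}
    cast = {name: v for name, v in votes.items() if v is not ABSTAIN}
    if not cast:
        return ABSTAIN, []  # no heuristic fired: leave the item for a human
    ranked = Counter(cast.values()).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN, sorted(cast)  # tie between heuristics: escalate, keep who disagreed
    winner = ranked[0][0]
    # Provenance: the functions that produced the winning label, for auditing.
    return winner, sorted(name for name, v in cast.items() if v == winner)


for doc in ["The screen arrived broken, I want a refund",
            "Thank you, works great",
            "Delivered on Tuesday"]:
    print(doc, "->", label(doc, LABELING_FUNCTIONS))
```

When requirements change, editing or adding one function and rerunning `label` over the corpus regenerates every affected label, and the provenance list shows exactly which heuristic to inspect when a label looks wrong.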

Constraints of Automated Labeling

Despite its advantages, automated labeling has its challenges. One significant limitation is its lack of contextual understanding. Automated systems struggle with nuances like idiomatic expressions, sarcasm, or ambiguous visual scenarios. For instance, Google's PaLM foundation model achieved an F1 score of only 50 on a specialized banking chatbot task; even with focused prompt engineering, it reached just 69, still short of what enterprise use requires.

Another issue is the potential for errors to propagate silently. If an algorithm is flawed, it can spread inaccuracies across an entire dataset, leading models to learn incorrect patterns. Automated systems are also highly sensitive to data shifts, such as changes in slang, lighting conditions in images, or the introduction of new objects like e-scooters in traffic footage. Studies have shown that when experts rely too heavily on pre-populated suggestions from model-assisted labeling, they may accept incorrect annotations and take less initiative in improving the dataset.

Setting up automated labeling systems requires a significant investment of technical expertise and resources. Once operational, these systems need regular tuning and monitoring to stay effective. Without proper maintenance, pipelines risk becoming outdated and inaccurate over time.

Manual vs. Automated Annotation: Key Differences

Manual annotation is known for its precision and ability to capture subtle nuances, but it’s often slow and costly. On the other hand, automated labeling processes data at lightning speed once configured but can falter when dealing with context, sometimes spreading errors across an entire dataset without detection. Let’s break down how these two methods differ in terms of cost and adaptability.

When it comes to expenses, the two approaches have fundamentally different cost structures. Manual labeling costs rise linearly with dataset size. Automated systems, by contrast, require a significant upfront investment in setup and technical expertise, but once operational, the cost per label drops sharply, making automation a practical choice for large datasets, especially when minor inaccuracies are acceptable.
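The two cost structures can be compared with a simple linear model: manual cost is `c_m × n` for `n` labels, while automated cost is a fixed setup cost `F` plus `c_a × n`. Setting them equal gives a break-even volume `n* = F / (c_m − c_a)`. The sketch below computes it; all dollar figures are hypothetical placeholders, and the model ignores the ongoing tuning and monitoring costs mentioned earlier.

```python
import math


def break_even_volume(setup_cost: float, manual_per_label: float,
                      automated_per_label: float) -> int:
    """Smallest label count at which automation costs no more than manual labeling.

    Assumes purely linear costs:
      manual    = manual_per_label * n
      automated = setup_cost + automated_per_label * n
    """
    marginal_saving = manual_per_label - automated_per_label
    if marginal_saving <= 0:
        raise ValueError("automation never breaks even unless it is cheaper per label")
    return math.ceil(setup_cost / marginal_saving)


# Hypothetical figures: $50,000 pipeline setup, $0.50 per manual label,
# $0.05 per automated label (compute plus spot checks).
n_star = break_even_volume(50_000, 0.50, 0.05)
print(n_star)  # 111112
```

Under these assumed numbers, manual labeling stays cheaper below roughly 111,000 labels, and every label beyond that point widens automation's advantage.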

Adaptability is another major point of contrast. Human annotators can quickly adjust to new guidelines or handle unusual, edge-case scenarios. Automated systems, on the other hand, require updates to their rules or retraining to accommodate changes, like the emergence of new slang or shifting data trends.

"Competitive edge now comes from the data quality, annotation consistency, and the velocity of your labeled-data pipeline." - Vikram Kedlaya, Taskmonk

Side-by-Side Comparison

Here’s a quick snapshot of how manual, automated, and hybrid (Human-in-the-Loop or HITL) approaches stack up:

| Factor | Manual Annotation | Automated Labeling | Hybrid (HITL) |
|---|---|---|---|
| Accuracy | High (Gold Standard) | Variable; depends on model | High (Human-verified) |
| Speed | Slow | Fast/Instant | Moderate to Fast |
| Cost | High (Linear scaling) | Low (after setup) | Balanced |
| Scalability | Low | High | Moderate to High |
| Edge Cases | Excellent handling | Poor; struggles with nuance | Strong (Humans handle exceptions) |
| Flexibility | High (Immediate adaptation) | Low (Requires retraining) | High |

Today, many production teams lean toward hybrid solutions. In this model, automation takes care of about 80% of routine tasks, while human experts step in to manage complex or high-stakes decisions. This approach strikes a balance between speed and the level of accuracy required for critical applications.

Hybrid Annotation: Combining Manual and Automated Methods

The hybrid annotation approach merges the precision of human oversight with the speed of automation, creating a balanced and efficient method for data labeling. In a human-in-the-loop (HITL) setup, automation takes the first step by pre-labeling data based on learned patterns. Then, human experts step in to review, correct, or flag any inaccuracies, ensuring the automated process is refined by their expertise.

An added layer of efficiency comes from confidence-based routing. Here, the system assigns a confidence score to each automated label. High-confidence predictions are either auto-approved or reviewed in batches, while uncertain cases are escalated to human experts. This ensures that errors don’t cascade across large datasets, and the process becomes part of a continuous improvement loop.
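Confidence-based routing can be sketched as a three-way split, assuming each automated label arrives with a score between 0 and 1. The thresholds (0.95 and 0.60) and the queue names are hypothetical choices for illustration; in practice they would be tuned against a human-verified sample.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's score in [0, 1]


def route(pred: Prediction, auto_threshold: float = 0.95,
          batch_threshold: float = 0.60) -> str:
    """Assign an automated label to a review queue based on its confidence."""
    if not 0.0 <= pred.confidence <= 1.0:
        raise ValueError(f"confidence out of range for {pred.item_id}")
    if pred.confidence >= auto_threshold:
        return "auto_approve"   # accepted without per-item review
    if pred.confidence >= batch_threshold:
        return "batch_review"   # a reviewer skims these in bulk
    return "expert_review"      # uncertain: escalate to a domain expert


def triage(preds: list[Prediction]) -> dict[str, list[str]]:
    """Group item IDs by the queue each prediction is routed to."""
    queues: dict[str, list[str]] = {"auto_approve": [], "batch_review": [], "expert_review": []}
    for p in preds:
        queues[route(p)].append(p.item_id)
    return queues


batch = [
    Prediction("img-001", "car", 0.99),
    Prediction("img-002", "e-scooter", 0.41),
    Prediction("img-003", "pedestrian", 0.83),
]
print(triage(batch))
# {'auto_approve': ['img-001'], 'batch_review': ['img-003'], 'expert_review': ['img-002']}
```

The low-confidence e-scooter is exactly the kind of out-of-distribution case that lands with an expert, and the expert's correction is what feeds the retraining loop described next.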

As humans correct errors and resolve ambiguities, these updates are fed back into the model, which retrains and improves over time. This cycle of learning and refinement significantly enhances the model’s accuracy.

"The human cannot be pulled out of the loop entirely - they've got the domain knowledge the model needs." - Braden Hancock, Head of Technology, Snorkel AI

Benefits of Hybrid Methods

Hybrid workflows have proven effective across a variety of industries, adapting to different needs and challenges. For example:

In healthcare, pre-trained models can identify organs or flag anomalies in radiology scans, which radiologists then validate to ensure safety-critical accuracy.

In retail, Taskmonk reported in 2025 that a tech company reduced customer activation time from 2 hours to just 10 minutes by using hybrid labeling for product data.

In finance, models automatically process structured fields from invoices or KYC documents, while human reviewers handle ambiguous handwriting or unusual layouts.

The cost savings are striking. Reducing labeling time by just 10% for a dataset of 1 million images can save over 16,000 hours of labor. For subject matter experts billing at $100+ per hour, this translates into substantial financial savings. Hybrid methods allow experts to focus on complex tasks while automation handles repetitive ones.
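The 16,000-hour figure can be reproduced with simple arithmetic if one assumes an average of about 10 minutes of annotation per image. That per-image time is an assumption, since the article does not state it; at anything below roughly 9.6 minutes per image, a 10% reduction would save fewer than 16,000 hours. The $100 hourly rate is the lower bound quoted above.

```python
def hours_saved(num_items: int, minutes_per_item: float, reduction: float) -> float:
    """Labor hours saved by cutting per-item labeling time by `reduction` (a 0-1 fraction)."""
    return num_items * minutes_per_item * reduction / 60


# Assumed: 1M images, ~10 minutes each, 10% faster labeling, $100/hour experts.
saved = hours_saved(num_items=1_000_000, minutes_per_item=10, reduction=0.10)
cost_saved = saved * 100
print(f"{saved:,.0f} hours, ${cost_saved:,.0f}")  # 16,667 hours, $1,666,667
```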

These workflows are also highly adaptable. For instance, when new product categories appear in retail or medical terminology evolves, human annotators can adjust quickly while the model retrains in the background. Experts can even create simple "labeling functions" that encode their knowledge, enabling the system to apply these rules to millions of data points instantly - speeding up annotation by 10× to 100× compared to manual methods.

"Accelerate workflows and reduce costs by automating the most repetitive or straightforward tasks." - CVAT Team

Choosing the Right Annotation Method

Selecting the best annotation method hinges on six key factors: dataset size, task complexity, accuracy requirements, timeline, budget, and data privacy. Each project needs a tailored approach, balancing these elements much like the earlier trade-offs between manual precision and automated scalability.

Dataset Size:

For smaller datasets, manual annotation works well, but as the dataset grows, costs can skyrocket. When you’re dealing with millions of data points, automated or programmatic methods become crucial to maintain efficiency while keeping expenses in check. This aligns with the scalability challenges discussed earlier.

Task Complexity:

The complexity of the task determines whether human input is necessary. Manual annotation is ideal for intricate tasks requiring context, like sarcasm detection, sentiment analysis, or medical imaging. On the other hand, automated methods shine in repetitive, pattern-based tasks like barcode recognition or basic bounding box creation. In safety-critical fields such as healthcare, aerospace, or autonomous driving, manual annotation or close human oversight is essential to ensure the highest level of accuracy.

Timeline and Budget:

Manual labeling costs grow linearly with the number of labels. While automated annotation might require a higher initial investment, its cost per label decreases significantly at scale, making it the better option for tight deadlines and large datasets.

Data Privacy:

Sensitive data, like medical or financial information, often calls for internal manual annotation to reduce privacy risks. Hybrid approaches can be beneficial when taxonomies or data distributions change frequently, allowing for quick adjustments by updating functions instead of re-labeling everything manually.

These factors can be distilled into the following decision framework:

Decision Criteria

| Factor | Use Manual | Use Automated | Use Hybrid |
|---|---|---|---|
| Dataset Size | Small to mid-sized | Massive volumes | Mid to large |
| Task Type | Nuanced, subjective | Repetitive, structured | Mixed complexity |
| Accuracy Needs | Safety-critical | Pattern-based | Production-grade |
| Timeline | Flexible | Tight deadlines | Moderate |
| Budget | High per label | Low at scale | Balanced |
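Teams sometimes encode a table like this as a small rule function, for example to document a default choice in a pipeline config. The sketch below is one hypothetical reading of the table: the category names and the precedence order (safety-critical or nuanced work first, then volume) are assumptions, not rules stated in this article.

```python
from enum import Enum


class Volume(Enum):
    SMALL = "small"      # small to mid-sized datasets
    LARGE = "large"      # mid to large
    MASSIVE = "massive"  # massive volumes


class Task(Enum):
    NUANCED = "nuanced"        # subjective, context-heavy
    MIXED = "mixed"            # mixed complexity
    REPETITIVE = "repetitive"  # structured, pattern-based


def recommend(volume: Volume, task: Task, safety_critical: bool) -> str:
    """Return 'manual', 'automated', or 'hybrid' (one reading of the decision table)."""
    if safety_critical or task is Task.NUANCED:
        # Human judgment is required; beyond small datasets, keep humans
        # in the loop via hybrid review instead of labeling everything by hand.
        return "manual" if volume is Volume.SMALL else "hybrid"
    if task is Task.REPETITIVE and volume is Volume.MASSIVE:
        return "automated"
    return "hybrid"  # mixed complexity or mid-sized volumes


print(recommend(Volume.MASSIVE, Task.REPETITIVE, safety_critical=False))  # automated
print(recommend(Volume.MASSIVE, Task.REPETITIVE, safety_critical=True))   # hybrid
```

Note that a safety-critical flag overrides volume: even massive, repetitive workloads keep humans in the loop, matching the earlier point that safety-critical fields need close human oversight.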

When to Choose Each Method:

Here’s how these criteria translate into practical recommendations:

Manual Annotation:

Manual annotation is the go-to for creating an initial "gold set" to train automation models, working with new taxonomies, or handling safety-critical tasks. For example, Google DeepMind used labeled mammograms from over 25,000 women in the UK and 3,000 in the US to train an AI system, achieving a 5.7% reduction in false positives in the US and a 1.2% reduction in the UK.

Automated Labeling:

Automated labeling is best for large datasets with consistent patterns, especially when a reliable pre-trained model is available. In 2025, BMW’s Regensburg plant adopted an AI system to analyze camera data for paint imperfections, drastically speeding up quality control compared to manual inspections.

Hybrid Methods:

Hybrid approaches are ideal for balancing accuracy and cost. For instance, you can implement confidence-aware triage, where high-confidence automated labels are batch-approved and low-confidence cases are reviewed by humans. Programmatic labeling can boost annotation speed by 10× to 100× compared to manual methods. Regularly auditing about 5% of high-confidence automated labels helps catch model drift early and maintain quality over time.
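The audit step can be sketched as drawing a random 5% sample of auto-approved labels, sending it to reviewers, and estimating the error rate with a rough confidence interval. The `review` callable is a hypothetical stand-in for a human review queue, and the normal-approximation interval is one simple choice; a Wilson interval behaves better when errors are rare or samples are small.

```python
import math
import random
from typing import Callable, Optional


def sample_for_audit(item_ids: list[str], rate: float = 0.05,
                     seed: Optional[int] = None) -> list[str]:
    """Pick a random subset (at least one item) of auto-approved labels for human audit."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    if not item_ids:
        return []
    k = max(1, round(len(item_ids) * rate))
    return random.Random(seed).sample(item_ids, k)


def estimate_error_rate(audited: list[str],
                        review: Callable[[str], bool]) -> tuple[float, float]:
    """Return (error rate, ~95% margin); review(item_id) is True if the label was wrong."""
    n = len(audited)
    if n == 0:
        raise ValueError("nothing was audited")
    errors = sum(review(item_id) for item_id in audited)
    p = errors / n
    margin = 1.96 * math.sqrt(p * (1 - p) / n)
    return p, margin


approved = [f"item-{i}" for i in range(2_000)]
audit_set = sample_for_audit(approved, rate=0.05, seed=7)
# Hypothetical reviewer verdicts: pretend every 20th audited item was mislabeled.
wrong = set(audit_set[::20])
rate, margin = estimate_error_rate(audit_set, lambda item_id: item_id in wrong)
print(len(audit_set), f"{rate:.1%} ± {margin:.1%}")
```

If the estimated error rate climbs from one audit to the next, that is a reasonable signal to raise the auto-approve threshold or retrain; with only 100 audited items, though, the margin is wide, so small shifts may be noise.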

Conclusion

Your annotation strategy should align with the specific needs of your project. Manual annotation is ideal for tasks that demand precision, like safety-critical or highly nuanced projects. On the other hand, automated labeling offers speed and cost-effectiveness, particularly when scaling. A hybrid approach combines the strengths of both, striking a balance between accuracy and efficiency. Ultimately, your choice should reflect your timeline and budget.

"Competitive edge now comes from the data quality, annotation consistency, and the velocity of your labeled-data pipeline, which determines how fast models improve." - Vikram Kedlaya, Taskmonk

This perspective highlights the importance of fostering strong collaboration across teams.

When product and engineering teams work closely together, and there’s a shared commitment to refining data continuously, model performance can reach new heights. Product teams bring domain expertise and define the requirements, while engineering teams design pipelines to maintain and enhance model performance. By aligning on key elements like ontologies, confidence thresholds, and quality gates, these teams can establish annotation workflows that are both fast and dependable.

Treating your data as a constantly evolving product further strengthens this foundation. Whether you’re creating a gold standard for a new domain, managing millions of labels, or tackling tricky edge cases, choosing the right annotation strategy will not only streamline production but also deliver meaningful results.

FAQs

How can I choose between manual annotation and automated labeling for my project?

Choosing between manual annotation and automated labeling comes down to what your project needs in terms of accuracy, speed, and scalability.

Manual annotation is known for its precision and works best for tasks that require a deep understanding or involve intricate data. That said, it can be a slow process and tends to be more expensive, especially when working with large datasets.

On the flip side, automated labeling is quicker and more budget-friendly, making it a great option for large-scale datasets or projects that demand fast turnarounds. However, it might not match the accuracy of manual annotation in more nuanced or complex cases. A mix of both approaches often strikes the right balance. For instance, automated methods can handle bulk tasks efficiently, while manual review can step in for the trickier parts to ensure accuracy.

To decide the best approach, consider your project's data complexity, deadlines, and budget. Often, a hybrid method offers the flexibility and results you need.

What are the advantages of combining automated labeling with manual annotation?

A hybrid approach to data annotation combines the speed and scalability of automated tools with the precision and contextual insights that only human expertise can provide. This fusion ensures better data quality, as humans step in to tackle complex or ambiguous cases that automated systems might struggle with.

It also enhances efficiency and scalability by leveraging automation for processing large datasets quickly, while reserving human effort for refining the more intricate or critical parts. Plus, as humans provide feedback, automated tools improve over time, creating a feedback loop that boosts accuracy and reduces errors.

By blending automation with human oversight, this approach offers a cost-efficient, precise, and scalable solution, making it a strong choice for preparing high-quality data to train machine learning models.

What can be done to improve automated labeling for complex tasks?

Automated labeling systems can tackle complex tasks more effectively by integrating human-in-the-loop workflows. This method leverages human expertise to double-check and fine-tune AI-generated labels, making it especially useful for cases where subtle or nuanced distinctions are involved. By combining the speed of AI with human judgment, the process becomes more accurate and less prone to errors.

Another powerful approach is task-specific prompt optimization. This involves refining the instructions and examples given to an AI model so it better grasps the context of the data it’s working with. By iterating on these prompts and frequently comparing the results against human-generated benchmarks, these systems can align more closely with human decision-making. On top of that, programmatic labeling can handle repetitive tasks automatically, while still allowing human experts to step in and refine the results when needed. Together, these strategies offer a scalable and precise way to manage even the most intricate labeling challenges.
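Comparing prompt variants against a human benchmark can be sketched as scoring each variant's outputs against a small human-labeled gold set and keeping the best one. The variant names, intent labels, and predictions below are invented for illustration; in practice the predictions would come from running a model with each prompt. Macro F1 is used here because it weights rare classes equally with common ones.

```python
def macro_f1(gold: list[str], pred: list[str]) -> float:
    """Unweighted mean of per-class F1 over every class seen in gold or pred."""
    if len(gold) != len(pred) or not gold:
        raise ValueError("gold and pred must be non-empty and the same length")
    scores = []
    for c in sorted(set(gold) | set(pred)):
        tp = sum(g == c and p == c for g, p in zip(gold, pred))
        fp = sum(g != c and p == c for g, p in zip(gold, pred))
        fn = sum(g == c and p != c for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def best_variant(gold: list[str], outputs: dict[str, list[str]]) -> tuple[str, float]:
    """Score every prompt variant against the gold labels; return the top one."""
    scored = {name: macro_f1(gold, preds) for name, preds in outputs.items()}
    best = max(scored, key=lambda name: scored[name])
    return best, scored[best]


# Hypothetical gold labels from human annotators and outputs from two prompt variants.
gold = ["billing", "fraud", "billing", "other", "fraud", "other"]
outputs = {
    "prompt_v1": ["billing", "billing", "billing", "other", "other", "other"],
    "prompt_v2": ["billing", "fraud", "billing", "other", "fraud", "billing"],
}
name, score = best_variant(gold, outputs)
print(name, round(score, 3))  # prompt_v2 0.822
```

Here `prompt_v1` never predicts the rare `fraud` class, so its macro F1 drops to about 0.53 even though it gets most `billing` and `other` items right; this is the kind of gap a human benchmark exposes.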