Build, evaluate and improve your LLM within one end-to-end workflow

Everything in one place

Design your prompts

AI-enhanced prompt editor to design and test your prompts at scale

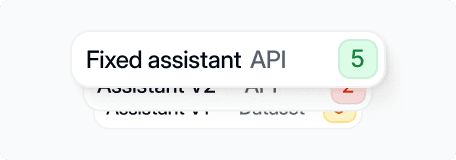

Evaluate, compare & refine

Run LLM-as-judge, human-in-the-loop, or ground-truth evaluations on real or synthetic data

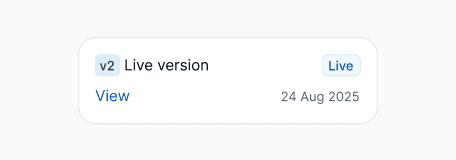

Deploy with confidence

Publish new prompts directly from Latitude and integrate them easily into production

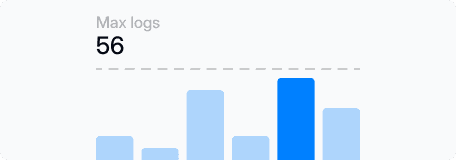

Observe in production

Monitor and debug your agents and prompts in production

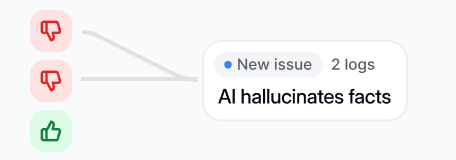

Discover failure patterns

Start annotating to discover issues in your AI. Our AI will follow and automate the process.

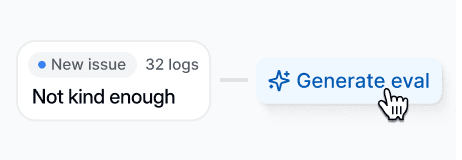

Generate AI evaluations

Generate LLM evaluations based on the issues in your AI to track escalations

Ship reliable AI 10X faster

Without hiring anyone

Build self-improving AI

Enter the Reliability Loop


See executions in Latitude

Get full observability of your AI in the dashboard

Annotate executions

Annotate your AI’s performance & collaborate with your team

Discover issues

Detect failure patterns in your project and iterate on your prompts

Create evaluations

Create custom evaluations to track issues and detect regressions

Get started now

Stop building blind

Build reliable AI today, no code needed. Start for free.

1. Watch your AI

Start annotating to discover issues in your AI. Our AI will follow and automate the process.

2. Detect failure patterns

Detect failure patterns and generate custom evaluations to track these issues

3. Observe & iterate

Latitude now does all the issue tracking so that you can dedicate more time to actually building


Latitude Data S.L. 2025

All rights reserved.

People are already building

You’re not the first AI PM.

Don’t be the last one.

Latitude is amazing! It’s like a CMS for prompts and agents with versioning, publishing, rollback

Alfredo @ Audiense