Explore top open-source tools for real-time prompt validation, enhancing AI reliability and efficiency in LLM workflows.

César Miguelañez

Feb 1, 2025

Prompt validation is essential for improving AI reliability and efficiency. Open-source tools now make it easier to test, refine, and optimize prompts for Large Language Models (LLMs). Here’s a quick overview of the top tools for real-time prompt validation:

Promptfoo: Validates prompts locally with debugging tools, CI/CD integration, and multi-model comparisons.

Agenta: Combines prompt engineering, version control, and evaluation in one platform for large-scale projects.

Helicone: Tracks performance, prevents prompt injection risks, and offers cost analytics with a focus on production environments.

LangSmith: Provides advanced debugging, testing, and observability for LangChain-based workflows (not open-source).

Latitude: Focuses on collaboration, version control, and real-time validation for production-grade prompt engineering.

Quick Comparison Table

| Feature | Promptfoo | Agenta | Helicone | LangSmith* | Latitude |
|---|---|---|---|---|---|
| Validation | Advanced tools | Parameter testing | Real-time testing | Interactive tools | Real-time engine |
| Collaboration | Basic sharing | Multi-user space | Team collaboration | Version control | Expert workflows |
| Integration | Multi-LLM support | Platform-specific | Comprehensive logs | LangChain-focused | Major LLM platforms |
| Monitoring | Core metrics | Parameter tracking | Usage analytics | Performance metrics | Production monitoring |

*LangSmith is not open-source but integrates with open-source tools.

These tools help teams reduce errors, improve reliability, and optimize costs in LLM workflows. Start with Promptfoo for basic testing, scale with Helicone, and use Latitude for expert collaboration.

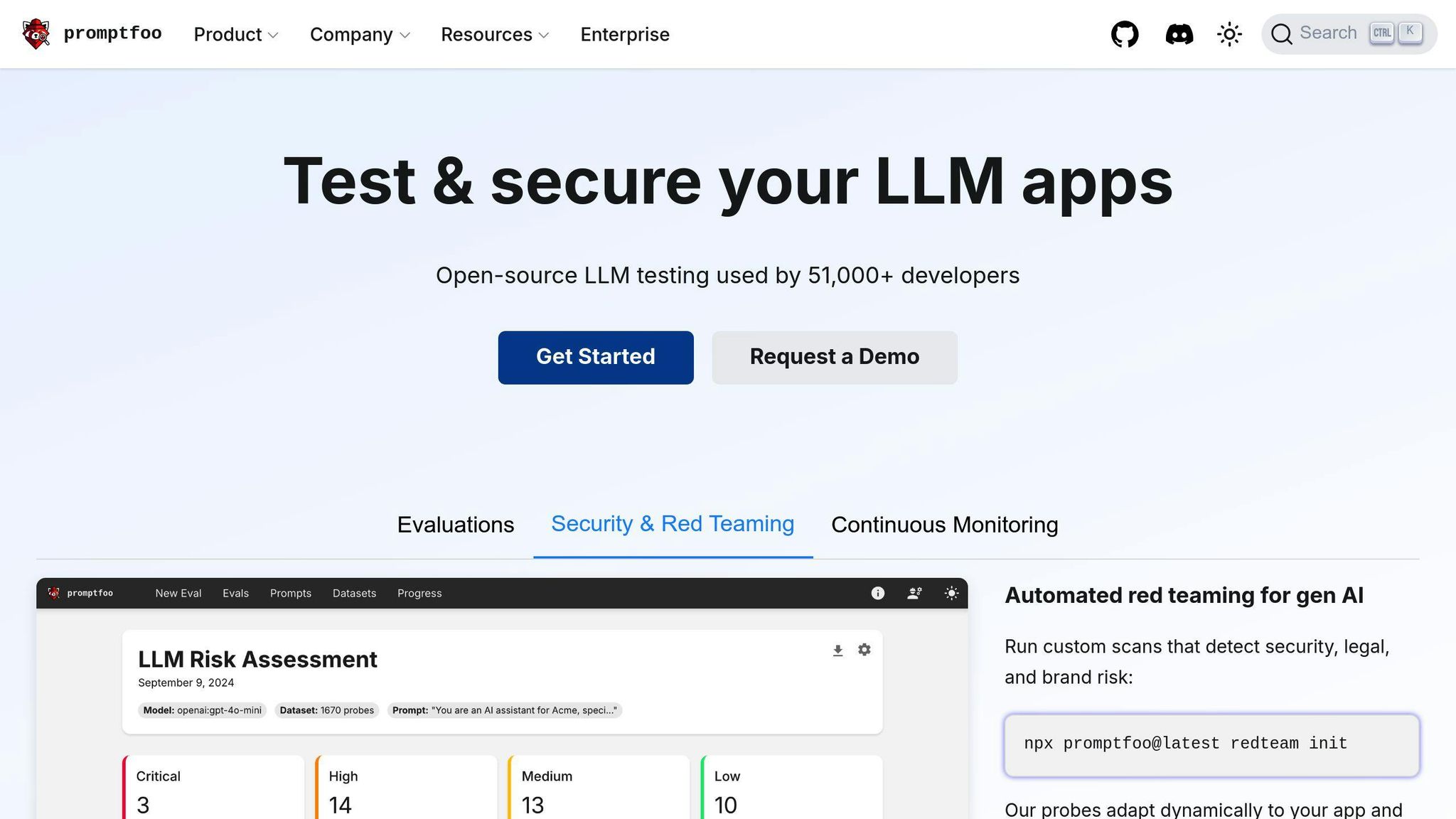

1. Promptfoo

Promptfoo is an open-source tool for evaluating LLM output and validating prompts in real time. Because it runs on your local machine, test cases and results stay under your control, and prompts go only to the model providers you configure.

The tool validates both the format and the content of responses. It checks that output follows a required format, such as JSON, and that it meets criteria such as language requirements, summary length, and keyword usage. Promptfoo works with many LLM providers, including OpenAI, Anthropic, Azure, Google, and HuggingFace, as well as open models like Llama. This makes it easy to compare multiple models side by side while refining a prompt.
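The kinds of checks described above (valid JSON, a length limit, required keywords) can be sketched in a few lines of plain Python. This is an illustration of the idea only, not Promptfoo's API: the `CheckResult` class and the `check_*` and `validate` functions are hypothetical names chosen for this example.

```python
import json
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def check_is_json(output: str) -> CheckResult:
    """Pass when the model output parses as JSON."""
    try:
        json.loads(output)
        return CheckResult("is_json", True)
    except json.JSONDecodeError as err:
        return CheckResult("is_json", False, str(err))


def check_max_words(output: str, limit: int) -> CheckResult:
    """Pass when the output has at most `limit` whitespace-separated words."""
    count = len(output.split())
    return CheckResult("max_words", count <= limit, f"{count} words")


def check_keywords(output: str, required: list[str]) -> CheckResult:
    """Pass when every required keyword appears (case-insensitive)."""
    lowered = output.lower()
    missing = [kw for kw in required if kw.lower() not in lowered]
    return CheckResult("keywords", not missing, f"missing: {missing}" if missing else "")


def validate(output: str, max_words: int, keywords: list[str]) -> list[CheckResult]:
    """Run all three checks and return their results in a fixed order."""
    return [
        check_is_json(output),
        check_max_words(output, max_words),
        check_keywords(output, keywords),
    ]


if __name__ == "__main__":
    sample = '{"summary": "Refunds are processed within 5 days."}'
    for result in validate(sample, max_words=20, keywords=["refund"]):
        print(result.name, "PASS" if result.passed else "FAIL", result.detail)
```

Returning one result per check, rather than a single boolean, keeps the failure reason visible, which is what makes this style of validation useful for debugging a prompt.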

For development teams, Promptfoo provides a collaborative environment with several key features:

| Feature | Description |
|---|---|
| Web GUI | Explore test results interactively and share findings easily |
| Matrix Views | Evaluate outputs from multiple prompts and inputs at a glance |
| CI/CD Integration | Automate testing in continuous integration workflows |
| Caching System | Speeds up performance with intelligent result storage |
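To show how a matrix view and a result cache fit together, here is a minimal sketch that evaluates every prompt template against every input and reuses stored outputs for repeated calls. It assumes `str.format`-style templates with a `{text}` placeholder; `cached_call`, `run_matrix`, and `fake_model` are hypothetical names, and `fake_model` stands in for a real provider call so the example runs offline.

```python
import hashlib
from itertools import product
from typing import Callable

# In-memory cache: hash of (model name, prompt) -> model output.
_cache: dict[str, str] = {}


def cached_call(model: Callable[[str], str], model_name: str, prompt: str) -> str:
    """Return a stored result when the same model/prompt pair was seen before."""
    key = hashlib.sha256(f"{model_name}\x00{prompt}".encode()).hexdigest()
    if key not in _cache:
        _cache[key] = model(prompt)
    return _cache[key]


def run_matrix(templates, inputs, model, model_name):
    """Evaluate every template against every input.

    Returns a dict mapping (template, input) to the model output.
    """
    grid = {}
    for template, value in product(templates, inputs):
        prompt = template.format(text=value)
        grid[(template, value)] = cached_call(model, model_name, prompt)
    return grid


def fake_model(prompt: str) -> str:
    # Stand-in for a real provider call (assumption for this sketch).
    return prompt.upper()


if __name__ == "__main__":
    templates = ["Summarize: {text}", "Translate to French: {text}"]
    inputs = ["hello world", "good morning"]
    for (template, value), output in run_matrix(templates, inputs, fake_model, "fake").items():
        print(f"{template!r} x {value!r} -> {output!r}")
```

Keying the cache on both the model name and the rendered prompt means a second run over an unchanged matrix makes no new model calls, while changing either the template or the input triggers a fresh one.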

Its debugging tools are especially helpful for real-time validation. When working with Retrieval-Augmented Generation (RAG) pipelines, Promptfoo gives detailed insights into each step. This helps teams quickly spot and fix issues, ensuring smooth performance in production.

Installation is straightforward using npm or brew, and the tool’s user-friendly design ensures accessibility for non-engineers while offering the depth needed for complex enterprise use cases.

With its local-first approach and detailed testing capabilities, Promptfoo is a reliable choice for maintaining high standards in LLM projects and a solid starting point for teams that are new to prompt validation.

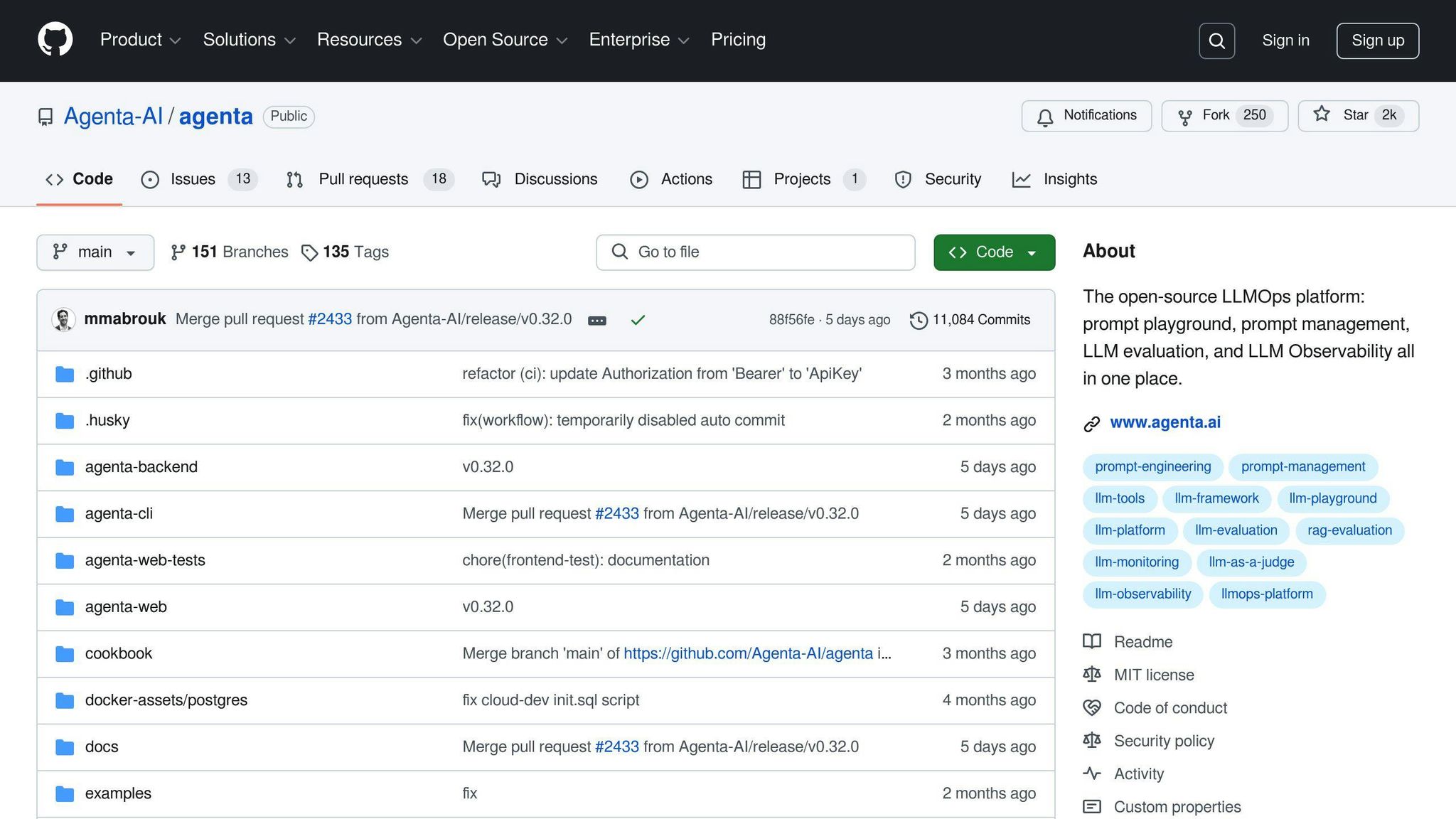

2. Agenta

Agenta is an open-source platform designed for managing LLM operations (LLMOps). It combines prompt engineering, version tracking, evaluation, and monitoring into one system, offering a structured way to ensure AI outputs meet expectations through detailed assessments.

The platform includes an interactive playground for testing and refining prompts in real time. It’s built to handle complex workflows like Retrieval-Augmented Generation (RAG) and prompt chains, all while maintaining precise version control.

For teams working on large-scale LLM projects, Agenta provides several collaborative tools:

| Feature | Purpose |
|---|---|
| Version Control | Keep track of changes and ensure consistency across updates |
| Environment Management | Deploy prompts to specific environments with version tracking |
| Evaluation Tools | Connect prompt variations to experiments and monitor performance metrics |
| Debugging Interface | Pinpoint and resolve issues quickly by identifying root causes |
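The combination of version control and environment management can be pictured as an append-only list of prompt versions plus a pointer per environment. The sketch below is a simplified model of that idea, not Agenta's SDK; `PromptRegistry` and its methods are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Append-only prompt versions plus named environment pointers."""

    versions: list[str] = field(default_factory=list)
    environments: dict[str, int] = field(default_factory=dict)

    def commit(self, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(template)
        return len(self.versions)

    def deploy(self, environment: str, version: int) -> None:
        """Point an environment at an existing version."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.environments[environment] = version

    def fetch(self, environment: str) -> str:
        """Return the template currently deployed to an environment."""
        return self.versions[self.environments[environment] - 1]


if __name__ == "__main__":
    registry = PromptRegistry()
    v1 = registry.commit("Answer briefly: {question}")
    v2 = registry.commit("Answer briefly and cite sources: {question}")
    registry.deploy("production", v1)
    registry.deploy("staging", v2)
    print(registry.fetch("production"))
    print(registry.fetch("staging"))
```

Because versions are never edited in place, rolling back is just another `deploy` call that points the environment at an earlier version number.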

Agenta also reduces maintenance with its self-healing features, which automatically adjust test scripts to match UI or API changes. It supports both code-based and web UI workflows for creating prompts, catering to both technical and non-technical users. Detailed performance tracking for each prompt allows teams to analyze metrics and make improvements as needed.

The platform fosters collaboration by offering a structured workspace where product owners and specialists can work together to design prompts while maintaining high standards and version tracking. For troubleshooting, users can toggle specific action groups or knowledge bases to address agent behavior issues, ensuring prompts deliver the desired results.

With its broad feature set, Agenta provides a reliable solution for teams looking to streamline prompt validation and management. Up next is Helicone, which focuses on production environments.

3. Helicone

Helicone is an open-source platform designed to simplify real-time prompt validation. Think of it as a version control system for prompts, allowing developers to manage, refine, and improve their LLM applications effectively. Its Gateway architecture supports detailed logging and handles large request loads, making it a great fit for teams dealing with complex LLM workflows.

For those focused on improving prompts, Helicone provides a range of useful features:

| Feature | What It Does | Why It Matters |
|---|---|---|
| Real-time Validation | Test multiple prompt versions at once | Speeds up iteration cycles |
| Performance Tracking | Tracks usage, latency, and effectiveness | Enables data-driven changes |
| Security Suite | Detects and prevents prompt injection risks | Keeps applications secure |
| Edge Caching | Speeds up response delivery | Cuts costs and reduces lag |

The Sessions feature is especially helpful for debugging. It groups and visualizes multi-step LLM interactions, making it easier to spot and fix issues in complex workflows. A great example of this is QA Wolf, which used Helicone to fine-tune its agents until they reached 100% accuracy.
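Grouping multi-step interactions by session, as described above, can be sketched as follows. This is a generic illustration rather than Helicone's data model: the `LogEntry` fields and both helper functions are assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogEntry:
    session_id: str  # identifier shared by all steps of one interaction
    step: int        # position of the call within the session
    name: str        # human-readable step label
    ok: bool         # whether the step produced a usable result


def group_by_session(entries):
    """Bucket log entries by session and order each bucket by step."""
    sessions = defaultdict(list)
    for entry in entries:
        sessions[entry.session_id].append(entry)
    for steps in sessions.values():
        steps.sort(key=lambda e: e.step)
    return dict(sessions)


def first_failure(steps):
    """Return the earliest failed step in a session, or None if all passed."""
    return next((s for s in steps if not s.ok), None)


if __name__ == "__main__":
    logs = [
        LogEntry("s1", 2, "summarize", False),
        LogEntry("s1", 1, "retrieve", True),
        LogEntry("s2", 1, "retrieve", True),
    ]
    for session_id, steps in group_by_session(logs).items():
        failed = first_failure(steps)
        print(session_id, "failed at " + failed.name if failed else "ok")
```

Looking for the earliest failed step matters in multi-step workflows because later failures are often just consequences of the first one.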

Helicone also offers a Prompt Management system that tracks and compares different prompt versions without disrupting workflows. This is particularly useful for teams that want to analyze variations against historical data. Plus, with cost analytics and a free tier, teams can experiment with strategies while keeping expenses in check. For those who need full control, Helicone can be self-hosted, giving organizations complete oversight of the validation process.
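Comparing prompt versions against historical data comes down to grouping logged requests by version and aggregating a few metrics. The sketch below assumes each log row is a dict with `version`, `latency_ms`, `cost_usd`, and `success` keys; these field names are hypothetical and do not reflect Helicone's actual log schema.

```python
from collections import defaultdict
from statistics import mean


def summarize(logs):
    """Aggregate request logs into per-version latency, cost, and success metrics."""
    by_version = defaultdict(list)
    for row in logs:
        by_version[row["version"]].append(row)
    return {
        version: {
            "requests": len(rows),
            "avg_latency_ms": mean(r["latency_ms"] for r in rows),
            "total_cost_usd": round(sum(r["cost_usd"] for r in rows), 6),
            "success_rate": sum(r["success"] for r in rows) / len(rows),
        }
        for version, rows in by_version.items()
    }


if __name__ == "__main__":
    history = [
        {"version": "v1", "latency_ms": 100, "cost_usd": 0.002, "success": True},
        {"version": "v1", "latency_ms": 300, "cost_usd": 0.004, "success": False},
        {"version": "v2", "latency_ms": 150, "cost_usd": 0.001, "success": True},
    ]
    for version, stats in summarize(history).items():
        print(version, stats)
```

With a report like this, a team can see whether a new prompt version is cheaper or faster than its predecessor before promoting it.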

Beyond basic validation, Helicone includes moderation tools and security features to ensure outputs remain safe and appropriate. Its combination of validation tools and security measures makes it a strong choice for production LLM workflows. Up next is LangSmith, the one tool on this list that is not open-source.

4. LangSmith

LangSmith is designed to simplify real-time prompt validation and streamline LLM development. Created by the LangChain team, it offers tools for debugging, testing, and evaluating prompts while ensuring production-level reliability.

One standout feature is its observability tools, which provide a clear view of how prompts perform in practical scenarios. Developers can track response times, monitor error rates, and analyze feedback trends through a user-friendly interface that maps out the entire prompt processing workflow.

| Feature Category | Capabilities | Benefits |
|---|---|---|
| Debugging Tools | Chain visualization, Trace logging | Pinpoints bottlenecks and reasoning errors |
| Testing Suite | Dataset management, Automated evaluations | Ensures prompt consistency and reliability |
| Production Analytics | Performance monitoring, Usage metrics | Enables data-driven improvements |
| Collaboration | Prompt crafting hub, Version control | Simplifies teamwork and version management |

What sets LangSmith apart is its ability to analyze the interactions between chains, agents, and tools. The Playground feature allows developers to tweak inputs and parameters in real time, speeding up iterations without requiring code changes.

LangSmith also supports strong evaluation workflows, including integration with Ragas metrics to assess context precision, faithfulness, and relevancy. Its effectiveness was highlighted in a recent review:

"LangSmith provides essential tools for observability, evaluation, and optimization." - Cohorte Team

With simple API calls, teams can gather and analyze user feedback during beta testing. The platform's test suites use real-world datasets, while the chain visualization feature helps quickly identify and fix prompt failures.
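To make the context precision metric mentioned earlier more concrete, here is a simplified sketch of a rank-weighted precision score over retrieved chunks. It follows the general shape of the metric (precision at each rank where a relevant chunk appears, averaged over the relevant chunks), but it is not the Ragas implementation: in particular, relevance labels are supplied by hand here, whereas an evaluation framework would typically have a model judge them.

```python
def context_precision(relevance: list[bool]) -> float:
    """Rank-weighted precision over retrieved chunks.

    relevance[i] says whether the chunk at rank i + 1 is relevant to the question.
    Returns 0.0 when no chunk is relevant.
    """
    hits = 0
    weighted = 0.0
    for rank, relevant in enumerate(relevance, start=1):
        if relevant:
            hits += 1
            # precision@rank, counted only at ranks holding a relevant chunk
            weighted += hits / rank
    return weighted / hits if hits else 0.0


if __name__ == "__main__":
    # Relevant chunks ranked first score higher than the same chunks ranked last.
    print(context_precision([True, True, False]))
    print(context_precision([False, True, True]))
```

The score rewards retrievers that place relevant chunks near the top, which is why two result lists with the same chunks can score differently.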

Although LangSmith isn't open-source, it works seamlessly with multiple LLM frameworks beyond LangChain. This flexibility makes it a practical choice for teams that rely on open-source tools while needing advanced testing and analytics.

LangSmith stands out for its detailed observability features and robust testing tools, making it a strong option for teams focused on refining LLM performance. Up next, we’ll take a closer look at Latitude, another key tool in this field.

5. Latitude

Latitude is an open-source platform crafted for developing production-grade features for large language models (LLMs). It bridges the gap between domain experts and engineers, creating a shared space for prompt engineering and validation.

One of its standout features is real-time validation, which helps catch issues early, minimizes unnecessary API calls, and cuts down on deployment costs.

| Feature | Capability | Impact |
|---|---|---|
| Collaborative Tools | Multi-user prompt crafting and testing | Improves communication between experts and developers |
| Validation Engine | Real-time prompt structure verification | Reduces errors and boosts response reliability |
| Integration Support | Works with major LLM platforms | Fits smoothly into existing workflows |
| Testing Framework | Systematic prompt evaluation | Maintains quality and prevents regression |
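Verifying prompt structure before a request is sent is one way validation avoids unnecessary API calls. The sketch below checks a `str.format`-style template against the variables supplied for it. It is a generic illustration and not Latitude's engine or template syntax; `required_variables` and `validate_prompt` are hypothetical names.

```python
from string import Formatter


def required_variables(template: str) -> set[str]:
    """Collect the named placeholders in a str.format-style template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def validate_prompt(template: str, variables: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the prompt can be sent."""
    needed = required_variables(template)
    provided = set(variables)
    problems = []
    for name in sorted(needed - provided):
        problems.append(f"missing variable: {name}")
    for name in sorted(provided - needed):
        problems.append(f"unused variable: {name}")
    for name in sorted(needed & provided):
        if not variables[name].strip():
            problems.append(f"empty variable: {name}")
    return problems


if __name__ == "__main__":
    template = "Summarize {document} in {language}."
    issues = validate_prompt(template, {"document": "Quarterly report text"})
    if issues:
        print("Not sending request:", issues)
    else:
        print(template.format(document="Quarterly report text"))
```

Running a check like this on every edit catches structural mistakes at authoring time, before they cost a model call or reach production.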

Latitude also includes debugging tools that provide detailed performance insights. This allows teams to quickly detect and fix issues using real-world data.

The platform benefits from a strong community, offering helpful documentation and resources for beginners while sharing advanced techniques for experienced users.

Latitude is built with production readiness in mind, offering tools like:

Version Control: Tracks prompt changes and their impact on performance.

Performance Monitoring: Analyzes prompt effectiveness in real time.

As an open-source tool, Latitude invites community contributions and allows for customization. Its seamless integration into workflows makes it a practical choice for teams focused on refining prompt validation.

With its collaborative features and robust tools, Latitude helps ensure quality and consistency in prompt engineering, making it a strong option for real-time validation needs.

Comparison Table

Here's a side-by-side look at the five tools covered above, four of them open-source, highlighting their main features to help you decide which one fits your needs best.

| Feature | Promptfoo | Agenta | Helicone | LangSmith* | Latitude |
|---|---|---|---|---|---|
| Validation & Testing | Advanced validation tools | Parameter testing | Advanced production testing | Interactive testing tools | Real-time validation engine |
| Integration Options | Multiple LLM providers | Platform-specific | Comprehensive logging | LangChain-focused | Major LLM platforms |
| Community Support | Active GitHub | Open-source resources | Documentation hub | Extensive resources | Strong community |
| Collaboration & Versioning | Basic sharing tools | Multi-user workspace | Team collaboration | Dataset management | Complete version control |
| Monitoring | Core metrics | Parameter tracking | Usage and cost analytics | Performance metrics | Production monitoring |

*Note: LangSmith is not open-source but integrates with open-source tools.

Promptfoo offers structured testing to reduce LLM hallucinations and includes advanced validation tools.

Agenta supports experimental workflows, making it easier to refine prompts through collaborative testing.

Helicone stands out with its detailed analytics and extensive logging capabilities.

LangSmith is particularly useful for LangChain-based projects, offering streamlined testing tools.

Latitude emphasizes production-level features, with strong monitoring and version control.

Each tool brings something different to the table, enhancing your ability to validate and fine-tune prompts effectively.

Conclusion

Developers are creating dependable and cost-effective AI solutions by refining prompt validation processes. These tools have shown their importance in day-to-day LLM workflows. For example, Promptfoo improves reliability through structured testing, while Helicone helps cut API expenses with its detailed logging and analytics features. The open-source community plays a key role in driving these advancements forward.


For those ready to dive into real-time prompt validation, here’s a simple approach:

Begin with Promptfoo to handle basic validation tasks.

Leverage Helicone for scaling in production environments.

Partner with Latitude to access expert-engineer workflows.

Following these steps can help teams kick off their prompt validation efforts effectively. As more LLM features move into production, validation is what lets teams integrate and scale them with confidence, and contributing to the open-source projects above is a direct way to shape how these tools evolve.

Each tool mentioned here plays an important role in fostering a collaborative space where teams can refine their workflows and achieve better outcomes. Effective prompt validation ensures that AI systems are reliable, efficient, and user-friendly.