Explore essential techniques for reducing latency in LLM streaming, focusing on hardware, software optimization, and advanced processing methods.

César Miguelañez

May 12, 2025

Reducing latency in large language model (LLM) streaming is essential for real-time applications like chatbots and live translation. Here's a quick guide to the main strategies:

Hardware Acceleration: Use inference-optimized hardware like AWS Inf2 instances or Groq chips to raise token throughput by 2–10× (100–300 tokens per second), or NVIDIA H100 GPUs for 36–52% lower latency than the A100.

Software Optimization: Techniques like token compression (20–40% faster generation) and smart context management improve response times for interactive applications.

Streaming Responses: Deliver text incrementally for a smoother user experience without waiting for full responses.

Scaling with Tensor Parallelism: Going from 2× to 4× tensor parallelism reduces latency by up to 33% at a batch size of 16, ideal for high-volume processing.

Monitoring Tools: Track performance metrics like time-to-first-token (TTFT) to fine-tune systems.

Quick Comparison

| Optimization Approach | Token Throughput | Latency Reduction | Best Use Case |
|---|---|---|---|
| AWS Inf2 / Groq Chips | 100–300 tokens/s | 2–10× throughput improvement | High-volume production |
| NVIDIA H100 GPUs (Batch 16) | Configuration-dependent | 52% reduction vs. A100-40GB | Scalable operations |
| Token Compression | N/A | 20–40% faster generation | Long-form content |
| Smart Context Management | N/A | Faster first token | Interactive apps |

These methods help balance speed, cost, and efficiency, making LLM streaming smoother and more responsive for various workloads.

Core Latency Optimization Methods

Reducing latency in LLM streaming requires a combination of hardware and software improvements working together.

Hardware Acceleration Techniques

To tackle latency, specialized hardware plays a key role. For instance, inference-optimized hardware like AWS Inf2 instances and Groq chips can boost token throughput by 2–10×, achieving speeds of 100–300 tokens per second on large models like Llama 70B. Similarly, NVIDIA’s H100-80GB GPUs, with 2.15× the memory bandwidth of the A100-40GB, provide substantial gains: 36% lower latency at a batch size of 1 and up to 52% lower latency at a batch size of 16.

Software Optimization Strategies

On the software side, several strategies work to further cut down latency. Token compression, for example, reduces computational demand by compressing multiple tokens into a more efficient format, resulting in a 20–40% reduction in generation time. Smart context management focuses on processing only the relevant parts of a conversation, leading to faster responses.

| Optimization Technique | Impact on Latency | Best Use Case |
|---|---|---|
| Token Compression | 20–40% reduction | Long-form content generation |
| Smart Context Management | Reduced time-to-first token | Interactive applications |
| Continuous Batching | 2–10× throughput increase | High-volume processing |
| Tensor Parallelism | 33% reduction at batch size 16 | Large-scale deployment |
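Smart context management can be sketched as trimming conversation history to a token budget before each request. The example below is a minimal illustration, not a specific library's API: `estimate_tokens`, `trim_history`, and the one-token-per-word estimate are assumptions made for the sketch, and a real system would count tokens with the model's own tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough estimate (assumption: one token per whitespace-separated word)."""
    return len(text.split())


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards so the newest turns are considered first.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    # Restore chronological order before sending to the model.
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Tell me about GPUs in great detail please"},
    {"role": "assistant", "content": "GPUs offer high memory bandwidth for inference"},
    {"role": "user", "content": "And what about latency?"},
]
trimmed = trim_history(history, max_tokens=16)
print([m["role"] for m in trimmed])
```

With a 16-token budget, the oldest user turn is dropped while the system message and the two most recent turns are kept. A shorter prompt means less work before the first token can be produced.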

Advanced Processing Methods

Dynamic approaches like in-flight batching manage inference requests in real time, optimizing throughput under varying workloads. Tensor parallelism offers another layer of improvement but is most effective with larger batch sizes. For example, with the MPT-7B model, increasing tensor parallelism from 2× to 4× yields only around a 12% latency reduction at a batch size of 1, but this jumps to a 33% latency reduction at a batch size of 16.
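To see why in-flight (continuous) batching helps, the toy simulation below counts decode steps for the same set of requests under two policies. It is a simplified sketch under stated assumptions: every active request produces one token per step, prefill cost is ignored, and the function names and request lengths are invented for illustration.

```python
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Static batching: each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Continuous batching: a finished request frees its slot immediately."""
    waiting = deque(lengths)
    active: List[int] = []  # remaining tokens for each in-flight request
    steps = 0
    while waiting or active:
        # Fill free slots before every decode step.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        steps += 1
        # One decode step emits one token per active request;
        # requests with no tokens left drop out of the batch.
        active = [remaining - 1 for remaining in active if remaining > 1]
    return steps


output_lengths = [10, 2, 2, 10, 2, 2]  # hypothetical output lengths in tokens
static_steps = static_batching_steps(output_lengths, batch_size=2)
continuous_steps = continuous_batching_steps(output_lengths, batch_size=2)
print(static_steps, continuous_steps)  # 22 14
```

In this example the static policy needs 22 steps because short requests wait for long ones in the same batch, while the continuous policy needs 14 because no slot sits idle.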

Response Generation Optimization

Adjusting how responses are generated can also make a big difference. Setting explicit length constraints in prompts - such as limiting responses to 50 words - helps minimize unnecessary token generation and keeps performance steady. Streaming responses, where content is delivered incrementally, creates a smoother user experience. This approach allows users to start engaging with the content immediately, mimicking the effect of watching someone type in real time.
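The sketch below illustrates both ideas with a stand-in for a model: a generator that yields tokens incrementally with a hard cap on output length, and a consumer that records time to first token (TTFT) separately from total time. `fake_token_stream` and `consume_stream` are hypothetical names, and the per-token delay is simulated rather than measured.

```python
import time
from typing import Iterator, List, Tuple


def fake_token_stream(text: str, delay_s: float = 0.01, max_tokens: int = 50) -> Iterator[str]:
    """Stand-in for a model: yields one word at a time, capped at max_tokens."""
    for word in text.split()[:max_tokens]:
        time.sleep(delay_s)  # simulated per-token generation time
        yield word


def consume_stream(stream: Iterator[str]) -> Tuple[List[str], float, float]:
    """Collect tokens as they arrive; return (tokens, ttft_seconds, total_seconds)."""
    start = time.perf_counter()
    ttft = 0.0
    tokens: List[str] = []
    for token in stream:
        if not tokens:
            ttft = time.perf_counter() - start  # first token has arrived
        tokens.append(token)  # a UI would render the token here
    total = time.perf_counter() - start
    return tokens, ttft, total


reply = "Streaming lets users read the first words while the rest is still being generated"
tokens, ttft, total = consume_stream(fake_token_stream(reply, max_tokens=8))
print(f"{len(tokens)} tokens, first after {ttft:.3f}s, all after {total:.3f}s")
```

The total time is unchanged by streaming; what improves is how soon the user sees something, which is why TTFT is tracked as its own metric.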

1. GPU and TPU Performance Comparison

When it comes to optimizing latency for LLM streaming, deciding between Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) is a key choice. Both have their strengths, tailored to different needs, and understanding their performance, integration, workload fit, and cost implications can help make the right call.

Performance Characteristics

High-end GPUs, like NVIDIA's offerings, stand out for their high memory bandwidth and quick token generation - both crucial for LLM streaming tasks. On the other hand, Google's TPUs shine in matrix multiplication, a fundamental part of LLM inference. To compare these architectures effectively, focus on factors like memory bandwidth, peak performance, ideal batch size, and power efficiency.
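Memory bandwidth matters because, at small batch sizes, generating each token generally requires reading the model weights from memory. A back-of-the-envelope bound follows from that. The sketch below assumes weight reads are the only cost, and the baseline bandwidth figure is a placeholder rather than a vendor specification.

```python
def max_decode_tokens_per_second(
    params_billion: float, bytes_per_param: float, bandwidth_gb_s: float
) -> float:
    """Upper bound on single-stream decode speed if weight reads are the only cost."""
    model_size_gb = params_billion * bytes_per_param  # 1e9 params * bytes/param = GB
    return bandwidth_gb_s / model_size_gb


BASELINE_BANDWIDTH_GB_S = 1000.0  # hypothetical baseline, not a real device spec

# A 7B-parameter model stored in 16-bit precision (2 bytes per parameter).
baseline = max_decode_tokens_per_second(7, 2, BASELINE_BANDWIDTH_GB_S)
faster = max_decode_tokens_per_second(7, 2, BASELINE_BANDWIDTH_GB_S * 2.15)
print(f"baseline bound: {baseline:.1f} tokens/s, with 2.15x bandwidth: {faster:.1f} tokens/s")
```

Measured gains are usually smaller than this bound, since compute, communication, and scheduling overheads remain after the bandwidth limit is lifted.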

System Integration Considerations

GPUs are versatile and integrate smoothly across a wide range of deployments. With tools like NVIDIA's CUDA, they work seamlessly with major LLM frameworks, making them adaptable to different infrastructures. TPUs, however, often require specific system setups and are typically accessed via cloud platforms, which can influence how and where they are deployed.

Workload-Specific Performance

The nature of the workload also plays a big role in performance. For real-time streaming with a focus on low latency, GPUs perform better at smaller batch sizes. TPUs, however, are more efficient in high-throughput scenarios, particularly when dealing with larger batch sizes.

Cost-Performance Balance

Cost is always a factor, and power efficiency can tip the scales. While TPUs may offer better power efficiency in some configurations, GPUs often provide greater scalability and flexibility. This makes GPUs a more cost-effective choice, especially for smaller-scale deployments. These considerations directly impact the responsiveness and efficiency of LLM streaming setups.

2. Purpose-Built Inference Hardware

Building on the comparison of GPUs and TPUs, purpose-built inference hardware takes latency reduction in LLM streaming a step further. Designed specifically for transformer inference, this hardware significantly improves streaming performance compared to standard systems.

Performance Capabilities

Purpose-built hardware like AWS Inf2 instances and Groq chips can process 100–300 tokens per second on models such as Llama 70B, delivering a 2–10× performance boost over conventional hardware setups. Newer general-purpose GPUs show generational gains of their own. Compared with the A100-40GB, the H100-80GB GPU delivers:

36% lower latency at batch size 1

52% lower latency at batch size 16

2.15× higher memory bandwidth compared to A100-40GB GPUs

These improvements highlight how tailored hardware can unlock higher efficiency and speed.
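The percentages above are latency reductions, not speedup factors, and the two are easy to confuse. A latency reduction of r percent corresponds to a speedup of 1 / (1 - r/100). The helper below (a hypothetical name, shown only to make the arithmetic explicit) converts one to the other.

```python
def speedup_from_latency_reduction(reduction_pct: float) -> float:
    """Convert 'r% lower latency' into an equivalent speedup factor."""
    if not 0 <= reduction_pct < 100:
        raise ValueError("reduction_pct must be in the range [0, 100)")
    return 1.0 / (1.0 - reduction_pct / 100.0)


for label, pct in [("batch size 1", 36), ("batch size 16", 52)]:
    factor = speedup_from_latency_reduction(pct)
    print(f"{label}: {pct}% lower latency is about {factor:.2f}x faster")
```

So 36% lower latency is roughly a 1.56× speedup and 52% is roughly 2.08×, both in the same range as the 2.15× bandwidth increase.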

Scaling and Batch Processing

One standout feature of purpose-built hardware is its ability to scale effectively with larger workloads. The impact of tensor parallelism on latency reduction varies by batch size:

| Batch Size | Latency Reduction (4× vs. 2× Tensor Parallelism) |
|---|---|
| 1 | 12% |
| 16 | 33% |

This scalability is especially valuable in production environments where handling high concurrent loads is critical. Specialized hardware ensures consistent, low latency while also delivering better power efficiency and cost savings.

Power Efficiency Considerations

Another major advantage is improved power efficiency. Purpose-built chips are designed to reduce heat generation and cooling demands, resulting in a higher performance-per-watt ratio. This translates to lower operational costs while maintaining top-tier performance.

"You can't optimize what you don't measure", explains Graphsignal's performance monitoring documentation. This underscores the importance of tracking efficiency metrics to maximize the benefits of purpose-built hardware.

Monitoring and Optimization

To get the most out of these systems, performance monitoring tools like ArtificialAnalysis.ai are essential. They provide comprehensive benchmarks and continuous latency tracking, enabling fine-tuned hardware configurations. This ensures that LLM streaming achieves both high throughput and consistently low latency in demanding production environments.

3. LLM Streaming Frameworks

LLM streaming frameworks are designed to reduce latency by efficiently handling data and delivering text incrementally. This ensures smooth performance, even under varying workloads.

Core Framework Components

These frameworks rely on several essential components that work together to minimize delays:

Token Processing: Handles the step-by-step generation of text.

Memory Management: Ensures efficient use of resources and caching.

Queue Orchestration: Manages multiple requests simultaneously for better performance.

Stream Processing Improvements

Modern frameworks use smart techniques to balance speed and efficiency. For instance, token-level streaming sends text one piece at a time as it's generated, instead of waiting for the entire response to be ready. Parallel inference lets systems handle multiple requests at once, while smart caching avoids repeating the same computations. These advancements make it easier to integrate tools like Latitude, which bring together optimization and monitoring in one platform.
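As a minimal illustration of the caching idea, the sketch below memoizes an expensive call so that an identical prompt is computed only once. It is an assumption-laden simplification: `generate` is a hypothetical stand-in for a model call, and serving frameworks typically cache at a lower level (for example, reusing attention key/value state for shared prompt prefixes) rather than whole responses.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the "model" actually runs


@lru_cache(maxsize=1024)
def generate(prompt: str) -> str:
    """Hypothetical stand-in for an expensive model call."""
    CALLS["count"] += 1
    return prompt.upper()


first = generate("summarize the release notes")
second = generate("summarize the release notes")  # served from the cache
print(CALLS["count"], generate.cache_info().hits)
```

Exact-match caching only helps when requests repeat verbatim and deterministic output is acceptable; prefix-level caching covers the more common case of shared system prompts.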

Integration Capabilities

Latitude demonstrates how frameworks can connect with existing systems using standard APIs and protocols. Its platform supports real-time processing, adjusts automatically to handle varying request volumes, and efficiently manages resources across different model setups. This integration also includes features for tracking performance in real time.

Performance Monitoring

To keep latency low, performance monitoring is essential. Frameworks keep an eye on key metrics like token generation speed, memory usage, and request queue times. These insights help maintain smooth and reliable system operations.
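A small aggregator shows how such metrics might be summarized. The sketch below is illustrative only: `RequestMetrics`, its field names, and the sample values are assumptions, and the percentile uses the simple nearest-rank definition.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class RequestMetrics:
    queue_s: float       # time spent waiting in the request queue
    ttft_s: float        # time to first token, measured from request arrival
    total_s: float       # time until the last token
    output_tokens: int

    @property
    def tokens_per_second(self) -> float:
        """Generation rate after the first token has arrived."""
        decode_s = self.total_s - self.ttft_s
        return self.output_tokens / decode_s if decode_s > 0 else 0.0


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical measurements for four requests.
requests = [
    RequestMetrics(0.01, 0.20, 2.20, 100),
    RequestMetrics(0.02, 0.25, 2.25, 120),
    RequestMetrics(0.01, 0.30, 1.30, 40),
    RequestMetrics(0.40, 0.90, 3.40, 100),
]
ttfts = [r.ttft_s for r in requests]
print(f"TTFT p50={percentile(ttfts, 50):.2f}s p95={percentile(ttfts, 95):.2f}s")
```

Tail percentiles such as p95 tend to be more informative than averages here, because a single slow request (the fourth one, with a long queue wait) can hide behind a healthy mean.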

Resource Management

Effective resource management goes beyond just memory. It keeps track of overall system usage, including CPU and GPU workloads, ensuring all components perform efficiently while delivering consistent response times.

4. Latitude Platform Features

Latitude combines cutting-edge hardware and software techniques to take LLM streaming to the next level. It does this through focused prompt engineering and smooth integration into existing systems.

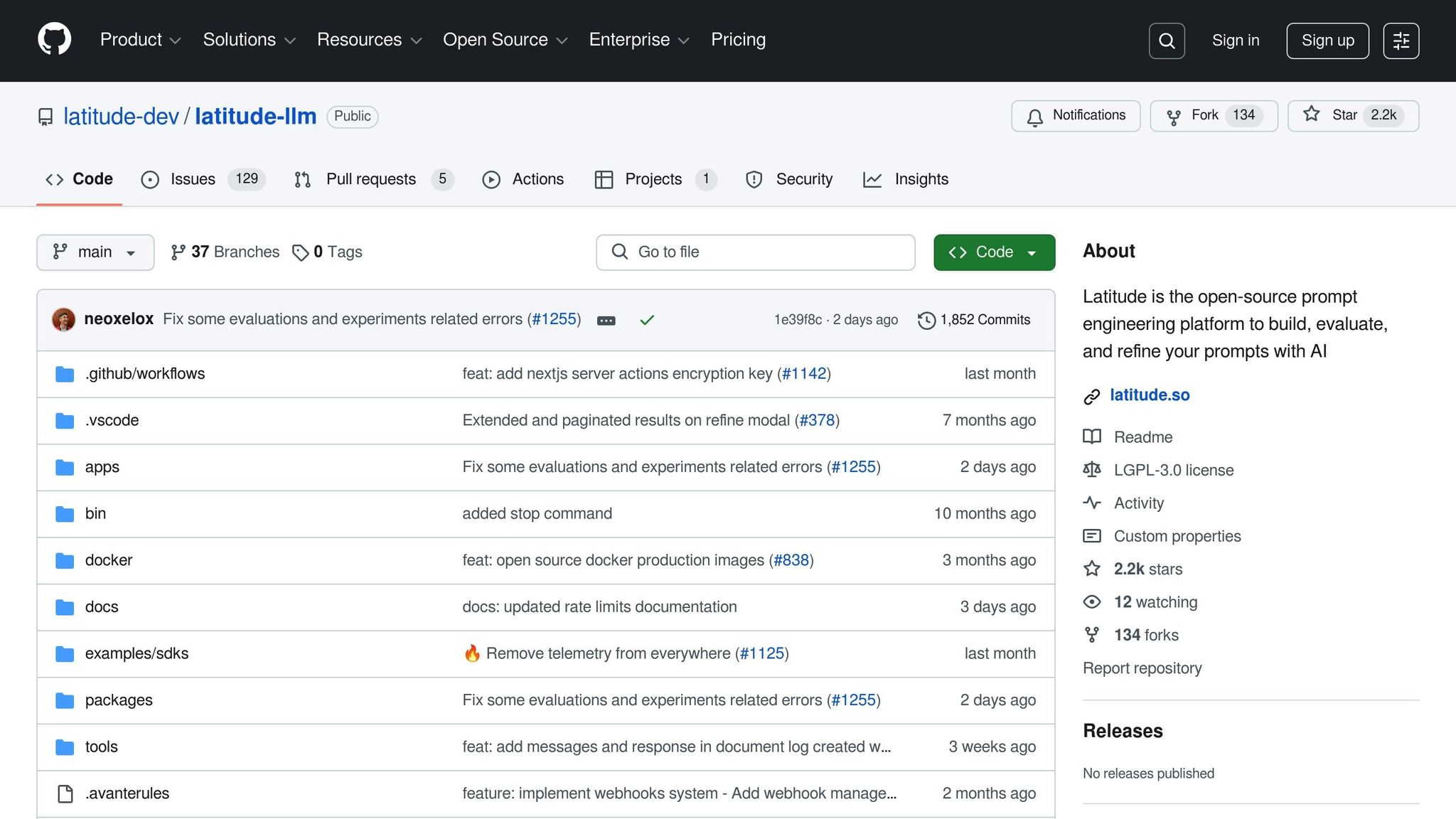

Latitude serves as an open-source platform for prompt engineering, bringing together domain experts and engineers to create production-ready LLM features.

Collaborative Development

One of Latitude’s standout strengths is how it encourages collaboration between experts and engineers. This teamwork helps refine prompt engineering and ensures LLM features meet real-world production needs.

Community Resources

Latitude thrives on community involvement. Its GitHub repository and Slack channels are buzzing with activity, offering detailed documentation and a shared space for best practices contributed by users.

Easy Workflow Integration

Latitude is designed to fit right into existing workflows. This makes it simple to deploy and scale LLM features that are ready for production.

Performance Comparison

Comparing hardware acceleration options and specialized inference devices side by side shows the trade-offs between raw speed, integration effort, and operational efficiency.

Real-world benchmarks indicate that hardware optimized for inference significantly outpaces traditional setups, often by a wide margin.

Hardware Performance Metrics

Take a closer look at some notable hardware performance figures:

AWS Inf2 instances and Groq chips: These deliver between 100–300 tokens per second on models like Llama 70B, offering a 2–10x improvement over standard configurations.

GPT-4o: Included as a reference point for a hosted model rather than a hardware choice, it maintains a steady 80–90 tokens per second in production environments, providing reliable performance.

H100-80GB GPUs: Compared to their predecessor, the A100-40GB, these GPUs cut latency by up to 36% for single-batch processes and by 52% for batch sizes of 16.

Here’s how these metrics compare:

| Optimization Approach | Token Throughput | Latency Reduction | Power Efficiency |
|---|---|---|---|
| AWS Inf2/Groq | 100–300 tokens/s | 2–10× throughput improvement | High |
| GPT-4o (hosted model) | 80–90 tokens/s | Baseline | Moderate |
| H100-80GB (Batch 1) | Configuration-dependent | 36% reduction vs. A100-40GB | Moderate-High |
| H100-80GB (Batch 16) | Configuration-dependent | 52% reduction vs. A100-40GB | High |

Scaling and Parallelization Impact

Scaling through tensor parallelism further enhances performance. For example, increasing tensor parallelism from 2x to 4x reduces token latency by 12% in single-batch operations and by 33% for batch sizes of 16. This demonstrates how scaling strategies can significantly improve throughput and efficiency.
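The core idea of tensor parallelism can be sketched in plain Python: split a layer's weight matrix by columns, let each shard compute its slice of the output, and join the slices. This is a conceptual sketch only. The "devices" here run sequentially in one process, so it shows that the split preserves the result, not the latency gain, and the function names are made up for illustration.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix multiply: (rows x k) times (k x cols)."""
    cols = list(zip(*w))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in x]


def split_columns(w: Matrix, shards: int) -> List[Matrix]:
    """Split weight columns evenly, one slice per (simulated) device."""
    assert len(w[0]) % shards == 0, "column count must divide evenly"
    width = len(w[0]) // shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(shards)]


def tensor_parallel_matmul(x: Matrix, w: Matrix, shards: int) -> Matrix:
    # Each simulated device multiplies the full input by its weight slice.
    partials = [matmul(x, shard) for shard in split_columns(w, shards)]
    # Joining the partial outputs column-wise stands in for an all-gather.
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


x = [[1.0, 2.0]]
w = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
print(matmul(x, w))
print(tensor_parallel_matmul(x, w, shards=2))
print(tensor_parallel_matmul(x, w, shards=4))
```

All three calls produce the same output. On real hardware each shard runs on a separate device, so per-device work shrinks as the degree of parallelism grows while communication cost rises, which is one plausible reason the benefit depends on batch size.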

Integration Complexity Trade-offs

While purpose-built inference hardware offers impressive speed and efficiency, it often comes with added complexity. This includes tasks like installing specialized drivers, configuring custom libraries, recompiling or converting models for the target chip, and making hardware-specific optimizations. These challenges highlight the trade-offs between achieving peak performance and managing integration hurdles.

Power Usage Considerations

Inference-optimized hardware doesn’t just excel in speed; it’s also more energy-efficient compared to traditional GPU setups. This efficiency translates into several benefits: reduced operational costs, lower heat output, and better performance per watt, all of which contribute to more sustainable operations.

Summary and Recommendations

Building on earlier insights into hardware and frameworks, here’s a breakdown of best practices to help reduce latency effectively:

High-Priority Optimizations

Start with these immediate actions:

Response Streaming Implementation: Streaming allows users to see responses as they’re generated, creating the impression of faster performance without requiring hardware upgrades.

Smart Context Management: Refine context usage by including only essential conversation history and setting clear response limits to optimize processing.

Hardware Selection Based on Workload: Choose hardware tailored to your specific needs. Here’s a quick guide:

| Workload Type | Recommended Option | Best For |
|---|---|---|
| High-volume Production | AWS Inf2 / Groq chips | Enterprise applications |
| Standard Production | GPT-4o (hosted model) | Mid-size deployments |
| Variable Workloads | H100-80GB GPU | Scalable operations |

These steps provide a strong foundation for more advanced techniques.

Advanced Optimization Strategy

To maximize performance, consider these advanced methods:

Hardware Acceleration: Opt for inference-optimized solutions that can deliver 2–10x performance gains compared to standard setups.

Parallelization and Scaling: Use tensor parallelism wisely. For example, increasing parallelism from 2x to 4x can cut token latency by 33% for a batch size of 16.

Monitoring and Optimization: Track metrics like time to first token (TTFT), token generation rate, request latency, and user engagement. This data helps identify areas for further refinement.

Use Case-Specific Recommendations

For customer-facing applications, focus on streaming responses and smart context management to enhance user experience with faster perceived response times.

For high-volume processing, prioritize hardware like AWS Inf2 instances or Groq chips, which can handle large models (e.g., Llama 70B) and achieve speeds of 100–300 tokens per second.

For development environments, rely on flexible, cloud-based solutions with auto-scaling to handle varying workloads efficiently.

Adjust these strategies to match your operational goals while balancing cost and performance.

Balancing Cost and Performance

Follow this step-by-step approach to optimize both cost and efficiency:

Start with no-cost improvements, such as better prompt engineering and context management.

Introduce streaming responses to deliver immediate user experience enhancements.

Invest in hardware acceleration when processing volumes justify the expense.

Scale tensor parallelism based on workload demands to achieve further gains.
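To judge when volume justifies a hardware upgrade, it can help to convert an hourly price and a sustained throughput into a cost per million tokens. The sketch below shows the arithmetic only. The hourly prices are invented placeholders, not quotes from any provider, and the throughput figures are single-stream values that ignore batching.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Cost to generate one million tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical prices, chosen only to illustrate the comparison.
options = {
    "standard setup (90 tokens/s)": (4.00, 90),
    "accelerated setup (300 tokens/s)": (12.00, 300),
}
for name, (hourly_usd, tps) in options.items():
    print(f"{name}: ${cost_per_million_tokens(hourly_usd, tps):.2f} per million tokens")
```

With these placeholder numbers the faster option costs three times as much per hour yet is slightly cheaper per token, but only if it is kept busy. At low utilization the cheaper hourly rate wins.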

FAQs

How does hardware acceleration help reduce latency in LLM streaming?

Hardware acceleration reduces latency in LLM streaming by using specialized components like GPUs, TPUs, and custom AI accelerators, which handle demanding computations far more efficiently than standard CPUs. These components are built for the heavy matrix math that large-scale model operations require.

By shifting tasks such as matrix calculations and parallel processing to these advanced hardware solutions, systems can process data much faster. This means results are generated and streamed with less delay, delivering smoother, more responsive performance - especially critical for real-time applications.

How do token compression and smart context management help reduce latency in interactive applications?

Token compression and smart context management are two powerful methods to speed up response times in interactive applications that use large language models (LLMs).

Token compression works by shrinking the amount of data the LLM needs to process. This means computations happen faster, leading to quicker responses, ideally with little or no loss in accuracy. It’s especially helpful for applications dealing with large inputs or those needing real-time interaction.

On the other hand, smart context management focuses on how information is organized during interactions. By prioritizing the most relevant details and cutting out unnecessary data, this approach reduces processing demands. The result? Faster, more efficient applications that are easier for users to engage with.

Why is tracking metrics like time-to-first-token (TTFT) crucial for optimizing LLM streaming performance?

Tracking metrics like time-to-first-token (TTFT) plays a key role in improving the performance of Large Language Model (LLM) streaming, as it directly influences the user experience. TTFT measures the time it takes for the system to generate the first response token after receiving a request, making it a vital indicator of responsiveness.

Keeping an eye on TTFT helps pinpoint potential slowdowns in your LLM setup. These could arise from hardware limitations, suboptimal software configurations, or delays caused by network latency. With this insight, teams can make specific improvements - like upgrading hardware, refining model configurations, or enhancing prompt engineering techniques - to deliver quicker and more seamless interactions for users.