Explore how JSON Schema enhances data validation and consistency for Large Language Models, streamlining workflows and improving integration.

César Miguelañez

May 5, 2025

JSON Schema helps ensure that data used with Large Language Models (LLMs) is structured, consistent, and validated. It defines clear rules for data formats and types, making it easier to manage inputs, outputs, and API interactions. Here's why JSON Schema matters for LLM workflows:

Control Outputs: Define the structure, data types, and constraints for LLM responses (e.g., string length, numerical ranges, or nested objects).

Validate Data: Catch errors early by enforcing rules for inputs and outputs, ensuring smooth data processing.

Standardize APIs: Maintain consistent communication between systems by standardizing request and response formats.

Example Use Cases:

Output Validation: Ensure LLM responses meet predefined formats (e.g., strings, numbers within a range).

Input Processing: Verify prompts and parameters before sending them to the model.

API Integration: Simplify system communication with clear and consistent data standards.

By using JSON Schema, you can automate validation, detect issues early, and maintain reliable data flow across systems. Whether you're managing simple or complex data structures, JSON Schema is a practical tool for improving data quality and consistency.

Main Advantages of JSON Schema for LLMs

JSON Schema streamlines the way data is handled in large language models (LLMs), offering a reliable and consistent framework for managing outputs. Here's a closer look at its key benefits.

Structured Output Control

With JSON Schema, you can define and enforce the exact structure of LLM outputs. This ensures uniformity across responses and allows for:

Defining specific data types and formats for each field

Applying conditional rules based on your requirements

Setting limits for characters or numerical values

Organizing nested objects for complex data structures

For example, take a look at how JSON Schema can manage structured outputs:
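The sketch below is one illustrative possibility, not a canonical schema. It assumes a hypothetical LLM task that returns a product-review summary; every field name and limit is invented for the example. The schema is written as a Python dict so it can be serialized to JSON as-is.

```python
import json

# Illustrative schema for a hypothetical review summary returned by an LLM.
# All field names and limits are assumptions chosen for this example.
review_schema = {
    "$schema": "https://json-schema.org/draft/2020-12/schema",
    "type": "object",
    "properties": {
        # Data type plus character limits
        "summary": {"type": "string", "minLength": 1, "maxLength": 280},
        # Numerical range
        "rating": {"type": "integer", "minimum": 1, "maximum": 5},
        "sentiment": {"type": "string", "enum": ["positive", "neutral", "negative"]},
        "complaint": {"type": "string"},
        # Nested object
        "author": {
            "type": "object",
            "properties": {
                "name": {"type": "string"},
                "verified": {"type": "boolean"},
            },
            "required": ["name"],
        },
    },
    "required": ["summary", "rating", "sentiment"],
    # Conditional rule: a negative review must also carry a complaint
    "if": {
        "properties": {"sentiment": {"const": "negative"}},
        "required": ["sentiment"],
    },
    "then": {"required": ["complaint"]},
    "additionalProperties": False,
}

print(json.dumps(review_schema, indent=2))
```

Each comment maps to one of the controls listed above: types and formats, a conditional rule, length and range limits, and a nested object.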

This level of control ensures your data remains consistent, predictable, and easy to process.

Data Validation Systems

JSON Schema plays a crucial role in maintaining data quality. It enables:

Early detection of improperly formatted data

Smoother error handling processes

Automated quality checks, especially useful for high-volume tasks

Consistent data flow across different systems

By catching issues early, JSON Schema helps ensure that data integrates smoothly across all platforms.

System Integration Standards

Using JSON Schema simplifies how system components communicate by standardizing data exchange. This leads to:

Consistent interfaces between LLMs and external services

Clear and detailed API documentation

Faster development cycles

Easier updates and upgrades to your system

When multiple systems interact with LLM outputs, standardization ensures that every component receives data in a usable format. This is especially critical in production environments where seamless communication is key.

| Integration Aspect | Without Schema | With Schema |
|---|---|---|
| Data Validation | Manual checks needed | Automated validation |
| Error Detection | Occurs at runtime | Caught during development |
| Documentation | Often incomplete | Self-documenting |
| API Consistency | Varies | Guaranteed |
| Integration Time | Longer | Shorter |

Setting Up JSON Schema for LLMs

Creating Your First Schema

Here’s an example of a basic JSON Schema setup:
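A minimal sketch of such a schema follows. The field names (`text`, `metadata`, `timestamp`, `confidence`) and the specific limits are assumptions made for illustration; substitute the ones your application needs.

```python
import json

# Hypothetical field names and limits; adjust them to your own output format.
llm_output_schema = {
    "$schema": "https://json-schema.org/draft/2020-12/schema",
    "title": "LLMOutput",
    "type": "object",
    "properties": {
        # Length-limited response text
        "text": {"type": "string", "minLength": 1, "maxLength": 2000},
        "metadata": {
            "type": "object",
            "properties": {
                "timestamp": {"type": "string", "format": "date-time"},
                # Confidence score constrained to the range 0..1
                "confidence": {"type": "number", "minimum": 0, "maximum": 1},
            },
            "required": ["timestamp", "confidence"],
        },
    },
    "required": ["text", "metadata"],
}

# Schemas are plain JSON, so they can be stored in a file and shared across services.
schema_json = json.dumps(llm_output_schema, indent=2)
print(schema_json)
```

Note that many validators treat `format` as an annotation only unless format checking is explicitly enabled, so the `date-time` rule may need extra configuration to be enforced.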

This schema sets clear rules for validating LLM outputs. It ensures that the text field adheres to length limits and that the metadata section includes both a timestamp and a confidence score within specified ranges.

Output Validation Process

| Component | Purpose | Implementation |
|---|---|---|
| Schema Parser | Loads and interprets the JSON Schema | Relies on standard JSON Schema tools |
| Validator | Verifies LLM output against the schema | Runs checks before storage or use |
| Error Handler | Handles validation failures effectively | Produces error reports as needed |

These components operate in real time, ensuring that data meets validation standards before it’s stored or transmitted. This process is key to maintaining reliable data flow in applications.
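The three components in the table can be sketched with the standard library alone. This is a deliberately small, hand-rolled checker that understands only a few keywords (`type`, `required`, `properties`, `minLength`, `maxLength`, `minimum`, `maximum`); the function names are hypothetical, and a production system would more likely rely on an established validation library such as the `jsonschema` package.

```python
import json

def parse_schema(schema_text):
    """Schema Parser: load a JSON Schema document from text."""
    return json.loads(schema_text)

def validate(instance, schema, path="$"):
    """Validator: return a list of error strings (an empty list means valid)."""
    errors = []
    expected = schema.get("type")
    if expected == "object":
        if not isinstance(instance, dict):
            return [f"{path}: expected object"]
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}: missing required field '{name}'")
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(validate(instance[name], subschema, f"{path}.{name}"))
    elif expected == "string":
        if not isinstance(instance, str):
            return [f"{path}: expected string"]
        if len(instance) < schema.get("minLength", 0):
            errors.append(f"{path}: shorter than minLength")
        if "maxLength" in schema and len(instance) > schema["maxLength"]:
            errors.append(f"{path}: longer than maxLength")
    elif expected == "number":
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(instance, bool) or not isinstance(instance, (int, float)):
            return [f"{path}: expected number"]
        if "minimum" in schema and instance < schema["minimum"]:
            errors.append(f"{path}: below minimum")
        if "maximum" in schema and instance > schema["maximum"]:
            errors.append(f"{path}: above maximum")
    return errors

def handle_errors(errors):
    """Error Handler: turn validation failures into a structured report."""
    return {"valid": not errors, "error_count": len(errors), "errors": errors}

SCHEMA_TEXT = """
{
  "type": "object",
  "properties": {
    "text": {"type": "string", "minLength": 1, "maxLength": 50},
    "confidence": {"type": "number", "minimum": 0, "maximum": 1}
  },
  "required": ["text", "confidence"]
}
"""

schema = parse_schema(SCHEMA_TEXT)
good = handle_errors(validate({"text": "ok", "confidence": 0.9}, schema))
bad = handle_errors(validate({"text": "", "confidence": 1.5}, schema))
print(good)
print(bad)
```

Returning a list of errors rather than raising on the first failure lets the error handler report every problem in a single pass.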

Using Validated Data

Once validation confirms the data meets the schema’s requirements, it can be integrated into your application with confidence.

Data Integration

Store validated outputs with proper indexing for easy retrieval.

API Implementation

Design endpoints that both accept and return data conforming to the schema.

Error Recovery

Add fallback strategies to handle cases where validation fails, ensuring smooth user experiences.

If you’re using Latitude’s platform, its built-in tools simplify schema validation. The platform takes care of schema versioning and offers real-time feedback during development, making it easier to catch and resolve issues early.

JSON Schema Implementation Tips

When working with JSON Schema, you can maintain data quality and enhance validation processes by following these practical tips.

Moving from Basic to Advanced Schema

Start with a simple schema to validate key outputs like text and score. Gradually add more detailed rules as your needs grow.

This approach allows for a step-by-step improvement in validation, ensuring your schema evolves to handle more complex use cases.
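As a rough illustration of this progression, the sketch below starts from a basic schema covering `text` and `score` and derives a stricter one from it. The added constraints and the `tags` field are assumptions chosen for the example.

```python
import copy

# Step 1: a basic schema that only checks the two key outputs.
basic_schema = {
    "type": "object",
    "properties": {
        "text": {"type": "string"},
        "score": {"type": "number"},
    },
    "required": ["text", "score"],
}

# Step 2: derive a stricter schema without mutating the basic one.
advanced_schema = copy.deepcopy(basic_schema)
advanced_schema["properties"]["text"].update({"minLength": 1, "maxLength": 1000})
advanced_schema["properties"]["score"].update({"minimum": 0, "maximum": 1})

# Step 3: add a new optional field with its own rules (hypothetical).
advanced_schema["properties"]["tags"] = {
    "type": "array",
    "items": {"type": "string"},
    "maxItems": 5,
    "uniqueItems": True,
}

# Step 4: reject fields the schema does not know about.
advanced_schema["additionalProperties"] = False
```

Using a deep copy keeps the basic schema available for consumers that have not yet moved to the stricter rules.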

Managing Schema Versions

Track schema versions with semantic versioning, and include a version identifier in your schema metadata for clarity. Increment each component as follows:

| Version Component | When to Increment | Example Change |
|---|---|---|
| Major (X.0.0) | For breaking changes | Adding required fields |
| Minor (0.X.0) | For new features | Adding optional fields |
| Patch (0.0.X) | For fixes | Updating patterns or constraints |

By following this system, you ensure your schema evolves in a predictable and organized way.
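The versioning scheme above can be sketched as follows. JSON Schema has no standard `version` keyword, so the `version` property here is a custom annotation assumed for this example, as are the `example.com` identifier and the helper names. The compatibility rule (same major version) is a common convention, not something the schema specification mandates.

```python
# The "version" key is a custom, non-standard annotation (an assumption).
schema = {
    "$id": "https://example.com/schemas/llm-output",
    "version": "1.2.0",
    "type": "object",
    "properties": {"text": {"type": "string"}},
    "required": ["text"],
}

def parse_version(version):
    """Split 'MAJOR.MINOR.PATCH' into a tuple of ints."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch

def bump(version, change):
    """Return the next version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = parse_version(version)
    if change == "major":   # breaking change, e.g. adding a required field
        return f"{major + 1}.0.0"
    if change == "minor":   # new feature, e.g. adding an optional field
        return f"{major}.{minor + 1}.0"
    if change == "patch":   # fix, e.g. updating a pattern or constraint
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")

def is_compatible(expected_version, actual_version):
    """Treat two schema versions as compatible when their major parts match."""
    return parse_version(expected_version)[0] == parse_version(actual_version)[0]

print(bump(schema["version"], "minor"))
print(is_compatible("1.0.0", schema["version"]))
```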

Tools for Schema Validation

Several tools are available to simplify schema validation, each suited for different programming environments:

| Tool | Primary Use Case | Integration Method |
|---|---|---|
| | TypeScript validation | Runtime type checking |
| | Python data parsing | Model-based validation |
| | High-performance JavaScript | Schema compilation |

To minimize runtime overhead, validate data at key stages such as input, response generation, output formatting, and storage. Pre-compile schemas during initialization and cache validation results for better performance.
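One way to picture pre-compilation and result caching is the sketch below. It is not tied to any particular library: `compile_schema` is a hypothetical helper that walks a flat object schema once and returns a validator function, and `validate_cached` memoizes results by raw JSON text. Only `required` and `maxLength` are handled.

```python
import json
from functools import lru_cache

SCHEMA = {
    "type": "object",
    "properties": {"text": {"type": "string", "maxLength": 20}},
    "required": ["text"],
}

def require(name):
    """Build a check that fails when a required field is missing."""
    return lambda data: None if name in data else f"missing '{name}'"

def max_length(name, limit):
    """Build a check that fails when a string field exceeds its limit."""
    def check(data):
        value = data.get(name)
        if isinstance(value, str) and len(value) > limit:
            return f"'{name}' exceeds {limit} characters"
        return None
    return check

def compile_schema(schema):
    """Walk the schema once and return a reusable validator function."""
    checks = [require(name) for name in schema.get("required", [])]
    for name, rule in schema.get("properties", {}).items():
        if "maxLength" in rule:
            checks.append(max_length(name, rule["maxLength"]))

    def validator(data):
        if not isinstance(data, dict):
            return ["expected an object"]
        return [msg for msg in (check(data) for check in checks) if msg]
    return validator

# Compiled once at import time, then reused for every request.
VALIDATOR = compile_schema(SCHEMA)

@lru_cache(maxsize=1024)
def validate_cached(payload_json):
    """Cache results by raw JSON text so identical payloads are checked once."""
    return tuple(VALIDATOR(json.loads(payload_json)))

print(validate_cached('{"text": "short"}'))
print(validate_cached('{"text": "short"}'))  # served from the cache
print(validate_cached('{}'))
```

Result caching only pays off when identical payloads recur; for unique LLM responses, the one-time compilation step is where most of the saving comes from.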

For high-throughput scenarios, implement fallback strategies to handle validation errors gracefully:
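A minimal sketch of one such strategy: retry generation a bounded number of times, then fall back to a default value. The `generate` callable stands in for a model call and `is_valid` for a real schema validator; both are hypothetical placeholders.

```python
import json

def is_valid(data):
    """Stand-in validator: an object with a non-empty string 'text' field."""
    return (
        isinstance(data, dict)
        and isinstance(data.get("text"), str)
        and data["text"] != ""
    )

def generate_with_fallback(generate, max_attempts=3, default=None):
    """Call generate() until its output parses and validates, else use a default."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        raw = generate()
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"attempt {attempt}: invalid JSON ({exc.msg})"
            continue
        if is_valid(data):
            return {"data": data, "attempts": attempt, "fallback": False}
        last_error = f"attempt {attempt}: schema validation failed"
    # Every attempt failed: return the default and keep the last error for logging.
    return {
        "data": default,
        "attempts": max_attempts,
        "fallback": True,
        "error": last_error,
    }

# Demo with a fake model that fails twice before producing valid output.
responses = iter(["not json", '{"text": ""}', '{"text": "hello"}'])
result = generate_with_fallback(lambda: next(responses))
print(result)
```

Capping the number of attempts keeps latency bounded, and recording the last error gives monitoring something concrete to report when the fallback is used.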

These practices help maintain efficient, reliable schema validation across your application.

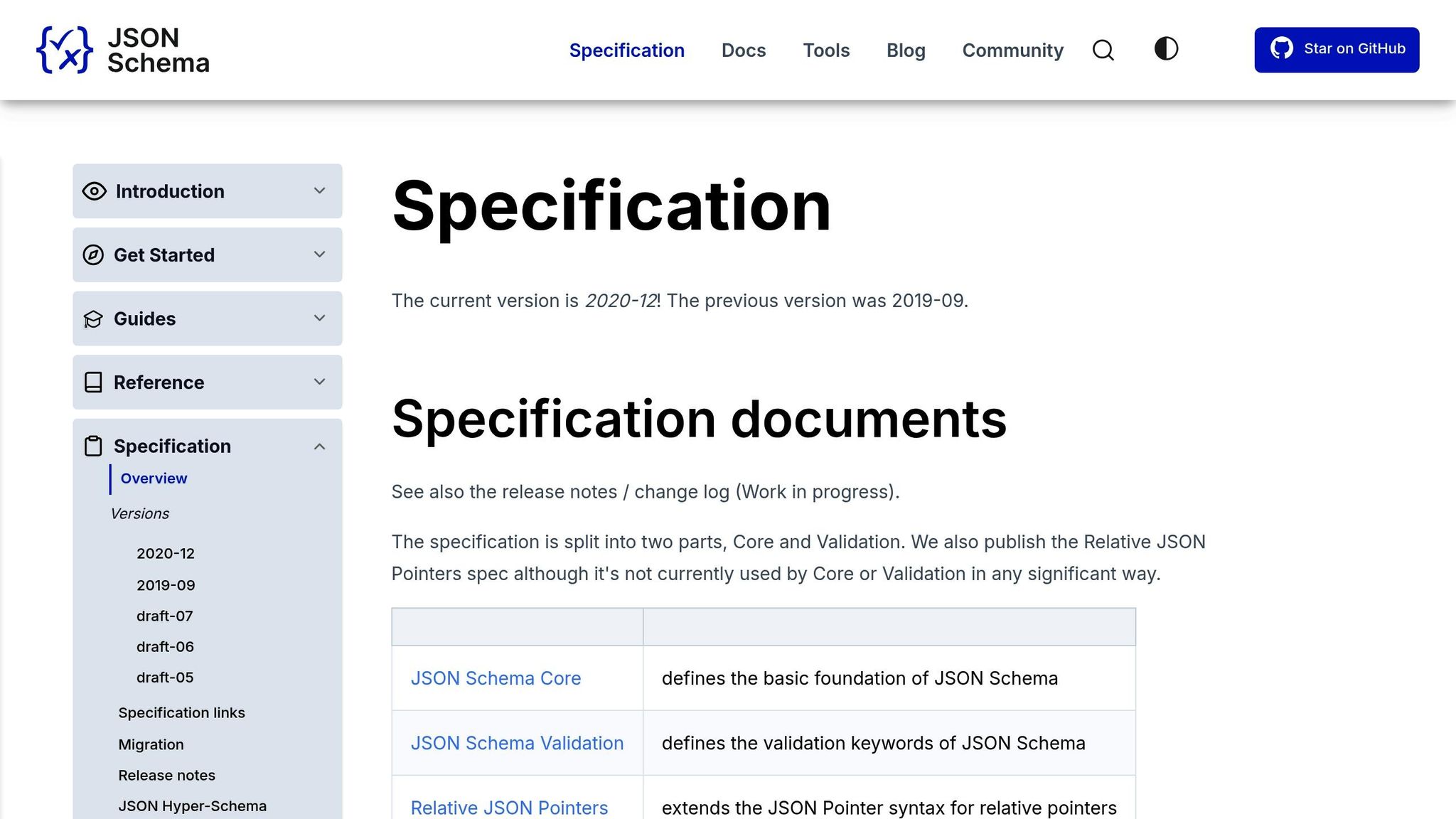

JSON Schema Features in Latitude

Latitude takes JSON Schema implementation to the next level by combining validation tools with collaboration and resource-sharing capabilities. Its open-source foundation enables teams to easily define and manage JSON schemas for LLM data validation.

Team Collaboration Features

Latitude provides shared workspaces and centralized communication tools that make teamwork smoother. These features are designed to work seamlessly with Latitude's development tools, ensuring teams stay connected and productive.

Development Resources

Latitude equips users with a variety of resources to simplify JSON Schema implementation, including:

Detailed guides on schema development

Ready-to-use examples and templates for integrating JSON Schema into production workflows

These tools and resources ensure high-quality data validation for LLM workflows.

Conclusion

Key Benefits Summary

JSON Schema offers a way to standardize and validate data for large language models (LLMs). By enforcing structured validation, it ensures consistent outputs, minimizing errors and inconsistencies in production systems. This approach simplifies reliable data management for teams working with LLMs.

Here’s what makes it stand out:

Automated validation ensures data consistency.

Standardized formats make integration smoother.

Early error detection catches issues before they escalate.

Next Steps with Latitude

Now that the advantages of JSON Schema are clear, Latitude provides tools to help you incorporate these practices into your workflow. Getting started is simple and involves three main steps:

Access Resources

Explore Latitude's documentation and examples to simplify JSON Schema implementation.

Use Team Features

Collaborate on schema development in Latitude's shared workspace.

Connect and Learn

Engage with Latitude's GitHub and Slack communities for support and strategy discussions.

FAQs

How does JSON Schema help standardize and optimize data for large language models (LLMs)?

JSON Schema helps ensure reliable and consistent data for large language models (LLMs) by defining clear, standardized data formats. Validating against a schema confirms that each payload has the structure the surrounding application expects, which reduces errors in downstream processing.

By using JSON Schema, developers can validate input data, enforce specific rules, and maintain uniformity across datasets. This is especially important when collaborating on production-grade LLM features, as it streamlines workflows and ensures compatibility across different systems.

How can I upgrade from a basic to an advanced JSON Schema for validating LLM data?

To move from a basic to an advanced JSON Schema for LLM data validation, start by identifying the specific requirements of your data. Advanced schemas often include stricter validation rules, nested structures, and custom formats tailored to your application's needs.

Here are some practical steps:

Expand validation rules: Add constraints such as required fields, data types, and value ranges to ensure data consistency.

Utilize nested schemas: Break down complex data structures into reusable components, making your schema modular and easier to maintain.

Incorporate custom formats: Define custom validation rules for domain-specific data, such as timestamps, currency values, or unique identifiers.

By refining your JSON Schema, you can standardize LLM data formats and improve compatibility across systems. This approach ensures your data is robust, reliable, and ready for production-grade applications.
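The reusable-component and custom-format steps above can be sketched as follows. The schema uses the standard `$defs` and `$ref` keywords; the definition names (`timestamp`, `money`) and the helpers `resolve_ref` and `is_iso_timestamp` are hypothetical. The timestamp check uses Python's ISO 8601 parser, which only approximates the `date-time` format.

```python
from datetime import datetime

schema = {
    # Reusable components live under $defs and are referenced with $ref.
    "$defs": {
        "timestamp": {"type": "string", "format": "date-time"},
        "money": {
            "type": "object",
            "properties": {
                "amount": {"type": "number", "minimum": 0},
                "currency": {"type": "string", "pattern": "^[A-Z]{3}$"},
            },
            "required": ["amount", "currency"],
        },
    },
    "type": "object",
    "properties": {
        "created_at": {"$ref": "#/$defs/timestamp"},
        "price": {"$ref": "#/$defs/money"},
        "refund": {"$ref": "#/$defs/money"},
    },
}

def resolve_ref(root, ref):
    """Resolve a local reference such as '#/$defs/money' against the root schema."""
    if not ref.startswith("#/"):
        raise ValueError("only local references are supported in this sketch")
    node = root
    for part in ref[2:].split("/"):
        # Undo JSON Pointer escaping (~1 means '/', ~0 means '~').
        node = node[part.replace("~1", "/").replace("~0", "~")]
    return node

def is_iso_timestamp(value):
    """Custom format check: True when value parses as an ISO 8601 timestamp."""
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False

money = resolve_ref(schema, schema["properties"]["price"]["$ref"])
print(money["required"])
print(is_iso_timestamp("2025-05-05T12:00:00+00:00"))
```

Because `price` and `refund` point at the same definition, tightening the `money` rules in one place updates both fields.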

How can I manage different versions of JSON Schema to ensure smooth integration with LLM data?

To effectively manage different versions of JSON Schema for seamless integration with LLM data, it's essential to adopt a versioning strategy. Clearly define version numbers in your schema files, and ensure backward compatibility whenever possible. This helps maintain consistency and prevents breaking changes when updating schemas.

Additionally, consider using tools or platforms that support schema validation and version control. These can automate compatibility checks and make it easier to collaborate on schema updates. Proper documentation of each version is also crucial for ensuring all stakeholders understand the changes and their impact on LLM data handling.