Explore effective A/B testing strategies for Large Language Models to optimize performance, enhance user experience, and address unique challenges.

César Miguelañez

Feb 7, 2025

A/B testing is essential for improving Large Language Models (LLMs). It helps compare model versions, refine user experience, and optimize performance using real-world data. However, testing LLMs comes with unique challenges like inconsistent outputs, subjective feedback, and ethical concerns.

Key Takeaways:

Why A/B Testing Matters: Boosts performance, uncovers bugs, and improves user satisfaction.

Challenges: Handling unpredictable outputs, analyzing subjective user feedback, and maintaining fairness and privacy.

How to Test: Define clear goals, select metrics, and set up robust infrastructure (e.g., Kubernetes for scaling, Prometheus for monitoring).

Analyze Results: Use statistical methods like t-tests and ANOVA, and combine quantitative metrics (e.g., F1 score) with qualitative feedback.

Avoid Mistakes: Ensure adequate sample sizes, control variables, and avoid premature changes.

Quick Comparison Table:

| Aspect | Key Tools/Methods | Metrics |
|---|---|---|
| Setup | Kubernetes, Git, PostgreSQL | System reliability, data accuracy |
| Performance Tracking | Prometheus, Statistical Tests | Response time, accuracy |
| User Feedback | Surveys, Logs | Satisfaction, engagement |

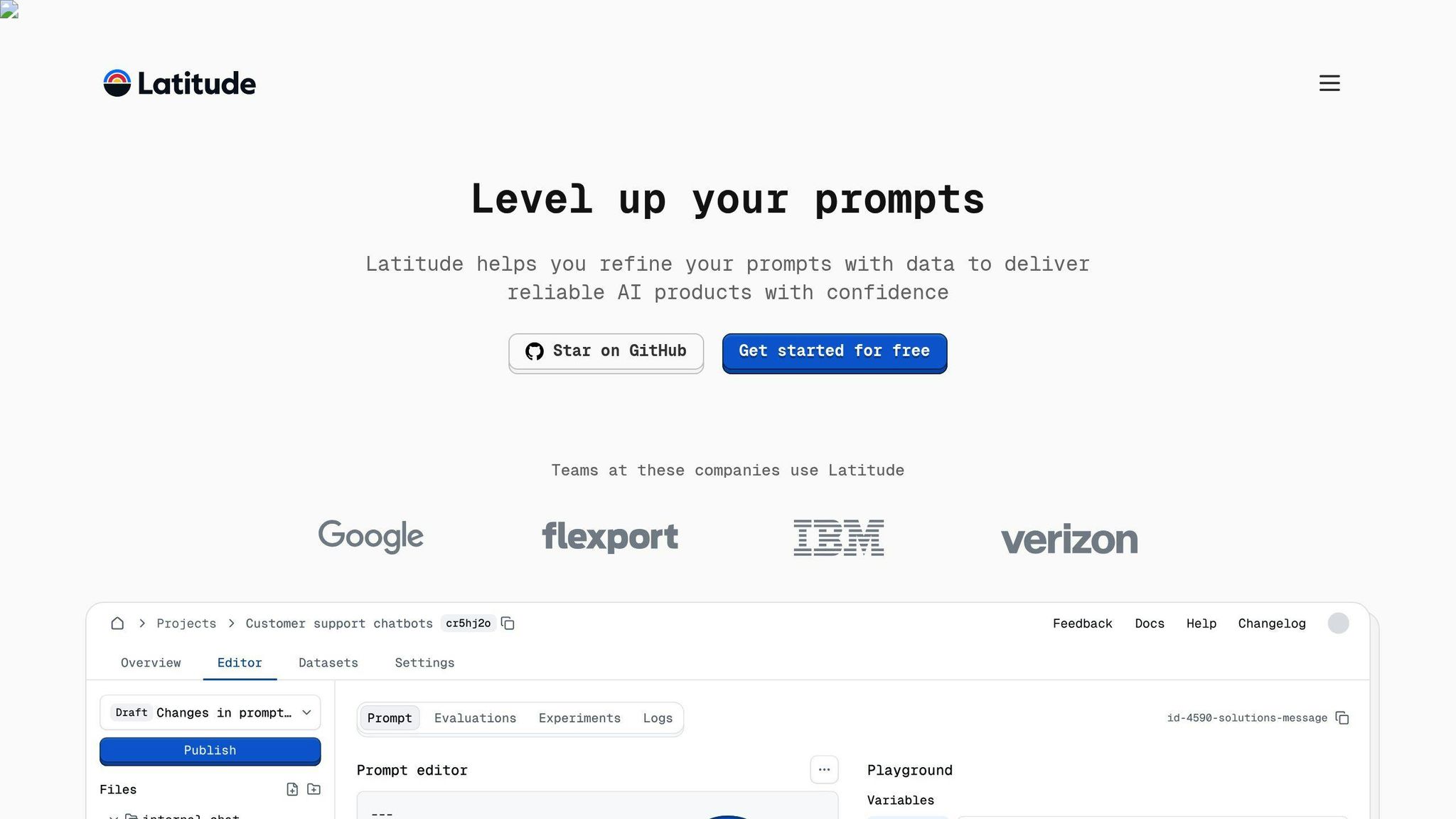

By following structured testing processes and leveraging tools like Latitude, you can ensure your LLM delivers consistent, high-quality performance while addressing challenges effectively.

Test Planning for LLMs

Careful planning is key to getting reliable and actionable A/B testing results for large language models (LLMs). Here's a breakdown of the essential components to help you prepare effectively.

Setting Goals and Metrics

Start by defining clear, measurable objectives for your LLM testing. These should align with both your business priorities and technical needs. Pair each goal with specific metrics. For instance, you might aim for a 10% boost in F1 scores compared to your current model.

| Metric Type | Example Metrics | Measurement Method |
|---|---|---|
| Technical Performance | F1 Score, Response Time | Automated evaluation |
| User Experience | Satisfaction Rating | User feedback, System logs |
| Business Impact | User Retention | Analytics tracking |
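To make a goal such as "a 10% boost in F1" checkable, it helps to write down exactly how the metric and the target are computed. The sketch below assumes the 10% is a relative gain over the baseline, and the confusion-matrix counts are hypothetical values chosen only for illustration.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 is the harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def meets_target(baseline: float, candidate: float, relative_gain: float = 0.10) -> bool:
    """True if the candidate beats the baseline by at least `relative_gain`.

    Assumption: the target is relative (baseline * 1.10), not +0.10 absolute.
    """
    return candidate >= baseline * (1 + relative_gain)


# Hypothetical counts for the current model and the candidate.
baseline_f1 = f1_score(tp=70, fp=30, fn=30)
candidate_f1 = f1_score(tp=80, fp=20, fn=20)
print(round(baseline_f1, 2), round(candidate_f1, 2), meets_target(baseline_f1, candidate_f1))
```

Deciding up front whether the target is relative or absolute avoids arguments about whether a test "won" once the results are in.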

Selecting Test Parameters

With your goals and metrics in place, focus on identifying the variables that directly affect these outcomes. Key parameters include:

Model versions trained on different datasets

Variations in prompt engineering

Settings like temperature and other generation controls

Context window sizes

Formats for generated responses

Platforms like Latitude can help teams of engineers and domain experts collaborate, manage version control, and systematically test these parameters.

Test Size and Duration

Use power analysis tools to determine the ideal sample size and test duration to yield meaningful insights. Take into account:

The expected effect size

Your desired confidence level

Available resources

Patterns in user traffic

Be sure your tests reflect real-world usage patterns, account for external factors, and allow for model stabilization.

Pro tip: Calculate the minimum sample size before the test starts, so the test has enough statistical power to detect the expected effect while keeping resource use efficient.
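As a rough illustration of such a power analysis, the sketch below uses the standard normal-approximation formula for comparing two group means, n = 2 * ((z_alpha + z_power) / d)^2 per group, where d is the standardized effect size. The function names, the default alpha and power, and the daily traffic figure are assumptions for this example, not values from a specific tool.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant for a two-sided test on means.

    `effect_size` is the standardized difference (Cohen's d) you expect to detect.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = z.inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


def days_needed(n_per_group: int, daily_users: int) -> int:
    """Days required to fill both groups, assuming a 50/50 split of eligible traffic."""
    return math.ceil(2 * n_per_group / daily_users)


n = sample_size_per_group(effect_size=0.2)
print(n, days_needed(n, daily_users=200))  # daily_users is a hypothetical traffic level
```

Smaller expected effects require much larger samples, which is why the expected effect size is usually the first input to pin down.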

Document everything - test parameters, success criteria, monitoring plans, and backup strategies for unexpected issues. A detailed plan ensures smoother execution and lays the groundwork for successful test implementation.

Running LLM A/B Tests

Setting up a solid testing system is crucial for running A/B tests that provide useful insights into how your LLM performs. Here's how to build and maintain a testing process that works.

Test Infrastructure Setup

A good testing infrastructure relies on a few key components:

| Component | Purpose | Key Tool |
|---|---|---|
| Data Management | Organize user interactions and model outputs | PostgreSQL |
| Performance Monitoring | Track metrics in real time | Prometheus |
| Version Control | Keep track of model versions | Git |
| Container Orchestration | Scale and manage test environments | Kubernetes |

Kubernetes is excellent for scaling and managing container-based LLM tests.

Once your infrastructure is ready, the next step is ensuring traffic is distributed fairly and consistently for unbiased results.

User Traffic Distribution

Deterministic hashing on a stable user ID assigns each user to the same variant on every request, which keeps traffic distribution fair and testing conditions stable. Tools like HAProxy are great for managing traffic with precise routing and load balancing. Start with a simple 50/50 traffic split between your control and test groups to establish a baseline.
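A minimal sketch of deterministic assignment, assuming each user has a stable string ID. The function name, the experiment label, and the variant names are hypothetical. Hashing the experiment name together with the user ID means a user's bucket in one experiment does not determine their bucket in another.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Map a user to 'control' or 'treatment' so the same user always gets the same variant."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "treatment" if bucket < treatment_share else "control"


# The assignment is stable across calls and processes.
print(assign_variant("user-123", "prompt-v2-test"))
print(assign_variant("user-123", "prompt-v2-test"))
```

Because the assignment depends only on the inputs, no lookup table is needed, and any service that sees the user ID can compute the same answer.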

Once traffic is properly distributed, monitoring becomes essential to keep the test on track and gather actionable data.

Test Progress Monitoring

Monitoring is key to spotting issues early and collecting the data you need for decisions during and after the test. Focus on these key metric categories:

| Metric Category | Specific Measures |
|---|---|
| Performance | Response time, Accuracy |
| User Engagement | Click-through rates, Session duration |
| System Health | Error rates, Resource utilization |

Track performance metrics like response time and accuracy in real time, while user engagement metrics (e.g., click-through rates) can be reviewed hourly.

Set up automated alerts to catch major deviations quickly. Tools like Latitude can help track prompt performance and user interactions, offering insights into how different prompts perform.
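One simple way to define a "major deviation" is to compare the latest reading against a baseline window and flag it when it falls more than a few standard deviations away. The sketch below is only an illustration of that idea: the function name, the threshold of 3, and the latency values are assumptions, and a production setup would more likely express the rule in the monitoring tool itself.

```python
from statistics import mean, stdev


def deviation_alert(baseline: list[float], current: float, threshold: float = 3.0) -> bool:
    """Return True if `current` is more than `threshold` standard deviations from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all counts as a deviation.
        return current != mu
    return abs(current - mu) / sigma > threshold


# Hypothetical response times in seconds for one variant.
recent_latencies = [0.9, 1.0, 1.1, 1.0, 0.95, 1.05]
print(deviation_alert(recent_latencies, 1.02))  # within the normal range
print(deviation_alert(recent_latencies, 2.5))   # far outside the baseline
```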

If anomalies occur, investigate them carefully but avoid rushing to make changes that might compromise the test. Document everything - observations, issues, and any actions taken - to maintain the test's integrity and make future analysis easier.

Test Results Analysis

The focus here is to pull out insights that can directly improve model performance and enhance user experience.

Statistical Analysis Methods

To evaluate LLM test results effectively, start with hypothesis testing to see whether observed differences are larger than chance alone would explain. Use a t-test when comparing the mean of a performance metric between two groups, like a control and a test model. To compare means across three or more groups, such as several model variants or user segments, ANOVA is your go-to method.

| Analysis Type | Purpose and Metrics |
|---|---|
| T-tests | Compare two LLM versions (e.g., Response time, Accuracy) |
| ANOVA | Examine performance across multiple user segments |
| Regression Analysis | Spot correlations in user engagement patterns |

Confidence intervals are also helpful - they offer a clear range to interpret results and guide decisions about deployment.
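The sketch below shows what a two-group comparison might look like using only the Python standard library. It computes Welch's t statistic (a t-test variant that does not assume equal variances) and a confidence interval for the difference in means. Two assumptions to note: the interval uses a normal critical value, which is reasonable only for large samples, and the scores are made-up data. For an exact p-value, a statistics library is the better choice; SciPy's `scipy.stats.ttest_ind` with `equal_var=False` performs Welch's test.

```python
import math
from statistics import NormalDist, mean, variance


def welch_summary(control: list[float], treatment: list[float], confidence: float = 0.95) -> dict:
    """Difference in means, Welch's t statistic, degrees of freedom, and an approximate CI."""
    n_c, n_t = len(control), len(treatment)
    se2_c = variance(control) / n_c      # squared standard error, control
    se2_t = variance(treatment) / n_t    # squared standard error, treatment
    se = math.sqrt(se2_c + se2_t)
    if se == 0:
        raise ValueError("both groups have zero variance")
    diff = mean(treatment) - mean(control)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_c + se2_t) ** 2 / (se2_c**2 / (n_c - 1) + se2_t**2 / (n_t - 1))
    # Large-sample approximation: normal quantile instead of the t quantile.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {"diff": diff, "t": diff / se, "df": df, "ci": (diff - z * se, diff + z * se)}


# Hypothetical per-request quality scores for each variant.
control_scores = [0.71, 0.68, 0.74, 0.70, 0.69, 0.73, 0.72, 0.67]
treatment_scores = [0.75, 0.73, 0.78, 0.74, 0.72, 0.77, 0.76, 0.71]
print(welch_summary(control_scores, treatment_scores))
```

If the interval excludes zero, the data are consistent with a real difference; how wide it is tells you how precisely that difference has been measured.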

Combining Data Types

Numbers tell one side of the story, but user feedback adds essential context to evaluate how the model performs in practical scenarios.

| Data Type | Example Metrics |
|---|---|
| Quantitative | F1 scores, ROUGE-L, Error rates |
| Qualitative | User satisfaction, Response quality |
| System | Resource usage, Latency |

Digging into error patterns can reveal deeper issues, like biases in the training data that might be affecting results.

Analysis with Latitude

Latitude simplifies the often-complex task of analyzing LLM outputs. Its tools are designed to streamline the process by:

Tracking prompt performance metrics in real time

Integrating test results directly into the development pipeline

Highlighting performance trends to support smarter, data-backed improvements

This makes it easier for teams to collaborate and act on insights without delays.

Improving from Test Results

Implementing Test Winners

Before rolling out a winning test variant to all users, it's crucial to confirm its performance across both quantitative metrics (like accuracy and response time) and qualitative metrics (such as user satisfaction). Using feature flags can make this process smoother by enabling phased rollouts. This approach allows teams to monitor performance at each stage and reduce risks. For example, start with 10% of traffic, then gradually increase to 25%, 50%, and finally 100% - but only if performance stays consistent.

| Implementation Phase | Key Actions | Success Indicators |
|---|---|---|
| Pre-deployment | Validate results, prepare rollback plan | Consistent performance, statistical significance |
| Gradual Rollout | Use feature flags, monitor key metrics | Stable results across user groups |
| Full Deployment | Scale to all users, track long-term impact | Sustained improvements, positive user feedback |
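The staged rollout described above can be sketched as a percentage-based feature flag plus a rule for moving between stages. The stage values come from the example in the text. Everything else here is an assumption for illustration: the function names, the flag name, and the policy of rolling back to 0% when metrics degrade.

```python
import hashlib

ROLLOUT_STAGES = [10, 25, 50, 100]  # percent of traffic at each stage


def is_enabled(user_id: str, flag: str, percent: int) -> bool:
    """Percentage feature flag: users enabled at a lower percent stay enabled at higher ones."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < percent


def next_rollout_percent(current: int, metrics_healthy: bool) -> int:
    """Advance one stage if metrics are healthy; otherwise roll back (assumed policy: to 0%)."""
    if current != 0 and current not in ROLLOUT_STAGES:
        raise ValueError(f"unknown rollout stage: {current}")
    if not metrics_healthy:
        return 0
    for stage in ROLLOUT_STAGES:
        if stage > current:
            return stage
    return ROLLOUT_STAGES[-1]


percent = 0
for healthy in [True, True, True, True]:  # hypothetical health checks, one per stage
    percent = next_rollout_percent(percent, healthy)
    print(percent, is_enabled("user-123", "new-model", percent))
```

Keeping each user's bucket fixed means nobody flips back and forth between variants as the rollout percentage grows.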

Beyond simply rolling out changes, it's important to encourage a testing mindset across the organization to ensure long-term progress.

Building Test-Driven Teams

A team focused on testing ensures that every update to your system is based on solid data. This minimizes errors and boosts efficiency. To make this happen, assign clear responsibilities for testing outcomes and establish regular testing cycles with specific goals tied to business objectives.

Tools like Latitude's real-time tracking and integration features can help teams collaborate effectively and stay aligned.

Here are some key practices for building strong, test-focused teams:

Create structured frameworks: Set measurable goals for test design and execution.

Invest in training: Regularly update your team on the latest testing tools and best practices.

Even with a great team and solid processes, it's important to watch out for common testing mistakes that can compromise results.

Common Testing Mistakes

Some common errors in testing can undermine your results. For example, using an insufficient sample size can produce unreliable outcomes, while poorly designed tests may fail to account for external factors.

| Common Mistake | Impact | Prevention Strategy |
|---|---|---|
| Insufficient Sample Size | Unreliable conclusions, false positives | Calculate sample size in advance |
| Poor Variable Control | Confused results, unclear causation | Enforce strict test controls |
| Premature Optimization | Wasted effort, misleading insights | Define clear stopping criteria |

To ensure reliable results, always confirm statistical significance before making decisions. Avoid cutting tests short, as this can lead to false positives and subpar optimizations.
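One way to avoid cutting a test short is to fix the stopping criteria before launch and check them mechanically. The sketch below is a hypothetical guard: the function name and the 7-day minimum (chosen here to cover a full weekly traffic cycle) are assumptions, and `required_n` would come from your own sample size calculation.

```python
def ready_to_evaluate(
    n_control: int,
    n_treatment: int,
    days_run: int,
    required_n: int,
    min_days: int = 7,
) -> bool:
    """True only when both groups reached the planned sample size and the minimum duration passed."""
    enough_samples = n_control >= required_n and n_treatment >= required_n
    enough_time = days_run >= min_days
    return enough_samples and enough_time


# Hypothetical mid-test check: plenty of samples, but only three days of data.
print(ready_to_evaluate(n_control=400, n_treatment=400, days_run=3, required_n=393))
```

Significance is then checked once, after this guard passes, instead of repeatedly while the data is still coming in.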

Conclusion

Main Testing Guidelines

A strong testing framework includes unit testing, functional testing, regression testing, and performance evaluation. To achieve reliable results, teams should balance quantitative metrics with qualitative feedback by focusing on these core areas:

| Testing Component | Implementation Focus | Success Metrics |
|---|---|---|
| Infrastructure Setup | Controlled test environments, monitoring tools | System reliability, data accuracy |
| Quality Assurance | Bias detection, fairness assessment, content control | Ethical compliance, user safety |
| Performance Tracking | Response time, resource utilization | Operational efficiency, cost control |
| User Feedback Loop | Explicit and implicit feedback collection | User satisfaction, feature adoption |

By concentrating on these elements, teams can refine their testing processes to handle the challenges of modern LLM applications.

Next Steps in LLM Testing

To stay competitive, organizations need to embrace advanced methods and tools that improve collaboration between experts and engineers. Platforms like Latitude help streamline prompt engineering workflows and enable effective testing in production environments.

To push LLM testing forward, teams should focus on automating testing pipelines, integrating continuous user feedback, and encouraging collaboration between technical and business teams. Future testing efforts will require flexibility and a commitment to high-quality standards. Teams that prioritize well-structured testing frameworks and collaborative tools will be better equipped to deliver dependable, high-performing LLM solutions.

FAQs

How do you test your prompts?

Testing prompts is essential for improving LLM performance and ensuring user satisfaction. A well-structured process combines the right tools and methods to analyze and optimize results effectively.

| Testing Component | Tool/Method | Key Metrics |
|---|---|---|
| Request Logging | Helicone | Usage, Latency, Cost, TTFT |
| Performance Analysis | Statistical Methods | Response Accuracy, User Feedback |
| Optimization Tools | Latitude | Collaboration, Version Control |

For logging key metrics like usage and latency, tools such as Helicone are invaluable. Use statistical methods to assess response accuracy and user feedback, and rely on platforms like Latitude for collaboration and version management.

To get reliable results, focus on controlled prompt variations, consistent data collection, and thorough statistical analysis. Be mindful of factors that could skew results, such as small sample sizes or short testing periods.
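To show what consistent data collection can mean for latency and time to first token (TTFT), here is a minimal sketch that times a streamed response. It does not use any logging vendor's API. `RequestLog`, `log_stream`, and `fake_stream` are hypothetical names, and the fake stream stands in for a real model client that yields text chunks.

```python
import time
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass
class RequestLog:
    variant: str
    ttft_s: Optional[float]  # time to first chunk; None if the stream was empty
    latency_s: float         # total time until the stream finished
    chunks: int              # rough stand-in for usage in this sketch


def log_stream(variant: str, stream: Iterable[str]) -> tuple[str, RequestLog]:
    """Consume a stream of text chunks and record timing for the request."""
    start = time.perf_counter()
    first_chunk_at = None
    parts = []
    for chunk in stream:
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter()
        parts.append(chunk)
    end = time.perf_counter()
    ttft = None if first_chunk_at is None else first_chunk_at - start
    return "".join(parts), RequestLog(variant, ttft, end - start, len(parts))


def fake_stream() -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response."""
    for chunk in ["Hello", " ", "world"]:
        time.sleep(0.01)
        yield chunk


text, log = log_stream("prompt-b", fake_stream())
print(text, log)
```

Recording the variant alongside each timing is what lets you later compare the two prompt versions on the same metrics.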

Platforms like Latitude also help bridge the gap between domain experts and engineers, making it easier to develop and refine prompts in production environments. By fine-tuning your testing approach, you can ensure your LLM delivers consistent, high-quality outputs and sets the stage for broader A/B testing efforts.